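The AI industry is facing a critical infrastructure bottleneck. Training large language models requires enormous computing resources, edge devices are multiplying at an exponential rate, and GPU scarcity has become the defining constraint of the AI era. Meanwhile, traditional cloud providers struggle to meet surging demand while still holding monopolistic control over access and pricing.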

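Over 50% of generative AI companies report GPU shortages as a major obstacle to scaling their operations. AI computing power is expected to increase roughly 60-fold by the end of 2025 compared with the first quarter of 2023. This compute arms race has opened room for crypto protocols to propose a decentralized alternative.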

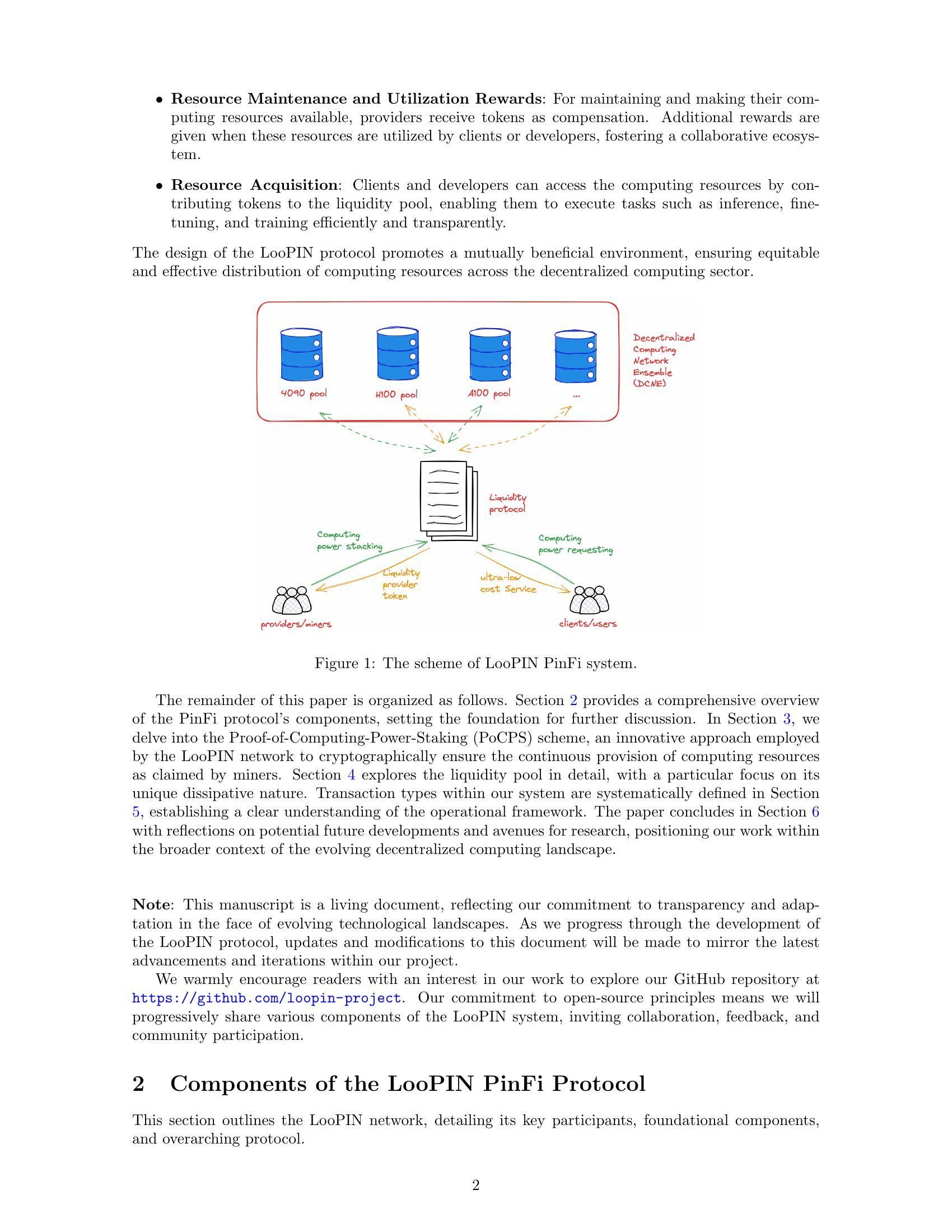

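Enter Physical Infrastructure Finance, or PinFi. This emerging framework treats compute capacity as a tokenizable asset that can be traded, staked, and monetized on blockchain networks. Rather than relying on centralized data centers, PinFi protocols aggregate idle GPU capacity from independent operators, gaming rigs, mining farms, and edge devices into a distributed marketplace that AI developers worldwide can access.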

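The sections below examine how real compute power is turned into crypto-economic infrastructure: how tokenized compute networks work, the economic models that incentivize participation, the architecture that supports verification and settlement, and what all of this may mean for both the crypto and AI industries.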

Why PinFi Now? Macro and Technical Drivers

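The compute bottleneck facing the AI industry stems from fundamental supply constraints. Nvidia allocated nearly 60% of its chip production to enterprise AI clients in Q1 2025, leaving many other users scrambling for access. The global AI chip market reached $123.16 billion in 2024 and is projected to reach $311.58 billion by 2029, reflecting explosive demand that far outstrips manufacturing capacity.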

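GPU scarcity shows up in several forms. Traditional cloud providers keep waiting lists for high-end GPU instances. AWS charges $98.32 per hour for an 8-GPU H100 instance, a price that puts advanced AI capabilities out of reach for many developers and startups. Tight supply also keeps hardware prices high, with HBM3 pricing rising 20-30% year over year.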

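The concentration of compute in the hands of a few large cloud providers adds further friction. By 2025, analysts say over 50% of enterprise workloads will run in the cloud, yet access remains constrained by contract terms, geographic restrictions, and KYC requirements. This centralization stifles innovation and exposes critical infrastructure to single points of failure.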

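At the same time, vast amounts of compute sit idle. Gaming rigs go largely unused during working hours. Crypto miners are looking for new revenue streams as mining economics shift. Data centers hold spare capacity during off-peak hours. The decentralized compute market stood at $9 billion in 2024 and is projected to reach $100 billion by 2032, a sign that the market believes distributed models can unlock this latent supply.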

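The convergence of blockchain technology and physical infrastructure has matured through DePIN (Decentralized Physical Infrastructure Networks). DePIN protocols use token incentives to coordinate the deployment and operation of real-world infrastructure. Messari identified DePIN's total addressable market at $2.2 trillion, with the potential to reach $3.5 trillion by 2028.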

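PinFi applies DePIN principles specifically to compute infrastructure. It treats computing resources as tokenizable assets that generate yield through actual use. The framework turns compute from a service rented from centralized providers into a commodity traded in an open, permissionless marketplace.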

What Are PinFi and Tokenized Compute?

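Physical Infrastructure Finance defines a model in which physical computing assets are represented as digital tokens on a blockchain, so that ownership, operation, and monetization can all be decentralized. Unlike traditional DeFi protocols, which deal only with purely digital assets, PinFi builds a bridge between on-chain economic systems and off-chain physical resources.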

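Academic research defines tokenization as "the process of converting rights, units of asset ownership, debt, or even physical assets into digital tokens on a blockchain." For compute resources, this means individual GPUs, server clusters, or edge devices are mapped to tokens that track their capacity, availability, and usage.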

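PinFi differs fundamentally from both conventional infrastructure finance and typical DeFi protocols. Traditional infrastructure finance mostly involves long-term debt or equity investment in large capital projects. DeFi protocols focus mainly on trading, lending, or yield generation with crypto-native assets. PinFi sits at the intersection of the two: it uses crypto-economic incentives to coordinate real-world computing resources while keeping settlement and governance on-chain.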

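Several protocols illustrate the PinFi model. Bittensor operates as a decentralized AI network in which participants contribute machine learning models and compute to subnets dedicated to specific tasks. The TAO token rewards participants according to the informational value they add to the network's collective intelligence. With over 7,000 miners contributing compute, Bittensor has created a market for AI inference and model training.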

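Render Network aggregates idle GPUs globally to handle distributed GPU rendering jobs. Originally focused on 3D rendering for artists and content creators, it has since expanded into AI compute workloads. The RNDR token serves as payment for rendering jobs and rewards the providers who contribute GPU capacity.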

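Akash Network operates as a decentralized cloud marketplace that taps unused data center capacity. Through a reverse auction mechanism, compute buyers post their specifications and providers bid to supply the resources. The AKT token is used for governance, staking, and network settlement. Akash saw a notable surge in quarterly active leases after expanding its focus from traditional CPU resources to GPUs.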

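io.net has aggregated over 300,000 verified GPUs by integrating resources from independent data centers, crypto miners, and other DePIN networks, including Render and Filecoin. The platform focuses on AI and machine learning workloads and lets developers deploy GPU clusters across 130 countries within minutes.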

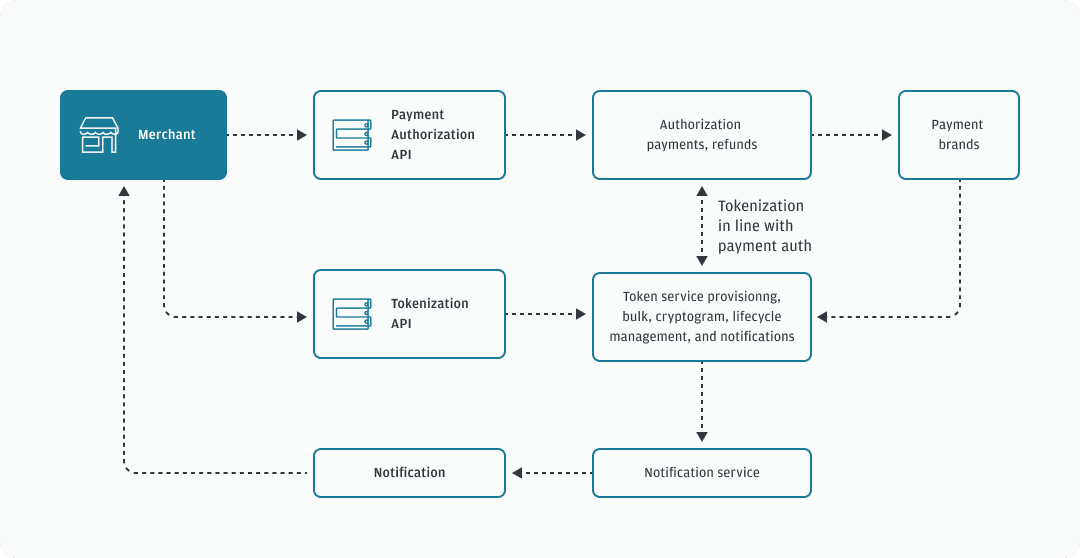

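Across these protocols, tokenized compute follows a consistent pattern. Compute providers first register their hardware with the network and go through a verification process that confirms its capacity and capabilities. Smart contracts manage the relationship between supply and demand, assigning compute jobs to available nodes according to requirements, price, and geographic constraints. Token rewards incentivize both hardware provision and high-quality service.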

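Value is generated by actual usage rather than pure speculation. When AI developers train models on distributed GPU resources, payment flows to the hardware providers that actually perform the work. Compute thus becomes a productive, yield-generating asset, much as proof-of-stake (PoS) validators earn rewards for securing a network. This creates a sustainable economic foundation in which token value is closely tied to network utility.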

Infrastructure Architecture: Nodes, Marketplaces, and Settlement

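The architecture behind tokenized compute requires coordination across several layers. At the base is a network of independent compute providers who deploy hardware, register with the protocol, and make capacity available for rent. These providers may be individuals with gaming PCs, professional data center operators, or crypto mining operations seeking additional revenue.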

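Node provisioning begins when a compute provider connects hardware to the network. Protocols like io.net support diverse GPU types, from the consumer-grade NVIDIA RTX 4090 to the enterprise-grade H100 and A100. Providers install client software that exposes their capacity to the network's orchestration layer while maintaining security boundaries that prevent unauthorized access.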

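Verification mechanisms ensure that claimed capacity matches actual capability. Some protocols use cryptographic proofs of compute, in which nodes must demonstrate under verifiable conditions that they executed a specific computation correctly, which also tests their performance and availability. Bittensor uses its Yuma consensus mechanism, in which validators evaluate the quality of the machine learning outputs that miners produce and assign scores that determine reward distribution. Nodes that deliver low-quality results or attempt to cheat earn lower rewards or may have their staked tokens slashed.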
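The idea of checking a node's claimed work can be illustrated with a much simpler stand-in than a cryptographic proof: the verifier re-executes a small deterministic task and compares results. The sketch below is an assumption for illustration only; `run_task` and `spot_check` are hypothetical names, and real protocols use more efficient schemes than full re-execution.

```python
import hashlib

def run_task(seed: int, rounds: int = 1000) -> str:
    """Deterministic stand-in workload: hash a seed repeatedly."""
    digest = str(seed).encode()
    for _ in range(rounds):
        digest = hashlib.sha256(digest).digest()
    return digest.hex()

def spot_check(node_answer: str, seed: int) -> bool:
    """Verifier recomputes the same task and compares the results."""
    return node_answer == run_task(seed)

honest_answer = run_task(42)
print(spot_check(honest_answer, 42))  # honest node passes
print(spot_check("deadbeef", 42))     # fabricated answer fails
```

Re-execution costs the verifier as much as the original job, which is why it only makes sense for small random samples of a node's work.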

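Latency benchmarking helps match workloads to suitable hardware. AI inference has different performance requirements than model training or 3D rendering. Geographic location affects latency in edge computing applications, where processing must happen close to the data source. The edge computing market reached $23.65 billion in 2024 and is projected to grow to $327.79 billion by 2033, driven mainly by demand for localized processing.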

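The marketplace layer connects compute demand with supply. When developers need GPU resources, they specify their requirements, including compute capacity, memory, duration, and the maximum price they will accept. Akash uses a reverse auction model in which the deployer sets the terms and providers then bid for the contract. Render uses dynamic pricing algorithms that adjust rates according to network utilization and market conditions.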
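A reverse auction of this kind can be sketched in a few lines: the requester states minimum requirements and a price ceiling, and the cheapest qualifying bid wins. This is a minimal illustration, not Akash's actual bidding engine; the `Bid` fields and `select_bid` function are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Bid:
    provider: str
    gpu_memory_gb: int
    price_per_hour: float

def select_bid(bids, min_memory_gb, max_price):
    """Return the cheapest bid that meets the requester's terms, or None."""
    eligible = [
        b for b in bids
        if b.gpu_memory_gb >= min_memory_gb and b.price_per_hour <= max_price
    ]
    return min(eligible, key=lambda b: b.price_per_hour, default=None)

bids = [
    Bid("a", 24, 0.40),   # too little memory
    Bid("b", 80, 1.90),
    Bid("c", 80, 1.50),   # cheapest qualifying bid
    Bid("d", 80, 2.60),   # above the price ceiling
]
winner = select_bid(bids, min_memory_gb=80, max_price=2.00)
print(winner.provider)  # c
```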

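Job routing algorithms optimize how compute tasks are placed across available nodes. Factors include hardware specifications, current utilization, geographic proximity, historical performance, and price. io.net's orchestration layer handles containerized workflows and supports Ray-native orchestration for distributed machine learning.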
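One simple way to combine these routing factors is a weighted score per node. The sketch below assumes arbitrary weights and normalization limits chosen purely for illustration; it does not describe any specific protocol's router.

```python
from dataclasses import dataclass

@dataclass
class Node:
    name: str
    utilization: float     # 0.0 (idle) to 1.0 (fully busy)
    latency_ms: float      # measured latency to the requester
    reliability: float     # share of past jobs completed successfully
    price_per_hour: float

def score(node, max_latency_ms=200.0, max_price=5.0):
    """Higher is better. The weights are illustrative assumptions."""
    return (
        0.3 * (1 - node.utilization)
        + 0.2 * (1 - min(node.latency_ms / max_latency_ms, 1.0))
        + 0.3 * node.reliability
        + 0.2 * (1 - min(node.price_per_hour / max_price, 1.0))
    )

def route(nodes):
    """Pick the node with the highest combined score."""
    return max(nodes, key=score)

nodes = [
    Node("near-busy", 0.9, 20, 0.99, 2.0),
    Node("far-idle", 0.1, 180, 0.95, 1.0),
    Node("near-idle", 0.2, 30, 0.97, 1.5),
]
print(route(nodes).name)  # near-idle
```

A production router would also filter on hard constraints (hardware model, jurisdiction) before scoring.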

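Settlement takes place through on-chain smart contracts that hold payment in escrow and release it once the work has been completed and verified. This trustless settlement removes counterparty risk and makes micropayments for short-lived compute jobs practical. Protocols built on high-throughput blockchains such as Solana can handle the transaction volume generated by thousands of simultaneous inference requests.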
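The escrow flow can be modeled off-chain as a small state machine: funds are locked, then either released to the provider after verification or refunded to the payer. This Python class is a conceptual sketch under that assumption, not smart contract code, and the `Escrow` name and states are hypothetical.

```python
class Escrow:
    """Holds a payment until the job is verified, then pays or refunds."""

    def __init__(self, payer: str, provider: str, amount: int):
        self.payer = payer
        self.provider = provider
        self.amount = amount
        self.state = "FUNDED"

    def settle(self, verified: bool):
        """Release to the provider if verified, otherwise refund the payer."""
        if self.state != "FUNDED":
            raise RuntimeError("escrow already settled")
        self.state = "RELEASED" if verified else "REFUNDED"
        recipient = self.provider if verified else self.payer
        return recipient, self.amount

escrow = Escrow("dev", "gpu-node-1", 25)
print(escrow.settle(verified=True))  # ('gpu-node-1', 25)
```

The guard against settling twice is the part that matters most: it is what prevents a payment from being released and refunded for the same job.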

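Staking mechanisms align incentives among participants. Compute providers typically stake tokens to demonstrate commitment and to post collateral that can be slashed for poor performance. Validators in Bittensor stake TAO to gain influence over miner scoring and to earn a share of block rewards. Token holders can delegate stake to validators they trust, much as in proof-of-stake consensus.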

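Governance lets token holders vote on protocol parameters, including reward distribution, fee structures, and network upgrades. Decentralized governance ensures that no central authority can unilaterally change the rules or restrict access, which preserves the permissionless character that sets these networks apart from traditional cloud providers.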

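This architecture stands in sharp contrast to centralized cloud computing. The major providers own their infrastructure, set prices without real market competition, require account registration and compliance checks, and retain control over access and censorship. PinFi protocols spread ownership across thousands of independent operators, enable transparent market-based pricing, operate permissionlessly, and resist censorship through decentralization.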

Tokenomics and Incentive Models

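Tokenomics provides the incentive structure that coordinates distributed compute networks. Native tokens serve several functions: paying for services, rewarding resource providers, conferring governance rights, and meeting the staking requirements for network participation.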

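Issuance mechanisms determine how tokens enter circulation. Bittensor follows Bitcoin's model, capping TAO supply at 21 million tokens and reducing issuance over time through periodic halvings. Currently 7,200 TAO are minted each day and split between the miners who contribute compute and the validators who maintain network quality. This creates Bitcoin-like scarcity while directing inflation toward productive infrastructure.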
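The arithmetic of a halving schedule can be worked through with the two figures given above (a 21 million cap and 7,200 tokens per day). The sketch assumes, by analogy with Bitcoin, that each era issues half of the supply still remaining; that rule is an assumption made for illustration, and the actual Bittensor schedule may differ in its details.

```python
def daily_emission(initial_per_day: float, halvings: int) -> float:
    """Daily issuance after a given number of halvings."""
    return initial_per_day / (2 ** halvings)

def era_length_days(cap: float, initial_per_day: float, era: int) -> float:
    """Days in era `era` (0-based), assuming each era issues half of what remains."""
    issued_in_era = cap / (2 ** (era + 1))
    return issued_in_era / daily_emission(initial_per_day, era)

CAP = 21_000_000
INITIAL_PER_DAY = 7_200

for era in range(3):
    print(
        era,
        daily_emission(INITIAL_PER_DAY, era),
        round(era_length_days(CAP, INITIAL_PER_DAY, era), 1),
    )
```

Under this assumption every era lasts the same number of days (about 1,458, or roughly four years), because both the tokens issued per era and the daily rate halve together.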

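Other protocols issue tokens according to network usage. As compute jobs run, newly minted tokens flow to providers in proportion to the resources they contributed. Linking issuance directly to value creation ensures that inflation rewards actual productivity rather than passive holding.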
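Proportional distribution is a straightforward pro-rata split. The sketch below assumes contribution is measured in GPU-hours, which is one plausible unit rather than something the protocols above are confirmed to use; `distribute` is a hypothetical helper.

```python
def distribute(minted: float, gpu_hours: dict) -> dict:
    """Split newly minted tokens pro rata by contributed GPU-hours."""
    total = sum(gpu_hours.values())
    if total == 0:
        return {provider: 0.0 for provider in gpu_hours}
    return {
        provider: minted * hours / total
        for provider, hours in gpu_hours.items()
    }

rewards = distribute(1000, {"alice": 30, "bob": 10, "carol": 60})
print(rewards)
```

With 100 GPU-hours in total, each provider's share of the 1,000 minted tokens equals its percentage of the work.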

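Staking gives network participants skin in the game. Compute providers stake tokens to register nodes and demonstrate commitment. Poor performance or attempted fraud triggers slashing, in which staked tokens are burned or redistributed to the affected parties. This economic penalty creates an incentive to deliver reliable service and behave honestly.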
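As a rough sketch of the slashing step, the function below removes a fraction of a provider's stake and records whether the penalty is burned or redistributed. The fraction and the `burn` switch are illustrative assumptions; each protocol sets its own penalty rules.

```python
def slash(stake: float, fraction: float, burn: bool = True) -> dict:
    """Apply a slashing penalty and report where the penalty goes."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    penalty = stake * fraction
    return {
        "remaining": stake - penalty,
        "burned": penalty if burn else 0.0,
        "redistributed": 0.0 if burn else penalty,
    }

print(slash(1000, 0.25))              # penalty is burned
print(slash(1000, 0.25, burn=False))  # penalty goes to affected parties
```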

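Validators stake larger amounts to gain more influence over quality assessment and governance decisions. In Bittensor's model, validators evaluate miner outputs and submit weight matrices indicating which nodes made valuable contributions. Yuma consensus aggregates these assessments, weighted by validator stake, to determine the final reward distribution.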
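The core idea of stake-weighted aggregation can be shown with a plain weighted average. This is a deliberately simplified stand-in: the actual Yuma consensus is more involved and is not reproduced here, and the `aggregate` function and sample data are hypothetical.

```python
def aggregate(weights: dict, stakes: dict) -> dict:
    """Stake-weighted average of validators' weight vectors over miners."""
    total_stake = sum(stakes[v] for v in weights)
    miners = sorted({m for w in weights.values() for m in w})
    combined = {
        m: sum(stakes[v] * w.get(m, 0.0) for v, w in weights.items()) / total_stake
        for m in miners
    }
    norm = sum(combined.values())
    # Normalize so the reward shares sum to 1.
    return {m: s / norm for m, s in combined.items()} if norm else combined

weights = {
    "val1": {"minerA": 0.8, "minerB": 0.2},
    "val2": {"minerA": 0.5, "minerB": 0.5},
}
stakes = {"val1": 300, "val2": 100}
shares = aggregate(weights, stakes)
print(shares)
```

Because `val1` holds three times the stake of `val2`, its preference for `minerA` dominates: `minerA` receives 72.5% of the reward and `minerB` 27.5%.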

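The supply and demand dynamics of compute tokens operate on two levels. On the supply side, every node that joins the network adds to the available compute capacity. Token rewards must be high enough to cover hardware costs, electricity, and the opportunity cost of putting the equipment to other uses. When token prices rise, supplying compute becomes more profitable, which attracts more supply.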
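A provider's decision can be framed as a break-even calculation: at what token price do daily rewards cover electricity and hardware depreciation? All figures in this sketch are made-up illustration values, and opportunity cost is left out for simplicity.

```python
def daily_cost(power_kw, price_per_kwh, hardware_cost, lifetime_days):
    """Electricity plus straight-line hardware depreciation, per day."""
    electricity = power_kw * 24 * price_per_kwh
    depreciation = hardware_cost / lifetime_days
    return electricity + depreciation

def break_even_token_price(tokens_per_day, cost_per_day):
    """Token price at which daily rewards exactly cover daily cost."""
    return cost_per_day / tokens_per_day

# Hypothetical node: 0.4 kW draw, $0.15/kWh, $1,800 GPU over 1,000 days.
cost = daily_cost(power_kw=0.4, price_per_kwh=0.15,
                  hardware_cost=1800, lifetime_days=1000)
price = break_even_token_price(tokens_per_day=2, cost_per_day=cost)
print(round(cost, 2), round(price, 2))  # 3.24 1.62
```

Below the break-even price the node loses money on every job, which is the mechanism by which falling token prices shrink supply.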

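On the demand side, the token price reflects how much users value access to the network. As AI applications proliferate and compute becomes scarcer, users become willing to pay more for decentralized resources. The AI hardware market is expected to grow from $66.8 billion in 2025 to $296.3 billion by 2034, creating sustained demand for alternative sources of compute.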

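Token appreciation benefits every class of participant. Hardware providers earn more for the same compute output. Early node operators benefit from appreciation in the rewards they have accumulated. Developers gain a decentralized alternative to expensive centralized providers. Token holders who stake or provide liquidity earn fee income from network activity.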

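Risk models address potential failure modes. Node downtime reduces earnings because jobs are routed to other available nodes. Geographic concentration creates latency problems for edge applications that need local processing. Network effects favor larger protocols with more diverse hardware and wider geographic distribution.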

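Token inflation must strike a balance between attracting new supply and preserving value for existing holders. Research on decentralized infrastructure protocols indicates that sustainable tokenomics requires demand to grow faster than supply. Protocols implement burn mechanisms that permanently remove tokens used for payment from circulation, creating deflationary pressure that offsets inflationary issuance.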
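The interplay of issuance and burning reduces to simple arithmetic: net supply change equals tokens emitted minus tokens burned. The sketch assumes a fixed share of fees is burned; the 50% burn rate and the fee volumes are illustrative numbers only.

```python
def net_supply_change(emitted: float, fees_paid: float, burn_rate: float) -> float:
    """Positive means net inflation; negative means net deflation."""
    return emitted - fees_paid * burn_rate

# Same daily emission, two levels of network usage (in tokens of fees).
print(net_supply_change(7200, 10_000, 0.5))  # 2200.0: still inflationary
print(net_supply_change(7200, 20_000, 0.5))  # -2800.0: usage outpaces issuance
```

The sign flips once fee volume times the burn rate exceeds emission, which is one concrete reading of the claim that demand growth must outpace supply growth.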

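Fee structures vary from network to network. Some charge users directly in the native token. Others accept stablecoins or wrapped versions of major cryptocurrencies and reserve the protocol token mainly for governance and staking. Hybrid models use the token as a credential for network access while settling actual compute payments in stable assets to reduce exposure to price volatility.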

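The design space for incentive models continues to evolve as protocols experiment with different ways to balance stakeholder interests and sustain long-term growth.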

AI, Edge, and Real-World Infrastructure

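Tokenized compute networks allow applications to draw on distributed hardware for AI workloads, edge computing, and specialized infrastructure needs. The range of use cases shows how decentralized models can relieve bottlenecks across the compute stack.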

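Distributed AI model training is one of the primary applications. Training large language models or computer vision systems requires massively parallel computation across many GPUs. The traditional approach concentrates this training in data centers owned by the major cloud providers. Decentralized networks allow training to run on geographically dispersed nodes, each contributing part of the computation, with blockchain-based mechanisms coordinating the whole.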

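Bittensor's subnet architecture supports specialized AI markets for particular tasks such as text generation, image synthesis, or data scraping. Miners compete within their chosen domain to deliver high-quality outputs, while validators assess performance and allocate rewards accordingly. The result is a competitive market in which the best models and most efficient implementations emerge through economic selection.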

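Edge computing workloads benefit especially from decentralized infrastructure. The global edge computing market was valued at $23.65 billion in 2024, with growth driven by applications that need low latency and processing close to the data source. IoT devices that produce continuous sensor data need real-time analysis and cannot afford the round trip to a distant data center. Autonomous vehicles must make split-second decisions and cannot tolerate network latency.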

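Decentralized compute networks can place capacity physically close to data sources. A factory deploying industrial IoT sensors can rent edge nodes in the same city or region rather than depending on a centralized cloud hundreds of miles away. Industrial IoT applications accounted for the largest market share in edge computing in 2024, reflecting how critical localized processing is for manufacturing and logistics.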

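Content rendering and creative workflows consume large amounts of GPU resources. Artists rendering 3D scenes, animators producing films, and game developers compiling assets all need highly parallel computation. Render Network specializes in distributed GPU rendering and connects creators with idle GPU capacity around the world. The marketplace model lowers rendering costs while giving GPU owners a way to earn during off-peak hours.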

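Scientific computing and research applications often face budget constraints when using expensive cloud resources. Academic institutions, independent researchers, and small and mid-sized organizations can use decentralized networks to run simulations, analyze datasets, or train specialized models. Because access is permissionless, researchers anywhere can obtain compute without an institutional cloud account or a credit check.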

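Gaming and metaverse platforms need rendering and physics computation to deliver immersive experiences. As virtual worlds grow more complex, so does the compute required to maintain persistent environments and support thousands of concurrent users. Compute nodes distributed at the edge can provide local processing for regional player populations, cutting latency while spreading infrastructure costs across token-incentivized providers.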

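AI inference at scale requires continuous GPU access to serve predictions from trained models. A chatbot answering millions of queries, an image generation service processing user prompts, or a recommendation engine analyzing user behavior all need compute that is always available. Decentralized networks offer redundancy and geographic distribution, which can improve reliability compared with dependence on a single provider.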

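Regions underserved by the major cloud providers present an opportunity for PinFi protocols. Areas with low data center density face higher latency and cost when accessing centralized infrastructure. Local hardware providers in these regions can supply compute to meet regional demand, earning token rewards while improving local access to AI capabilities.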

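Data sovereignty requirements are tightening, and a growing number of rules mandate that certain workloads be processed within specific jurisdictions. Regulations like the EU Data Act require sensitive information to be processed locally, which encourages the deployment of edge infrastructure that complies with data residency rules. Decentralized networks naturally support jurisdiction-specific node deployment while maintaining global coordination through blockchain-based settlement.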

Why It Matters: Implications for Crypto and Infrastructure

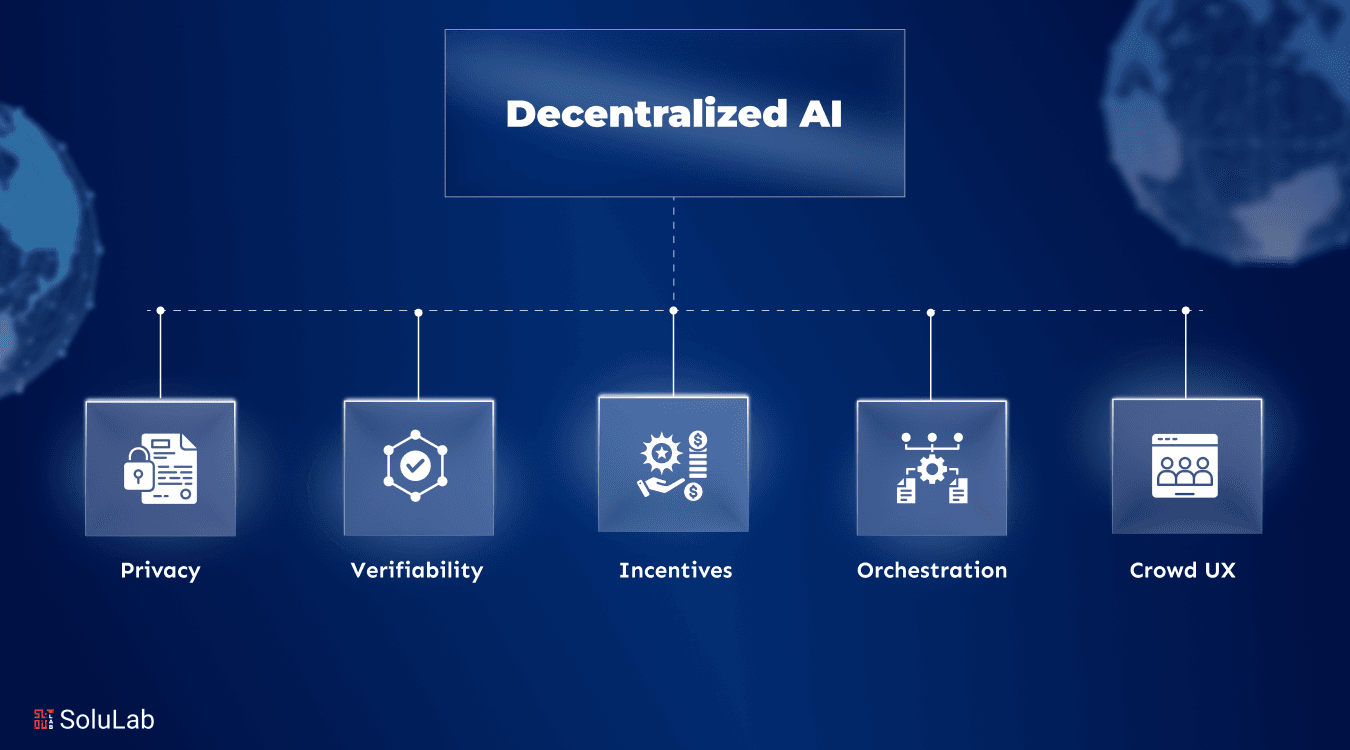

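The emergence of PinFi marks crypto's expansion from purely financial applications into the coordination of real-world infrastructure. The shift has important implications for both the crypto ecosystem and the broader computing industry.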

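When crypto protocols solve real infrastructure problems, they demonstrate utility beyond speculation. DePIN and PinFi create economic systems that coordinate physical resources, showing that blockchain incentives can bootstrap real-world networks. The DePIN sector's total addressable market is currently around $2.2 trillion and could reach $3.5 trillion by 2028, roughly three times today's total crypto market capitalization.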

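Democratizing access to compute addresses a fundamental asymmetry in AI development. Today, advanced AI capabilities are concentrated largely in well-funded technology companies that can afford massive GPU clusters. Startups, researchers, and developers in resource-constrained environments face steep barriers to participating in AI innovation. Decentralized compute networks lower those barriers by offering permissionless access to distributed hardware at market-determined prices.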

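The creation of a new asset class broadens the crypto investment landscape. Compute tokens represent ownership in productive infrastructure that generates revenue through real-world use. That sets them apart from purely speculative assets or governance tokens that lack a clear value-capture mechanism. Token holders in effect own a stake in a decentralized cloud provider whose value is tied closely to demand for compute services.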

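Traditional infrastructure monopolies may face disruption. Centralized cloud providers including AWS, Microsoft Azure and Google Cloud maintain oligopolistic control over compute markets and can set prices without direct competition. Decentralized alternatives introduce market dynamics in which thousands of independent providers compete, which could push costs down while improving accessibility.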

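The AI industry stands to benefit from reduced dependence on centralized infrastructure. AI development today clusters heavily around the major cloud providers, creating single points of failure and concentration risk. Over 50% of generative AI companies report GPU shortages as major obstacles. Distributed networks offer alternative capacity that can absorb overflow demand and provide redundancy against supply chain disruptions.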

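Better capacity utilization may also bring energy efficiency gains. Idle gaming rigs draw power on standby without producing anything. Mining operations with spare capacity are looking for additional revenue. Distributed networks put idle GPUs to productive use, improving resource efficiency across the compute ecosystem.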

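Censorship resistance is becoming increasingly important for AI applications. Centralized cloud providers can deny service to particular users, applications, or entire geographic regions. Decentralized networks operate permissionlessly, so AI development and deployment need no gatekeeper's approval. This matters especially for controversial applications and for users in heavily restricted jurisdictions.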

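Data privacy architectures can also improve through local processing. Edge computing keeps sensitive data near its source instead of transmitting it to distant data centers. Decentralized networks can implement privacy-preserving techniques such as federated learning, in which models are trained on distributed data without centralizing the raw information.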

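Market efficiency improves through transparent price discovery. Traditional cloud pricing is often opaque, with complex rate structures and negotiated enterprise contracts. Decentralized marketplaces establish clear real-time prices for compute resources, letting developers optimize costs and providers maximize revenue under competitive conditions.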

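The long-term significance comes from sustained demand drivers. AI workloads will keep growing as applications proliferate. The AI hardware market is expected to grow from $66.8 billion in 2025 to $296.3 billion by 2034. Compute will remain a fundamental bottleneck, ensuring lasting demand for alternative infrastructure models.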

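Network effects will favor the protocols that reach critical mass first. As more hardware providers join, the diversity of available resources increases. Geographic coverage improves, which lowers latency for edge applications. Larger networks attract more developers, creating a virtuous cycle of growth. First movers in particular domains may establish durable advantages.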

Challenges and Risks

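Despite the promising applications, tokenized compute networks face significant technical, economic, and regulatory challenges that could limit their growth or adoption.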

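Technical reliability remains a primary concern. Centralized cloud providers offer service level agreements (SLAs) that guarantee uptime and performance. Distributed networks must coordinate hardware from independent operators with widely varying levels of professionalism and infrastructure quality. Node failures, network outages, and maintenance windows all create availability gaps that must be managed through redundancy and routing algorithms.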

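Verifying that work was actually performed is a persistent challenge. Ensuring that nodes execute computations honestly rather than returning false results requires sophisticated proof systems. Cryptographic proofs of compute add overhead but remain necessary to prevent fraud. Where verification is incomplete, malicious nodes can claim rewards for computation they never performed.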

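Latency and bandwidth limits affect distributed workloads. Running computations across geographically dispersed locations can cause delays compared with hardware co-located in a single data center. Network bandwidth between nodes limits the kinds of workloads that suit distributed processing. Tightly coupled parallel computations that require frequent inter-node communication suffer degraded performance.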

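Variability in service quality creates uncertainty for production applications. Unlike managed cloud environments with predictable performance, heterogeneous hardware pools produce inconsistent results. Depending on availability, a training run might be assigned to an enterprise-grade H100 or to a consumer-grade RTX card. Application developers must either design for this variability or implement filters that restrict jobs to specific hardware tiers.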
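A hardware-tier filter of the kind described can be as simple as an allow-list applied before job placement. The node records and the `filter_by_tier` helper below are hypothetical; the GPU model names are the ones mentioned earlier in this article.

```python
nodes = [
    {"id": "n1", "gpu": "H100"},
    {"id": "n2", "gpu": "RTX 4090"},
    {"id": "n3", "gpu": "A100"},
]

def filter_by_tier(nodes, allowed_gpus):
    """Keep only nodes whose GPU model is on the allow-list."""
    return [n for n in nodes if n["gpu"] in allowed_gpus]

enterprise_only = filter_by_tier(nodes, {"H100", "A100"})
print([n["id"] for n in enterprise_only])  # ['n1', 'n3']
```

The trade-off is availability: the stricter the allow-list, the smaller the pool of nodes that can pick up the job.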

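Economic sustainability requires a balance between supply growth and demand expansion. If available compute grows quickly while demand fails to keep pace, token prices fall and providers become less profitable. Protocols must manage token issuance carefully so that inflation does not outrun growth in real utility. Sustainable tokenomics requires demand growth to outpace supply increases.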

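Token value compression poses a risk to long-term participants. As new providers join the network in pursuit of rewards, intensifying competition drives down earnings per node. Early participants enjoy higher initial returns, but those returns may shrink over time. If token appreciation does not offset this dilution, provider churn will rise and undermine network stability.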

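Market volatility creates financial risk for participants. Providers earn rewards denominated in a native token whose value fluctuates. A hardware operator may invest capital in GPUs on the expectation that the token price will hold, only to face losses when it falls. Hedging mechanisms and stablecoin payment options can reduce volatility risk, but they add complexity to the system.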

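Regulatory uncertainty around token classification creates compliance challenges. Securities regulators in various jurisdictions are evaluating whether compute tokens constitute securities subject to registration. The ambiguous legal status limits institutional participation and exposes protocol developers to potential liability. Infrastructure tokenization faces regulatory uncertainty, which has limited its adoption relative to traditional financing structures.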

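Data protection regulations impose requirements that distributed networks must follow. Processing the data of EU citizens requires GDPR compliance, including data minimization and the right to erasure. Healthcare applications must satisfy HIPAA requirements. Financial applications face anti-money-laundering obligations. When data moves across multiple jurisdictions and independent operators, decentralization makes compliance more complicated.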

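Hardware contributions may draw regulatory scrutiny depending on how they are structured. Some jurisdictions may classify certain provider arrangements as securities offerings or regulated financial products. The line between providing infrastructure and entering an investment contract remains unclear in many legal frameworks.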

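Competition from hyperscale cloud providers continues to intensify. The major providers are investing billions of dollars in new data center capacity and custom AI accelerators. AWS, Microsoft, and Google increased capital expenditure by 36% in 2024, largely for AI infrastructure. These well-capitalized incumbents can cut prices or bundle compute with other services to protect their market share.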

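Network fragmentation may limit composability. Multiple competing protocols create siloed ecosystems in which compute resources cannot move easily between networks. A lack of standardization in APIs, verification mechanisms, or token standards reduces efficiency and raises switching costs for developers.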

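Early-adopter risk affects protocols that have not yet built a track record. New networks face a chicken-and-egg problem in attracting hardware providers and compute buyers at the same time. Some protocols may never reach the critical mass needed for sustainable operation. If a network collapses or fails to gain adoption, token investors risk losing their entire investment.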

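Security vulnerabilities in smart contracts or the orchestration layer can lead to stolen funds or network outages. Decentralized networks face security challenges that call for rigorous smart contract audits and bug bounty programs. Exploits that drain treasuries or enable double-spend attacks damage trust and network value.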

The Road Ahead and What to Watch

Tracking key metrics and developments offers insight into the maturity and growth trajectory of tokenized compute networks.

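Network growth metrics include the number of active compute nodes, their geographic distribution, the diversity of hardware types, and total available capacity measured in compute power or GPU equivalents. Expansion in these metrics signals growing supply and network resilience. io.net accumulated over 300,000 verified GPUs by integrating multiple sources, showing how quickly a network can scale when a protocol coordinates dispersed resources effectively.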

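Usage metrics reveal the actual demand for distributed compute. Active compute jobs, total processing hours delivered, and the mix of workload types show whether a network serves real applications beyond speculation. Akash saw a notable surge in quarterly active leases after expanding GPU support, indicating market demand for distributed alternatives to the cloud.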

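Token market capitalization and fully diluted valuation show how the market values a protocol. Comparing valuation with actual revenue or compute throughput reveals whether a token has already priced in expected future growth or mainly reflects current utility. Bittensor's TAO token reached $750 at the peak of the hype in March 2024, illustrating the speculative interest that exists alongside real adoption.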

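Partnerships with AI companies and enterprise adopters signal mainstream validation. When established AI labs, model developers, or production applications deploy workloads on distributed networks, it shows that distributed infrastructure can meet real-world requirements. Toyota and NTT announced a $3.3 billion investment in a Mobility AI Platform using edge computing, demonstrating enterprise commitment to distributed architectures.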

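Protocol upgrades and new features reflect continued development momentum. Integration of new GPU types, improved orchestration systems, and stronger verification or governance mechanisms all indicate ongoing iteration toward better infrastructure. Bittensor's Dynamic TAO upgrade in 2025 shifted more rewards toward high-performing subnets, an example of adaptive tokenomics.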

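Regulatory developments will shape the operating environment. Favorable classification of infrastructure tokens or clear compliance guidance would reduce legal uncertainty and enable broader institutional participation. Conversely, overly strict regulation could limit growth in specific jurisdictions.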

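Competitive dynamics among protocols will determine market structure. The compute infrastructure space may consolidate around a few dominant networks with strong network effects, or it may remain fragmented, with multiple specialized protocols serving different niches. Interoperability standards could enable cross-network coordination and improve efficiency across the ecosystem.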

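Hybrid models that combine centralized and decentralized elements may emerge. Enterprises might use traditional cloud for baseline capacity and burst to distributed networks when demand peaks. This approach retains the predictability of managed services while capturing cost savings from distributed alternatives during peak periods.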
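The overflow pattern amounts to filling baseline capacity first and sending only the excess elsewhere. The sketch below is a minimal illustration with made-up GPU counts; `split_load` is a hypothetical helper, not part of any cloud or protocol API.

```python
def split_load(demand_gpus: int, baseline_capacity: int):
    """Return (GPUs served by the baseline cloud, GPUs burst to a distributed network)."""
    on_cloud = min(demand_gpus, baseline_capacity)
    overflow = max(demand_gpus - baseline_capacity, 0)
    return on_cloud, overflow

print(split_load(demand_gpus=40, baseline_capacity=64))   # (40, 0): no burst needed
print(split_load(demand_gpus=100, baseline_capacity=64))  # (64, 36): 36 GPUs burst out
```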

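Consortium networks, in which industry participants jointly operate distributed infrastructure, may also appear. AI companies, cloud providers, hardware manufacturers, or academic institutions could establish shared networks that reduce individual capital requirements while maintaining decentralized governance. This model could accelerate adoption among risk-averse organizations.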

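Vertical specialization appears likely as protocols optimize for particular use cases. Some networks may focus on AI training, others on inference, edge computing, rendering, or scientific computing. Specialized infrastructure can serve the requirements of a given workload better than general-purpose alternatives.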

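Integration with existing AI tools and frameworks will be critical. Seamless compatibility with mainstream machine learning libraries, orchestration systems, and deployment pipelines lowers the barrier to adoption for developers. io.net supports Ray-native orchestration, reflecting developers' preference for standardized workflows over custom, protocol-specific implementations.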

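Sustainability considerations may increasingly influence protocol design. Renewable-energy incentives for node operators, energy-efficient consensus mechanisms, or integrated carbon credit schemes could all differentiate a protocol for environmentally conscious users. As AI's energy consumption draws scrutiny, distributed networks may position efficiency as a competitive advantage.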

Media coverage and crypto community attention serve as leading indicators of mainstream awareness. Increased discussion of specific protocols, rising search interest, or a growing social media following often precedes broader adoption and token price appreciation. However, hype cycles can create misleading signals disconnected from fundamental growth.

Conclusion

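Physical Infrastructure Finance represents a new stage in crypto's evolution: the coordination of real-world computing resources. By tokenizing compute capacity, PinFi protocols create markets in which idle GPUs become productive assets that earn revenue from AI workloads, edge computing, and specialized infrastructure needs.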

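The convergence of AI's nearly insatiable demand for compute with crypto's ability to coordinate distributed systems through economic incentives creates a compelling value proposition. GPU shortages affecting over 50% of generative AI companies show how severe the infrastructure bottleneck is. Decentralized compute markets growing from $9 billion in 2024 to a projected $100 billion by 2032 reflect the market's recognition that distributed models can unlock latent supply.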

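Protocols such as Bittensor, Render, Akash, and io.net show several approaches to the same fundamental challenge: how to match compute supply with demand efficiently through permissionless, blockchain-based coordination. Each network experiments with different tokenomics, verification mechanisms, and target applications, and together they form a broader ecosystem that continues to explore the design space of decentralized infrastructure.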

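The implications reach beyond crypto to the AI industry and to computing infrastructure more broadly. Democratized access to GPU resources lowers the barrier to AI innovation. Reduced dependence on the centralized cloud oligopoly introduces competitive dynamics that may improve pricing and accessibility. A new asset class is emerging as tokens come to represent ownership in productive infrastructure rather than purely speculative instruments.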

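Significant challenges remain. Technical reliability, verification mechanisms, economic sustainability, regulatory uncertainty, and competition from well-capitalized incumbents all pose risks. Not every protocol will survive, and many tokens may be valued far above their actual utility. But the core insight behind PinFi appears sound: a great deal of compute sits idle around the world, demand for AI infrastructure is enormous, and blockchain-based coordination can bring the mismatched supply and demand together.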

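As AI demand continues to surge, the infrastructure layer that supports the technology will become ever more critical. Whether that infrastructure stays concentrated among a handful of centralized providers or evolves toward distributed ownership coordinated by crypto-economic incentives may define the competitive landscape of AI development over the next decade.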

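The future of infrastructure finance may look less like traditional project finance and more like tokenized networks of globally distributed hardware, in which anyone with a GPU can become an infrastructure provider and access requires nothing more than paying the market price. This amounts to a fundamental rethinking of how computing resources are owned, operated, and monetized, one in which crypto protocols show utility beyond financial speculation by solving concrete problems in the physical world.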