The artificial intelligence industry faces a critical infrastructure bottleneck. Training large language models requires massive computational resources, edge devices are proliferating rapidly, and GPU scarcity has become the defining constraint of the AI era. Meanwhile, traditional cloud providers struggle to meet surging demand while maintaining their oligopolistic grip on access and pricing.

Over 50% of generative AI companies report GPU shortages as a major obstacle to scaling their operations. AI computing power is expected to increase by roughly 60 times by the end of 2025 compared to Q1 2023. This computational arms race has created an opening for crypto protocols to propose a decentralized alternative.

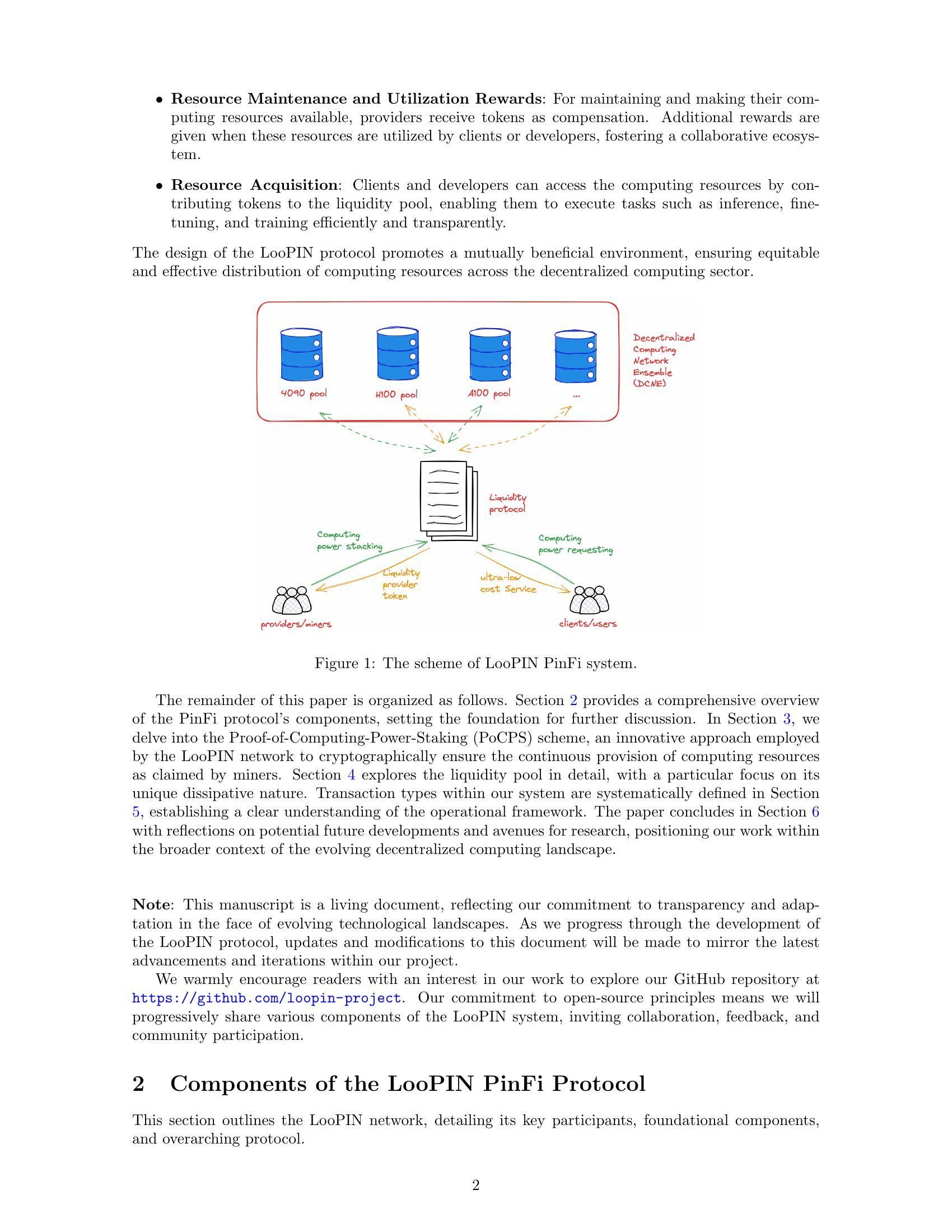

Enter Physical Infrastructure Finance, or PinFi. This emerging framework treats compute capacity as a tokenized asset that can be traded, staked and monetized through blockchain-based networks. Rather than relying on centralized data centers, PinFi protocols aggregate unused GPU power from independent operators, gaming rigs, mining farms and edge devices into distributed marketplaces accessible to AI developers worldwide.

Below we explore how real compute power is being transformed into crypto-economic infrastructure, covering the mechanics of tokenized compute networks, the economic models that incentivize participation, the architecture enabling verification and settlement, and the implications for both the crypto and AI industries.

Why PinFi Now? The Macro & Technical Drivers

The compute bottleneck confronting the AI industry stems from fundamental supply constraints. Nvidia allocated nearly 60% of its chip production to enterprise AI clients in Q1 2025, leaving many users scrambling for access. The global AI chip market reached $123.16 billion in 2024 and is projected to hit $311.58 billion by 2029, reflecting explosive demand that far outpaces manufacturing capacity.

GPU scarcity manifests in multiple ways. Traditional cloud providers maintain waiting lists for premium GPU instances. AWS charges $98.32 per hour for an 8-GPU H100 instance, pricing that places advanced AI capabilities out of reach for many developers and startups. Hardware prices remain elevated due to supply constraints, with HBM3 pricing rising 20-30% year-over-year.

The concentration of computing power within a handful of large cloud providers creates additional friction. Analysts expected over 50% of enterprise workloads to run in the cloud by 2025, yet access remains gated by contracts, geographic limitations and know-your-customer requirements. This centralization limits innovation and creates single points of failure for critical infrastructure.

Meanwhile, vast amounts of computing capacity sit idle. Gaming rigs remain unused during work hours. Crypto miners seek new revenue streams as mining economics shift. Data centers maintain excess capacity during off-peak periods. The decentralized compute market was valued at $9 billion in 2024, with projections reaching $100 billion by 2032, signaling market recognition that distributed models can capture this latent supply.
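The projections above imply a steep growth rate. A quick sanity check is to compute the compound annual growth rate (CAGR) they imply; the sketch below does this for the $9 billion (2024) to $100 billion (2032) figures. The helper `implied_cagr` is our own illustration, not part of any cited analysis.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years`."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Decentralized compute market: $9B (2024) -> $100B (2032), i.e. 8 years of growth.
rate = implied_cagr(9e9, 100e9, 2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # → Implied CAGR: 35.1%
```

Applied to the AI chip market figures cited earlier ($123.16 billion in 2024 to $311.58 billion in 2029), the same helper gives roughly 20% per year.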

The intersection of blockchain technology and physical infrastructure has matured through decentralized physical infrastructure networks, or DePIN. DePIN protocols use token incentives to coordinate the deployment and operation of real-world infrastructure. Messari identified DePIN's total addressable market at $2.2 trillion, potentially reaching $3.5 trillion by 2028.

PinFi represents the application of DePIN principles specifically to compute infrastructure. It treats computational resources as tokenizable assets that generate yield through productive use. This framework transforms computing from a service rented from centralized providers into a commodity traded in open, permissionless markets.

What Is PinFi & Tokenized Compute?

Physical Infrastructure Finance defines a model where physical computational assets are represented as digital tokens on blockchains, enabling decentralized ownership, operation and monetization. Unlike traditional decentralized finance protocols that deal with purely digital assets, PinFi creates bridges between off-chain physical resources and on-chain economic systems.

Academic research defines tokenization as "the process of converting rights, a unit of asset ownership, debt, or even a physical asset into a digital token on a blockchain." For compute resources, this means individual GPUs, server clusters or edge devices become represented by tokens that track their capacity, availability and usage.
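As a concrete illustration of what such a token might track, here is a minimal sketch of one tokenized GPU with fields for capacity, availability and usage. The `ComputeToken` class and its fields are hypothetical; real protocols define their own schemas and may keep part of this data off-chain.

```python
from dataclasses import dataclass, field


@dataclass
class ComputeToken:
    """Hypothetical on-chain record for one tokenized compute resource."""
    token_id: int
    owner: str                # address of the hardware operator
    gpu_model: str            # e.g. "RTX 4090" or "H100"
    vram_gb: int              # advertised memory capacity
    available: bool = True    # whether the resource currently accepts jobs
    usage_hours: float = 0.0  # cumulative hours of completed work
    jobs: list[str] = field(default_factory=list)  # IDs of completed jobs

    def record_job(self, job_id: str, hours: float) -> None:
        """Log a completed job and add its duration to lifetime usage."""
        if hours <= 0:
            raise ValueError("hours must be positive")
        self.jobs.append(job_id)
        self.usage_hours += hours


gpu = ComputeToken(token_id=1, owner="0xabc", gpu_model="RTX 4090", vram_gb=24)
gpu.record_job("job-42", hours=3.5)
print(gpu.usage_hours, gpu.jobs)  # → 3.5 ['job-42']
```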

PinFi differs fundamentally from standard infrastructure finance or typical DeFi protocols. Traditional infrastructure finance involves long-term debt or equity investments in large capital projects. DeFi protocols primarily facilitate trading, lending or yield generation on crypto-native assets. PinFi sits at the intersection, applying crypto-economic incentives to coordinate real-world computational resources while maintaining on-chain settlement and governance.

Several protocols exemplify the PinFi model. Bittensor operates as a decentralized AI network where participants contribute machine learning models and computational resources to specialized subnets focused on specific tasks. The TAO token incentivizes contributions based on the informational value provided to the collective intelligence of the network. With over 7,000 miners contributing compute, Bittensor creates markets for AI inference and model training.

Render Network aggregates idle GPUs globally for distributed GPU rendering tasks. Originally focused on 3D rendering for artists and content creators, Render has expanded into AI computing workloads. Its RENDER token (originally RNDR on Ethereum, before the network migrated to Solana) serves as payment for rendering jobs while rewarding GPU providers for contributed capacity.

Akash Network operates as a decentralized cloud marketplace that utilizes unused data center capacity. Through a reverse auction system, compute deployers specify their requirements and providers bid to fulfill requests. The AKT token facilitates governance, staking and settlements across the network. Akash saw a notable surge in quarterly active leases after expanding its focus to include GPUs alongside traditional CPU resources.

io.net has aggregated over 300,000 verified GPUs by integrating resources from independent data centers, crypto miners and other DePIN networks including Render and Filecoin. The platform focuses specifically on AI and machine learning workloads, offering developers the ability to deploy GPU clusters across 130 countries within minutes.

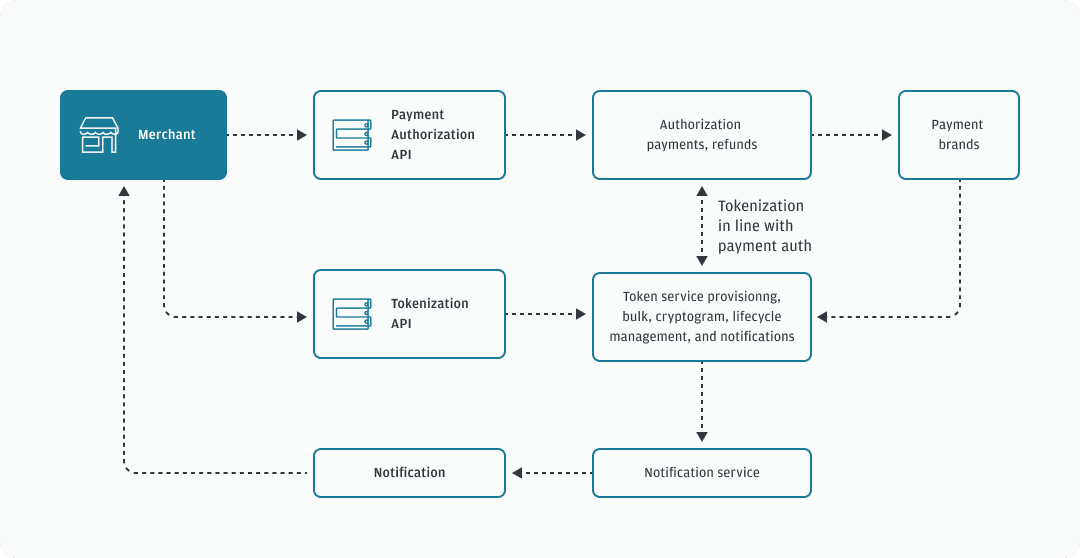

The mechanics of tokenized compute follow a consistent pattern across these protocols. Compute providers register their hardware with the network, undergoing verification processes to confirm capacity and capabilities. Smart contracts manage the relationship between supply and demand, routing compute jobs to available nodes based on requirements, pricing and geographic constraints. Token rewards incentivize both hardware provision and quality service delivery.

In this model, value is generated through actual usage rather than speculation. When an AI developer trains a model using distributed GPU resources, payment flows to the providers whose hardware performed the work. The computational power becomes a productive asset generating yield, much as proof-of-stake validators earn rewards for securing networks. To the extent that usage drives token demand, this ties token value to network utility rather than to speculation alone.
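The payment flow described above can be sketched as a proportional split. This minimal example assumes a job's payment is divided among providers in proportion to the GPU-hours each contributed, using integer token units and the largest-remainder method so that no unit is lost to rounding. The function name and the rounding rule are our assumptions, not any specific protocol's.

```python
def split_payment(total_units: int, gpu_hours: dict[str, float]) -> dict[str, int]:
    """Split a job payment (in the token's smallest unit) in proportion to GPU-hours.

    Integer units avoid floating-point dust; units left over after rounding down
    go to the providers with the largest fractional shares (largest-remainder method).
    """
    total_hours = sum(gpu_hours.values())
    if total_hours <= 0:
        raise ValueError("no work recorded")
    exact = {p: total_units * h / total_hours for p, h in gpu_hours.items()}
    payout = {p: int(x) for p, x in exact.items()}
    remainder = total_units - sum(payout.values())
    # Hand out leftover units to the largest fractional parts first.
    for p in sorted(exact, key=lambda p: exact[p] - payout[p], reverse=True)[:remainder]:
        payout[p] += 1
    return payout


print(split_payment(1_000, {"alice": 2.0, "bob": 1.0}))  # → {'alice': 667, 'bob': 333}
```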

Infrastructure Architecture: Nodes, Marketplaces, Settlement

The architecture enabling tokenized compute requires coordination across multiple layers. At its foundation sits a network of independent compute providers who deploy hardware, register with protocols and make capacity available for rent. These providers range from individuals with gaming PCs to professional data center operators to cryptocurrency mining operations seeking additional revenue streams.

Node provisioning begins when a compute provider connects hardware to the network. Protocols like io.net support diverse GPU types, from consumer-grade NVIDIA RTX 4090s to enterprise H100s and A100s. The provider installs client software that exposes capacity to the network's orchestration layer while maintaining security boundaries that prevent unauthorized access.

Verification mechanisms ensure that advertised capacity matches actual capabilities. Some protocols employ cryptographic proofs of compute, where nodes must demonstrate they performed specific calculations correctly. Bittensor uses its Yuma Consensus mechanism, where validators evaluate the quality of miners' machine learning outputs and assign scores that determine reward distribution. Nodes providing low-quality results or attempting to cheat receive reduced compensation or face slashing of staked tokens.
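Protocols verify capacity in different ways, and the details are protocol-specific. As a deliberately simple illustration (a benchmark comparison, not a cryptographic proof of compute), the sketch below admits a node only if a measured benchmark reaches a chosen fraction of its advertised throughput. The `Registry` class, the TFLOPS metric and the 10% tolerance are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    name: str
    advertised_tflops: float
    verified: bool = False


class Registry:
    """Hypothetical registry: nodes become eligible for jobs only after a benchmark check."""

    def __init__(self, tolerance: float = 0.1) -> None:
        self.tolerance = tolerance  # allowed shortfall relative to advertised capacity
        self.providers: dict[str, Provider] = {}

    def register(self, name: str, advertised_tflops: float) -> None:
        self.providers[name] = Provider(name, advertised_tflops)

    def verify(self, name: str, measured_tflops: float) -> bool:
        """Verify a node if its measured throughput is at least (1 - tolerance) of its claim."""
        provider = self.providers[name]
        provider.verified = measured_tflops >= provider.advertised_tflops * (1 - self.tolerance)
        return provider.verified

    def eligible(self) -> list[str]:
        return [p.name for p in self.providers.values() if p.verified]


reg = Registry()
reg.register("node-a", advertised_tflops=80.0)
reg.register("node-b", advertised_tflops=80.0)
reg.verify("node-a", measured_tflops=78.0)  # within 10% of the claim
reg.verify("node-b", measured_tflops=40.0)  # far below the claim
print(reg.eligible())  # → ['node-a']
```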

Latency benchmarking helps match workloads to appropriate hardware. AI inference requires different performance characteristics than model training or 3D rendering. Geographic location affects latency for edge computing applications where processing must occur near data sources. The edge computing market reached $23.65 billion in 2024 and is expected to hit $327.79 billion by 2033, driven by demand for localized processing.

The marketplace layer connects compute demand with supply. When developers need GPU resources, they specify requirements including processing power, memory, duration and maximum price. Akash employs a reverse auction model where deployers set terms and providers bid to win contracts. Render uses dynamic pricing algorithms that adjust rates based on network utilization and market conditions.
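A reverse auction like the one described for Akash can be sketched in a few lines. This version assumes the deployer simply accepts the cheapest bid that meets its hardware requirements and price ceiling; on Akash the deployer reviews incoming bids and chooses one to create a lease, so automatic cheapest-wins selection is our simplification. All names, units and prices are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Order:
    gpus: int
    vram_gb: int               # per-GPU memory required
    max_price_per_hour: float  # deployer's price ceiling


@dataclass(frozen=True)
class Bid:
    provider: str
    gpus: int
    vram_gb: int
    price_per_hour: float


def run_reverse_auction(order: Order, bids: list[Bid]) -> Optional[Bid]:
    """Return the cheapest bid that satisfies the order, or None if none qualifies."""
    qualifying = [
        b for b in bids
        if b.gpus >= order.gpus
        and b.vram_gb >= order.vram_gb
        and b.price_per_hour <= order.max_price_per_hour
    ]
    # Break price ties by provider name so the result is deterministic.
    return min(qualifying, key=lambda b: (b.price_per_hour, b.provider), default=None)


order = Order(gpus=8, vram_gb=80, max_price_per_hour=40.0)
bids = [
    Bid("dc-east", 8, 80, 32.0),
    Bid("miner-farm", 8, 80, 27.5),
    Bid("gamer-rig", 1, 24, 0.6),    # too small for this order
    Bid("premium-dc", 8, 80, 45.0),  # above the deployer's ceiling
]
winner = run_reverse_auction(order, bids)
print(winner.provider if winner else "no match")  # → miner-farm
```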

Job routing algorithms optimize placement of compute tasks across available nodes. Factors considered include hardware specifications, current utilization, geographic proximity, historical performance and price. io.net's orchestration layer handles containerized workflows and supports Ray-native orchestration for distributed machine learning workloads.
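To show how several routing factors can be combined, here is a minimal weighted-scoring sketch: a hard requirement (GPU memory) filters nodes first, then utilization, latency, past reliability and price are normalized and blended into one score. This is not io.net's actual algorithm; the `Node` fields and the weights are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    node_id: str
    vram_gb: int
    utilization: float     # 0.0 (idle) to 1.0 (fully busy)
    latency_ms: float      # measured round-trip time to the requester
    success_rate: float    # fraction of past jobs completed correctly
    price_per_hour: float


# Hypothetical weights; a real orchestrator would tune these per workload.
WEIGHTS = {"idle": 0.3, "latency": 0.2, "reliability": 0.3, "price": 0.2}


def score(node: Node, max_latency_ms: float, max_price: float) -> float:
    """Higher is better; each factor is normalized to the range [0, 1]."""
    return (
        WEIGHTS["idle"] * (1 - node.utilization)
        + WEIGHTS["latency"] * (1 - min(node.latency_ms / max_latency_ms, 1))
        + WEIGHTS["reliability"] * node.success_rate
        + WEIGHTS["price"] * (1 - min(node.price_per_hour / max_price, 1))
    )


def route(nodes: list[Node], min_vram_gb: int, max_latency_ms: float, max_price: float) -> str:
    """Pick the best-scoring node that meets the job's memory requirement."""
    candidates = [n for n in nodes if n.vram_gb >= min_vram_gb]
    if not candidates:
        raise LookupError("no node satisfies the hardware requirement")
    return max(candidates, key=lambda n: score(n, max_latency_ms, max_price)).node_id


nodes = [
    Node("a100-eu", 80, utilization=0.9, latency_ms=40, success_rate=0.99, price_per_hour=2.0),
    Node("h100-us", 80, utilization=0.2, latency_ms=90, success_rate=0.97, price_per_hour=3.0),
    Node("4090-home", 24, utilization=0.0, latency_ms=20, success_rate=0.90, price_per_hour=0.5),
]
print(route(nodes, min_vram_gb=40, max_latency_ms=200, max_price=4.0))  # → h100-us
```

Here `h100-us` wins despite higher latency because it is mostly idle, while the busy `a100-eu` is penalized; lowering `min_vram_gb` to 16 lets the idle, inexpensive `4090-home` win instead.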

Settlement occurs on-chain through smart contracts that escrow payments and release funds upon verified completion of work. Because funds are locked before work begins, the provider does not have to trust the buyer to pay and the buyer pays only for verified work, which sharply reduces counterparty risk while enabling microtransactions for short-duration compute jobs. Protocols built on high-throughput blockchains like Solana can handle the transaction volume generated by thousands of simultaneous inference requests.
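The escrow logic is easiest to see as a small state machine. The sketch below models in Python what a settlement contract would enforce on-chain; `Escrow`, `JobState` and the single `work_verified` flag standing in for a verification result are all hypothetical.

```python
from enum import Enum, auto


class JobState(Enum):
    FUNDED = auto()     # buyer's payment is locked in escrow
    COMPLETED = auto()  # work verified, provider paid
    REFUNDED = auto()   # verification failed, buyer refunded


class Escrow:
    """Hypothetical escrow modeling what a settlement contract would enforce on-chain."""

    def __init__(self, buyer: str, provider: str, amount: int) -> None:
        self.buyer, self.provider, self.amount = buyer, provider, amount
        self.state = JobState.FUNDED
        self.payouts = {buyer: 0, provider: 0}

    def settle(self, work_verified: bool) -> None:
        """Release funds exactly once: to the provider if verified, else back to the buyer."""
        if self.state is not JobState.FUNDED:
            raise RuntimeError("escrow already settled")
        if work_verified:
            self.payouts[self.provider] += self.amount
            self.state = JobState.COMPLETED
        else:
            self.payouts[self.buyer] += self.amount
            self.state = JobState.REFUNDED


job = Escrow(buyer="dev", provider="node-7", amount=500)
job.settle(work_verified=True)
print(job.state.name, job.payouts)  # → COMPLETED {'dev': 0, 'node-7': 500}
```

The guard in `settle` is what prevents double payment: once the escrow leaves the `FUNDED` state, any further settlement attempt fails.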

Staking mechanisms align incentives between participants. Compute providers often stake tokens to demonstrate commitment and expose collateral that can be slashed for poor performance. Validators in Bittensor stake TAO tokens to gain influence in scoring miners and earn portions of block rewards. Token holders can delegate stake to validators they trust, similar to proof-of-stake consensus mechanisms.
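Delegation rewards can be illustrated with a simple pro rata split. This sketch assumes the validator keeps a fixed commission and shares the remainder across all stake behind it, its own included; the commission rate and payout rule are assumptions for illustration, not Bittensor's exact formula.

```python
def distribute_validator_reward(reward: float, own_stake: float,
                                delegations: dict[str, float],
                                commission: float) -> dict[str, float]:
    """Split a validator's reward between the validator and its delegators.

    The validator first keeps `commission` (a fraction) of the reward; the rest is
    shared pro rata across all stake behind the validator, its own included.
    """
    total_stake = own_stake + sum(delegations.values())
    fee = reward * commission
    pool = reward - fee
    shares = {d: pool * s / total_stake for d, s in delegations.items()}
    shares["validator"] = fee + pool * own_stake / total_stake
    return shares


print(distribute_validator_reward(100.0, own_stake=1_000,
                                  delegations={"alice": 3_000, "bob": 1_000},
                                  commission=0.10))
# → {'alice': 54.0, 'bob': 18.0, 'validator': 28.0}
```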

Governance allows token holders to vote on protocol parameters including reward distribution, fee structures and network upgrades. Decentralized governance is designed so that no central authority can unilaterally change rules or restrict access, preserving the permissionless nature that differentiates these networks from traditional cloud providers.

The architecture contrasts sharply with centralized cloud computing. Major providers own their infrastructure, set prices in a concentrated market with few competitors, require accounts and compliance checks, and control who gets access and what workloads can run. PinFi protocols distribute ownership across thousands of independent operators, enable transparent market-based pricing, operate permissionlessly and resist censorship through decentralization.

Tokenomics & Incentive Models

Token economics provide the incentive structure that coordinates distributed compute networks. Native tokens serve multiple functions including payment for services, rewards for resource provision, governance rights and staking requirements for network participation.

Issuance mechanisms determine how tokens enter circulation. Bittensor follows Bitcoin's model with a capped supply of 21 million TAO tokens and periodic halvings that reduce issuance over time. Currently 7,200 TAO are minted daily, split among miners who contribute computational resources, validators who ensure network quality and subnet owners. This creates scarcity similar to Bitcoin's while directing inflation toward productive infrastructure.
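A capped supply with halvings converges because each epoch issues half of what remains. The idealized model below starts from zero supply at 7,200 TAO per day and halves emissions each time half of the remaining cap has been issued. It ignores protocol-specific details (for example, tokens that are recycled or burned), so actual halving dates can drift from these numbers; the function name and defaults are our own.

```python
def halving_schedule(cap: float = 21_000_000, daily_emission: float = 7_200,
                     epochs: int = 4) -> list[tuple[float, float, float]]:
    """Idealized Bitcoin-style schedule: each epoch issues half of the remaining supply.

    Returns (daily_emission, days_in_epoch, supply_at_epoch_end) for each epoch.
    """
    schedule = []
    supply = 0.0
    for _ in range(epochs):
        epoch_issuance = (cap - supply) / 2  # half of what is left to mint
        days = epoch_issuance / daily_emission
        supply += epoch_issuance
        schedule.append((daily_emission, days, supply))
        daily_emission /= 2                  # the halving
    return schedule


for rate, days, supply in halving_schedule():
    print(f"{rate:>7,.0f} TAO/day for {days:,.0f} days -> {supply:,.0f} TAO issued")
```

Each epoch in this model lasts about 1,458 days, roughly four years, and cumulative issuance approaches but never reaches 21 million.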

Other protocols issue tokens based on network usage. When compute jobs execute, newly minted tokens flow to providers proportional to the resources they supplied. This direct linkage between value creation and token issuance ensures that inflation rewards actual productivity rather than passive token holding.

Staking creates skin in the game for network participants. Compute providers stake tokens to register nodes and demonstrate commitment. Poor performance or attempted fraud results in slashing, where staked tokens are destroyed or redistributed to affected parties. This economic penalty incentivizes reliable service delivery and honest behavior.
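Slashing can be sketched as a ledger operation. In this minimal example a slashed fraction of a node's stake is split between compensation for an affected party and a burn; the 50/50 split, the `StakeLedger` class and its method names are hypothetical, since each protocol sets its own penalty rules.

```python
from typing import Optional


class StakeLedger:
    """Hypothetical stake ledger: slashed stake is partly paid to the victim, the rest burned."""

    def __init__(self) -> None:
        self.stakes: dict[str, float] = {}
        self.compensation: dict[str, float] = {}
        self.burned = 0.0

    def stake(self, node: str, amount: float) -> None:
        self.stakes[node] = self.stakes.get(node, 0.0) + amount

    def slash(self, node: str, fraction: float,
              victim: Optional[str] = None, victim_share: float = 0.5) -> float:
        """Remove `fraction` of a node's stake and return the penalty amount."""
        if not 0 < fraction <= 1:
            raise ValueError("fraction must be in (0, 1]")
        penalty = self.stakes[node] * fraction
        self.stakes[node] -= penalty
        paid = penalty * victim_share if victim is not None else 0.0
        if victim is not None:
            self.compensation[victim] = self.compensation.get(victim, 0.0) + paid
        self.burned += penalty - paid  # whatever is not redistributed is destroyed
        return penalty


ledger = StakeLedger()
ledger.stake("node-9", 1_000.0)
ledger.slash("node-9", fraction=0.1, victim="dev")  # e.g. the node returned bad results
print(ledger.stakes, ledger.compensation, ledger.burned)
# → {'node-9': 900.0} {'dev': 50.0} 50.0
```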

Validators stake larger amounts to gain influence in quality assessment and governance decisions. In Bittensor's model, validators evaluate miners' outputs and submit weight matrices indicating which nodes provided valuable contributions. The Yuma Consensus aggregates these assessments weighted by validator stake to determine final reward distribution.
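The stake-weighted aggregation can be made concrete with a heavily simplified sketch of the consensus-and-clipping idea associated with Yuma Consensus. For each miner it finds the highest weight supported by at least half of the stake, clips any validator's weight above that value, and then sums stake-weighted scores. The real mechanism adds further machinery (such as validator bonds and smoothing over time) that is omitted here, and the function names are ours.

```python
def consensus_weight(weights: list[float], stakes: list[float], kappa: float = 0.5) -> float:
    """Highest weight that validators holding at least `kappa` of total stake meet or exceed."""
    total = sum(stakes)
    cumulative = 0.0
    for w, s in sorted(zip(weights, stakes), reverse=True):
        cumulative += s
        if cumulative >= kappa * total:
            return w
    return 0.0


def miner_incentives(W: list[list[float]], stakes: list[float]) -> list[float]:
    """W[v][m] is validator v's weight for miner m; returns normalized miner rewards."""
    total_stake = sum(stakes)
    ranks = []
    for m in range(len(W[0])):
        column = [row[m] for row in W]
        c = consensus_weight(column, stakes)
        # Clip each validator's weight at the consensus value so a minority
        # of stake cannot inflate a miner's score on its own.
        clipped = [min(w, c) for w in column]
        ranks.append(sum(s * w for s, w in zip(stakes, clipped)) / total_stake)
    norm = sum(ranks)
    return [r / norm for r in ranks] if norm > 0 else ranks


stakes = [50.0, 30.0, 20.0]
W = [
    [0.7, 0.3],  # validator 0
    [0.6, 0.4],  # validator 1
    [0.0, 1.0],  # validator 2 (20% of stake) pushes miner 1 hard
]
print([round(x, 3) for x in miner_incentives(W, stakes)])  # → [0.602, 0.398]
```

Without clipping, validator 2 would lift miner 1 to a 47% share on only 20% of the stake; with clipping it receives about 40%.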

The supply-demand dynamics for compute tokens operate on two levels. On the supply side, more nodes joining the network increase available computational capacity. Token rewards must be sufficient to compensate for hardware costs, electricity and opportunity costs versus alternative uses of the equipment. As token prices rise, provisioning compute becomes more profitable, attracting additional supply.
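The supply-side condition can be written as a break-even calculation: providing compute pays only when daily token rewards, valued at the market price, cover electricity, hardware depreciation and opportunity cost. The sketch below solves for the break-even token price; every input value is hypothetical, and straight-line depreciation is a simplifying assumption.

```python
def breakeven_token_price(tokens_per_day: float, power_kw: float, usd_per_kwh: float,
                          hardware_cost_usd: float, amortization_days: int,
                          opportunity_cost_per_day: float = 0.0) -> float:
    """Token price (USD) at which daily token rewards exactly cover daily costs."""
    daily_cost = (
        power_kw * 24 * usd_per_kwh              # electricity
        + hardware_cost_usd / amortization_days  # straight-line hardware depreciation
        + opportunity_cost_per_day               # income forgone from other uses of the GPU
    )
    return daily_cost / tokens_per_day


# Hypothetical inputs: 0.5 kW GPU, $0.10/kWh, $2,190 card written off over two years,
# $0.80/day forgone elsewhere, earning 4 tokens per day.
price = breakeven_token_price(4, 0.5, 0.10, 2_190, 730, opportunity_cost_per_day=0.80)
print(f"Break-even token price: ${price:.2f}")  # → Break-even token price: $1.25
```

Above this price, running the GPU on the network is profitable and should attract supply; below it, operators have a reason to switch the hardware to other uses.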

On the demand side, token price reflects the value users place on network access. As AI applications proliferate and compute scarcity intensifies, willingness to pay for decentralized resources increases. The AI hardware market is expected to grow from $66.8 billion in 2025 to $296.3 billion by 2034, creating sustained demand for alternative compute sources.

Token value appreciation benefits supply-side participants. Hardware providers earn more for the same computational output. Early node operators gain from appreciation of accumulated rewards. Token holders who stake or provide liquidity capture fees from network activity. Developers are the exception: if compute is priced in native tokens, appreciation raises their costs, so their benefit lies instead in access to a decentralized alternative to expensive centralized providers.

Risk models address potential failure modes. Node downtime reduces earnings as jobs route to available alternatives. Geographic concentration creates latency issues for edge applications requiring local processing. Network effects favor larger protocols with more diverse hardware and geographic distribution.

Token inflation must balance attracting new supply with maintaining value for existing holders. Research on decentralized infrastructure protocols notes that sustainable tokenomics requires demand growth to outpace supply increases. Some protocols implement burning mechanisms, such as Render's burn-and-mint equilibrium, where tokens used for payments are permanently removed from circulation, creating deflationary pressure that can offset inflationary issuance.
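The interaction between issuance and burning can be illustrated with a toy supply model loosely in the spirit of burn-and-mint designs: a fixed number of tokens is minted each epoch, and users burn tokens worth their compute spend. Holding the token price constant is a deliberate simplification, since in a real market the price would respond to these flows. All figures are hypothetical.

```python
def simulate_supply(initial_supply: float, mint_per_epoch: float,
                    usage_usd: list[float], token_price: float) -> list[float]:
    """Circulating supply per epoch when compute fees are burned and a fixed amount is minted."""
    supply = initial_supply
    history = []
    for spend in usage_usd:
        burned = spend / token_price  # users burn tokens worth `spend` USD to pay for compute
        supply += mint_per_epoch - burned
        history.append(supply)
    return history


# Hypothetical: 10,000 tokens minted per epoch, token held at $2, usage growing each epoch.
print(simulate_supply(1_000_000, 10_000, [10_000, 20_000, 40_000], token_price=2.0))
# → [1005000.0, 1005000.0, 995000.0]
```

Supply grows while usage is low, holds flat when burns match minting, and shrinks once usage-driven burns exceed issuance.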

Fee structures vary across networks. Some charge users directly in native tokens. Others accept stablecoins or wrapped versions of major cryptocurrencies, with protocol tokens primarily serving governance and staking functions. Hybrid models use tokens for network access while settling compute payments in stable assets to reduce volatility risk.

The design space for incentive models continues evolving as protocols experiment with different approaches to balancing stakeholder interests and sustaining long-term growth.

AI, Edge, and Real-World Infrastructure

Tokenized compute networks enable applications that leverage distributed hardware for AI workloads, edge processing and specialized infrastructure needs. The diversity of use cases demonstrates how decentralized models can address bottlenecks across the computational stack.

Distributed AI model training represents a primary use case. Training large language models or computer vision systems requires massive parallel computation across multiple GPUs. Traditional approaches concentrate this training in centralized data centers owned by major cloud providers. Decentralized networks allow training to occur across geographically distributed nodes, each contributing computational work coordinated through blockchain-based orchestration.

Bittensor's subnet architecture enables specialized AI markets focused on specific tasks like text generation, image synthesis or data scraping. Miners compete to provide high-quality outputs for their chosen domains, with validators assessing performance and distributing rewards accordingly. This creates competitive markets in which the best models and most efficient implementations are meant to emerge through economic selection.

Edge computing workloads benefit particularly from decentralized infrastructure. The global edge computing market was valued at $23.65 billion in 2024, driven by applications requiring low latency and local processing. IoT devices generating continuous sensor data need immediate analysis without round-trip delays to distant data centers. Autonomous vehicles require split-second decision making that cannot tolerate network latency.

Decentralized compute networks can place processing capacity physically close to data sources. A factory deploying industrial IoT sensors can rent edge nodes within the same city or region rather than relying on centralized clouds hundreds of miles away. Industrial IoT applications accounted for the largest market share in edge computing in 2024, reflecting the critical nature of localized processing for manufacturing and logistics.
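Placing work near the data source starts with finding nearby nodes. This sketch filters and ranks hypothetical edge nodes by great-circle (haversine) distance from a site. Physical distance is only a rough proxy for network latency, which a real scheduler would measure directly. Node names are hypothetical; coordinates are approximate city locations.

```python
import math


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearest_nodes(site: tuple[float, float], nodes: dict[str, tuple[float, float]],
                  max_km: float) -> list[str]:
    """Node IDs within max_km of the site, closest first."""
    dist = {n: haversine_km(*site, *loc) for n, loc in nodes.items()}
    return sorted((n for n, d in dist.items() if d <= max_km), key=dist.get)


factory = (48.14, 11.58)  # a factory in Munich
nodes = {"munich-1": (48.13, 11.57), "berlin-1": (52.52, 13.40), "augsburg-1": (48.37, 10.90)}
print(nearest_nodes(factory, nodes, max_km=100))  # → ['munich-1', 'augsburg-1']
```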

Content rendering and creative workflows consume significant GPU resources. Artists rendering 3D scenes, animators producing films, and game developers compiling assets all require intensive parallel processing. Render Network specializes in distributed GPU rendering, connecting creators with idle GPU capacity worldwide. This marketplace model reduces rendering costs while providing revenue streams for GPU owners during off-peak periods.

Scientific computing and research applications face budget constraints when accessing expensive cloud resources. Academic institutions, independent researchers and smaller organizations can leverage decentralized networks to run simulations, analyze datasets or train specialized models. The permissionless nature means researchers in any geography can access computational resources without institutional cloud accounts or credit checks.

Gaming and metaverse platforms require rendering and physics calculations for immersive experiences. As virtual worlds grow in complexity, the computational demands for maintaining persistent environments and supporting thousands of simultaneous users increase. Edge-distributed compute nodes can provide local processing for regional player populations, reducing latency while distributing infrastructure costs across token-incentivized providers.

AI inference at scale requires continuous GPU access for serving predictions from trained models. A chatbot serving millions of queries, an image generation service processing user prompts, or a recommendation engine analyzing user behavior all need always-available compute. Decentralized networks provide redundancy and geographic distribution that enhances reliability compared to single-provider dependencies.

Geographic zones underserved by major cloud providers present opportunities for PinFi protocols. Regions with limited data center presence face higher latency and costs when accessing centralized infrastructure. Local hardware providers in these areas can offer compute capacity tailored to regional demand, earning token rewards while improving local access to AI capabilities.

Data sovereignty requirements increasingly mandate that certain workloads process data within specific jurisdictions. Rules such as the GDPR's restrictions on transferring personal data outside the EU, along with national data-localization laws, push sensitive workloads toward local processing and encourage deployment of edge infrastructure that complies with residency rules. Decentralized networks naturally support jurisdiction-specific node deployment while maintaining global coordination through blockchain-based settlement.

Why It Matters: Implications for Crypto & Infrastructure

The emergence of PinFi represents crypto's expansion beyond purely financial applications into coordination of real-world infrastructure. This shift carries implications for both the crypto ecosystem and broader computational industries.

Crypto protocols demonstrate utility beyond speculation when they solve tangible infrastructure problems. DePIN and PinFi create economic systems that coordinate physical resources, showing that blockchain-based incentives can bootstrap real-world networks. The DePIN sector's total addressable market is currently around $2.2 trillion and could reach $3.5 trillion by 2028, roughly three times the total crypto market capitalization at the time of Messari's estimate.

Democratization of compute access addresses a fundamental asymmetry in AI development. Currently, advanced AI capabilities remain largely concentrated among well-funded technology companies that can afford massive GPU clusters. Startups, researchers and developers in resource-constrained environments face barriers to participating in AI innovation. Decentralized compute networks lower these barriers by providing permissionless access to distributed hardware at market-driven prices.

The creation of new asset classes expands the crypto investment landscape. Compute-capacity tokens are tied to productive infrastructure that generates revenue through real-world usage. This differs from purely speculative assets or governance tokens without clear value capture mechanisms. Token holders gain economic exposure to what amounts to a decentralized cloud provider, with value tied to demand for computational services, although such tokens generally do not confer equity or a legal claim on revenue.

Traditional infrastructure monopolies face potential disruption. Centralized cloud providers including AWS, Microsoft Azure and Google Cloud maintain oligopolistic control over compute markets, setting prices without direct competition. Decentralized alternatives introduce market dynamics where thousands of independent providers compete, potentially driving down costs while improving accessibility.

The AI industry benefits from reduced dependence on centralized infrastructure. Currently, AI development clusters around major cloud providers, creating single points of failure and concentration risk. Over 50% of generative AI companies report GPU shortages as major obstacles. Distributed networks provide alternative capacity that can absorb demand overflow and offer redundancy against supply chain disruptions.

Energy efficiency improvements may emerge from better capacity utilization. Gaming rigs sitting idle consume standby power without productive output. Mining operations with excess capacity seek additional revenue streams. Distributed networks put idle GPUs to productive use, improving overall resource efficiency in the computational ecosystem.

Censorship resistance becomes relevant for AI applications. Centralized cloud providers can deny service to specific users, applications or entire geographic regions. Decentralized networks operate permissionlessly, enabling AI development and deployment without requiring approval from gatekeepers. This matters particularly for controversial applications or users in restrictive jurisdictions.

Data privacy architectures improve through local processing. Edge computing keeps sensitive data near its source rather than transmitting to distant data centers. Decentralized networks can implement privacy-preserving techniques like federated learning, where models train on distributed data without centralizing raw information.

Market efficiency increases through transparent price discovery. Traditional cloud pricing remains opaque, with complex fee structures and negotiated enterprise contracts. Decentralized marketplaces establish clear spot prices for compute resources, enabling developers to optimize costs and providers to maximize revenue through competitive dynamics.

Long-term relevance stems from sustained demand drivers. AI workloads will continue growing as applications proliferate. The AI hardware market is expected to grow from $66.8 billion in 2025 to $296.3 billion by 2034. Compute will remain a fundamental constraint, ensuring ongoing demand for alternative infrastructure models.

Network effects favor early protocols that achieve critical mass. As more hardware providers join, diversity of available resources increases. Geographic distribution improves, reducing latency for edge applications. Larger networks attract more developers, creating virtuous cycles of growth. First-movers in specific domains may establish lasting advantages.

Challenges & Risks

Despite promising applications, tokenized compute networks face significant technical, economic and regulatory challenges that could constrain growth or limit adoption.

Technical reliability remains a primary concern. Centralized cloud providers offer service level agreements guaranteeing uptime and performance. Distributed networks coordinate hardware from independent operators with varying levels of professionalism and infrastructure quality. Node failures, network outages or maintenance windows create availability gaps that must be managed through redundancy and routing algorithms.

Verification of actual work performed presents ongoing challenges. Ensuring that nodes honestly execute computations rather than returning false results requires sophisticated proof systems. Cryptographic proofs of compute add overhead but remain necessary to prevent fraud. Imperfect verification mechanisms enable potential attacks where malicious nodes claim rewards without providing promised services.

Latency and bandwidth limitations affect distributed workloads. Running computations across geographically dispersed locations can cause delays compared to co-located hardware in single data centers. Network bandwidth between nodes constrains the types of workloads suitable for distributed processing. Tightly coupled parallel computations requiring frequent inter-node communication face performance degradation.

Quality of service variability creates uncertainty for production applications. Unlike managed cloud environments with predictable performance, heterogeneous hardware pools produce inconsistent results. A training run might execute on enterprise-grade H100s or consumer RTX cards depending on availability. Application developers must design for this variability or implement filtering that restricts jobs to specific hardware tiers.

Economic sustainability requires balancing supply growth with demand expansion. Rapid increases in available compute capacity without corresponding demand growth would depress token prices and reduce provider profitability. Protocols must therefore manage token issuance carefully so that inflation does not outpace utility growth.

Token value compression poses risks for long-term participants. As new providers join networks seeking rewards, increased competition drives down earnings per node. Early participants benefiting from higher initial rewards may see returns diminish over time. If token appreciation fails to offset this dilution, provider churn increases and network stability suffers.
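The dilution effect is simple arithmetic when a fixed emission is shared among nodes. The sketch assumes equal shares and a fixed daily emission (both simplifications, with hypothetical figures) and also computes the token price needed to keep per-node income unchanged as the network grows.

```python
def per_node_income(daily_emission: float, nodes: int, token_price: float) -> float:
    """USD earned per node per day when a fixed emission is split equally across nodes."""
    return daily_emission * token_price / nodes


def price_to_hold_income(target_usd: float, daily_emission: float, nodes: int) -> float:
    """Token price needed to keep per-node income at `target_usd` as the network grows."""
    return target_usd * nodes / daily_emission


for n in (1_000, 2_000, 4_000):
    print(n, per_node_income(10_000, n, token_price=1.0), price_to_hold_income(10.0, 10_000, n))
```

With 10,000 tokens emitted per day at $1, growing from 1,000 to 4,000 nodes cuts per-node income from $10.00 to $2.50 unless the token price rises fourfold to $4.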

Market volatility introduces financial risk for participants. Providers earn rewards in native tokens whose value fluctuates. A hardware operator may commit capital to GPU purchases expecting token prices to remain stable, only to face losses if prices decline. Hedging mechanisms and stablecoin payment options can mitigate volatility but add complexity.

Regulatory uncertainty around token classifications creates compliance challenges. Securities regulators in various jurisdictions evaluate whether compute tokens constitute securities subject to registration requirements. Ambiguous legal status restricts institutional participation and creates liability risks for protocol developers. Infrastructure tokenization faces regulatory uncertainties that have limited its adoption compared to traditional finance structures.

Data protection regulations impose requirements that distributed networks must navigate. Processing personal data of individuals in the EU requires GDPR compliance, including data minimization and the right to erasure. Healthcare applications must satisfy HIPAA requirements. Financial applications face anti-money laundering obligations. Decentralized networks complicate compliance when data moves across multiple jurisdictions and independent operators.

Hardware contributions may trigger regulatory scrutiny depending on how arrangements are structured. Jurisdictions might classify certain provider relationships as securities offerings or regulated financial products. The line between infrastructure provision and investment contracts remains unclear in many legal frameworks.

Competition from hyperscale cloud providers continues intensifying. Major providers invest billions in new data center capacity and custom AI accelerators. AWS, Microsoft, and Google spent 36% more on capital expenditures in 2024, largely for AI infrastructure. These well-capitalized incumbents can undercut pricing or bundle compute with other services to maintain market share.

Network fragmentation could limit composability. Multiple competing protocols create siloed ecosystems where compute resources cannot easily transfer between networks. Lack of standardization in APIs, verification mechanisms or token standards reduces efficiency and increases switching costs for developers.

Early adopter risk affects protocols without proven track records. New networks face chicken-and-egg problems attracting both hardware providers and compute buyers simultaneously. Protocols may fail to achieve critical mass needed for sustainable operations. Token investors face total loss risk if networks collapse or fail to gain adoption.

Security vulnerabilities in smart contracts or coordination layers could enable theft of funds or network disruption. Decentralized networks face security challenges requiring careful smart contract auditing and bug bounty programs. Exploits that drain treasuries or enable double-payment attacks damage trust and network value.

The Road Ahead & What to Watch

Tracking key metrics and developments provides insight into the maturation and growth trajectory of tokenized compute networks.

Network growth indicators include the number of active compute nodes, geographic distribution, hardware diversity and total available capacity measured in compute power or GPU equivalents. Expansion in these metrics signals increasing supply and network resilience. io.net accumulated over 300,000 verified GPUs by integrating multiple sources, demonstrating rapid scaling potential when protocols effectively coordinate disparate resources.

Usage metrics reveal actual demand for decentralized compute. Active compute jobs, total processing hours delivered, and the mix of workload types show whether networks serve real applications beyond speculation. Akash saw a notable surge in quarterly active leases after expanding GPU support, indicating market appetite for decentralized alternatives to traditional clouds.

Token market capitalization and fully diluted valuations provide market assessments of protocol value. Comparing valuations to actual revenue or compute throughput reveals whether tokens price in future growth expectations or reflect current utility. Bittensor's TAO token reached $750 during peak hype in March 2024, illustrating speculative interest alongside genuine adoption.

Partnerships with AI companies and enterprise adopters signal mainstream validation. When established AI labs, model developers or production applications deploy workloads on decentralized networks, they demonstrate that distributed infrastructure meets real-world requirements. Toyota and NTT announced a $3.3 billion investment in a Mobility AI Platform built on edge computing, showing corporate commitment to distributed architectures, even if not token-based ones.

Protocol upgrades and feature additions indicate continued development momentum. Integration of new GPU types, improved orchestration systems, enhanced verification mechanisms or governance improvements show active iteration toward better infrastructure. Bittensor's Dynamic TAO upgrade in 2025 shifted more rewards to high-performing subnets, demonstrating adaptive tokenomics.

Regulatory developments shape the operating environment. Favorable classification of infrastructure tokens or clear guidance on compliance requirements would reduce legal uncertainty and enable broader institutional participation. Conversely, restrictive regulations could limit growth in specific jurisdictions.

Competitive dynamics between protocols determine market structure. The compute infrastructure space may consolidate around a few dominant networks achieving strong network effects, or remain fragmented with specialized protocols serving different niches. Interoperability standards could enable cross-network coordination, improving overall ecosystem efficiency.

Hybrid models combining centralized and decentralized elements may emerge. Enterprises might use traditional clouds for baseline capacity while bursting to decentralized networks during peak demand. This approach provides the predictability of managed services while capturing cost savings from distributed alternatives during overflow periods.
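The bursting pattern can be sketched as a simple placement rule: fill reserved cloud capacity first and send only the overflow to a decentralized network. `place_demand` and `Placement` are hypothetical names, and a production scheduler would also weigh price, latency, data locality and reliability before choosing where each job runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    cloud_gpus: int
    decentralized_gpus: int


def place_demand(demand_gpus: int, cloud_baseline_gpus: int) -> Placement:
    """Fill reserved cloud capacity first, then burst overflow to a decentralized network."""
    if demand_gpus < 0 or cloud_baseline_gpus < 0:
        raise ValueError("GPU counts must be non-negative")
    on_cloud = min(demand_gpus, cloud_baseline_gpus)
    return Placement(cloud_gpus=on_cloud, decentralized_gpus=demand_gpus - on_cloud)


# Hypothetical: 100 GPUs reserved in the cloud.
print(place_demand(demand_gpus=60, cloud_baseline_gpus=100))   # quiet period
print(place_demand(demand_gpus=120, cloud_baseline_gpus=100))  # demand spike
# Placement(cloud_gpus=60, decentralized_gpus=0)
# Placement(cloud_gpus=100, decentralized_gpus=20)
```

Keeping the baseline on reserved capacity preserves predictability, while only the variable overflow is exposed to the price and reliability characteristics of the decentralized network.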

Consortium networks could form where industry participants jointly operate decentralized infrastructure. AI companies, cloud providers, hardware manufacturers or academic institutions might establish shared networks that reduce individual capital requirements while maintaining decentralized governance. This model could accelerate adoption among risk-averse organizations.

Vertical specialization seems likely as protocols optimize for specific use cases. Some networks may focus exclusively on AI training, others on inference, some on edge computing, others on rendering or scientific computation. Specialized infrastructure can serve particular workload requirements better than general-purpose alternatives.

Integration with existing AI tooling and frameworks will prove critical. Seamless compatibility with popular machine learning libraries, orchestration systems and deployment pipelines reduces friction for developers. io.net supports Ray-native orchestration, recognizing that developers prefer standardized workflows over protocol-specific custom implementations.

Sustainability considerations may increasingly influence protocol design. Energy-efficient consensus mechanisms, renewable energy incentives for node operators, or carbon credit integration could differentiate protocols appealing to environmentally conscious users. As AI's energy consumption draws scrutiny, decentralized networks might position efficiency as a competitive advantage.

Media coverage and crypto community attention serve as leading indicators of mainstream awareness. Increased discussion of specific protocols, rising search interest, or growing social media following often precedes broader adoption and token price appreciation. However, hype cycles can create misleading signals disconnected from fundamental growth.

Conclusion

Physical Infrastructure Finance represents crypto's evolution into the coordination of real-world computational resources. By tokenizing compute capacity, PinFi protocols create markets where idle GPUs become productive assets generating yield through AI workloads, edge processing and specialized infrastructure needs.

The convergence of AI's insatiable demand for computing power with crypto's ability to coordinate distributed systems through economic incentives creates a compelling value proposition. GPU shortages affecting over 50% of generative AI companies demonstrate the severity of infrastructure bottlenecks. Projections that decentralized compute markets will grow from $9 billion in 2024 to $100 billion by 2032 suggest growing recognition that distributed models can capture latent supply.
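For scale, growth from $9 billion in 2024 to $100 billion by 2032 implies a compound annual growth rate (CAGR) of roughly 35%. The short check below computes it from those two figures, assuming eight years of compounding between 2024 and 2032.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


# $9B in 2024 growing to a projected $100B in 2032.
rate = cagr(9e9, 100e9, 2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 35.1%
```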

Protocols like Bittensor, Render, Akash and io.net demonstrate varied approaches to the same fundamental challenge: efficiently matching compute supply with demand through permissionless, blockchain-based coordination. Each network experiments with different tokenomics, verification mechanisms and target applications, contributing to a broader ecosystem exploring the design space for decentralized infrastructure.

The implications extend beyond crypto into the AI industry and computational infrastructure more broadly. Democratized access to GPU resources lowers barriers for AI innovation. Reduced dependence on centralized cloud oligopolies introduces competitive dynamics that may improve pricing and accessibility. New asset classes emerge as tokens represent ownership in productive infrastructure rather than pure speculation.

Significant challenges remain. Technical reliability, verification mechanisms, economic sustainability, regulatory uncertainty and competition from well-capitalized incumbents all pose risks. Not every protocol will survive, and many tokens may prove overvalued relative to fundamental utility. But the core insight driving PinFi appears sound: vast computational capacity sits idle worldwide, massive demand exists for AI infrastructure, and blockchain-based coordination can connect that idle supply with unmet demand.

As AI demand continues to explode, the infrastructure layer powering this technology will prove increasingly critical. Whether that infrastructure remains concentrated among a few centralized providers or evolves toward distributed ownership models coordinated through crypto-economic incentives may define the competitive landscape of AI development for the next decade.

The infrastructure finance of the future may look less like traditional project finance and more like tokenized networks of globally distributed hardware, where anyone with a GPU can become an infrastructure provider and where access requires no permission beyond market-rate payment. This represents a fundamental reimagining of how computational resources are owned, operated and monetized, one in which crypto protocols demonstrate utility beyond financial speculation by solving tangible problems in the physical world.