Every second, hundreds of thousands of transactions flow through blockchain networks. Traders execute swaps on decentralized exchanges, users mint NFTs, validators secure proof-of-stake networks, and smart contracts settle automatically without intermediaries. The promise of Web3 is simple: decentralized systems that run continuously, transparently, and without single points of failure.

But behind this vision of autonomous code lies a remarkably complex infrastructure layer that few users ever see. Every transaction that touches a blockchain requires infrastructure to function. Someone operates the nodes that validate transactions, maintains the RPC endpoints that allow applications to read and write blockchain data, and runs the indexers that make on-chain information queryable.

When a DeFi protocol processes billions in daily volume or an NFT marketplace handles traffic spikes during major drops, professional DevOps teams ensure the infrastructure stays responsive, secure, and available.

The stakes for infrastructure reliability in crypto are extraordinarily high. A failed validator can result in slashed staking deposits. An overloaded RPC endpoint can prevent users from executing time-sensitive trades, leading to liquidations worth millions. A misconfigured indexer can serve stale data that breaks application logic. Unlike traditional web applications where downtime means frustrated users, infrastructure failures in crypto can mean direct financial losses for users and protocols alike.

As Web3 ecosystems mature and handle increasingly serious financial activity, the DevOps discipline within crypto has evolved from hobbyist node operators to sophisticated infrastructure teams managing multi-chain operations with enterprise-grade reliability. This evolution mirrors the broader professionalization of the crypto industry, where protocols handling billions in total value locked demand infrastructure operations that meet or exceed traditional financial technology standards.

This article examines how crypto DevOps actually works in practice. It explores the systems professional teams build and maintain, the tools they rely on, the challenges unique to decentralized infrastructure, and the operational practices that keep Web3 running smoothly around the clock. Understanding this hidden layer reveals how decentralization meets operational reality and why infrastructure expertise has become a strategic capability in the blockchain space.

What Is Crypto DevOps?

To understand crypto DevOps, it helps to start with traditional DevOps. In conventional software development, DevOps emerged as a discipline focused on bridging the gap between software development and IT operations. DevOps practitioners automate deployments, manage infrastructure as code, implement continuous integration and delivery pipelines, and ensure systems remain reliable under varying loads. The goal is reducing friction between writing code and running it reliably in production while maintaining rapid iteration cycles.

Traditional DevOps teams work with familiar components: web servers, databases, message queues, load balancers, and monitoring systems. They deploy applications to cloud platforms, scale resources dynamically based on traffic, and respond to incidents when services degrade. Infrastructure as code tools like Terraform allow them to define entire environments programmatically, making infrastructure reproducible and version-controlled.

Crypto DevOps extends these same principles into the world of decentralized networks, but with significant differences stemming from blockchain architecture. Rather than deploying centralized applications that a single team controls, crypto DevOps teams manage infrastructure that participates in peer-to-peer networks where consensus rules govern behavior.

They operate nodes that must synchronize with thousands of other nodes worldwide, maintain compatibility with rapidly evolving protocol upgrades, and ensure their infrastructure remains available when network conditions are unpredictable.

The core responsibilities of crypto DevOps teams include running and maintaining blockchain nodes that verify transactions and participate in network consensus. Full nodes download and validate the entire blockchain history, while validator nodes in proof-of-stake systems actively participate in block production and earn staking rewards. Archive nodes store complete historical state, enabling queries about any past blockchain state.

Managing RPC endpoints represents another crucial responsibility. Remote Procedure Call infrastructure allows decentralized applications to interact with blockchains without running full nodes themselves. When a user connects their wallet to a DeFi protocol, that application sends JSON-RPC requests to infrastructure querying the current state of smart contracts, checking token balances, and broadcasting signed transactions. Professional RPC infrastructure must handle thousands of requests per second reliably with low latency.

Operating indexers and APIs adds another layer. Raw blockchain data is append-only and optimized for consensus, not queries. Indexers watch the chain in real time, extract relevant data from transactions and smart contract events, and organize it into databases optimized for specific query patterns.

The Graph protocol, for instance, allows developers to define subgraphs that index specific contract events and expose them through GraphQL APIs. Teams running their own indexers must ensure they stay synchronized with the chain and serve accurate, up-to-date information.

Observability and monitoring form the backbone of reliable crypto operations. DevOps teams instrument their infrastructure comprehensively, tracking metrics like node synchronization status, peer connections, memory usage, disk I/O, request latency, and error rates. They configure alerts to detect degradations quickly and maintain dashboards showing real-time system health. In crypto, where networks never sleep and issues can cascade quickly, robust monitoring is not optional.

Essentially, crypto DevOps serves as the reliability layer of Web3. While smart contracts define what applications should do and consensus mechanisms ensure agreement on state transitions, DevOps infrastructure provides the practical capability for applications and users to interact with chains reliably. Without professional operations teams, even the most elegant protocol designs would struggle to deliver consistent user experiences.

The Core Infrastructure Stack

Understanding what crypto DevOps teams actually manage requires examining the infrastructure stack's technical components. Unlike traditional web applications with relatively standardized architectures, blockchain infrastructure involves specialized systems designed for decentralized networks.

At the foundation sit full nodes and validators. Full nodes are instances of blockchain client software that download, verify, and store the complete blockchain. Running a full node means independently validating every transaction and block according to consensus rules rather than trusting third parties.

Different blockchains have different node implementations. Ethereum has clients like Geth, Nethermind, and Besu. Solana uses the Solana Labs validator client. Bitcoin Core represents the reference implementation for Bitcoin.

Validators extend beyond passive verification to active consensus participation. In proof-of-stake systems, validators propose new blocks and attest to others' proposals, earning rewards for correct behavior and facing penalties for downtime or malicious actions. Running validators requires careful key management, high uptime guarantees, and often significant capital stake. The validator role brings operational requirements closer to running critical financial infrastructure than typical web services.

RPC nodes form the primary interface between applications and blockchains. These specialized nodes expose JSON-RPC endpoints that applications call to query blockchain state and submit transactions. An RPC node might handle requests to check an account's token balance, retrieve smart contract code, estimate transaction gas costs, or broadcast signed transactions to the network. Unlike validators, RPC nodes don't participate in consensus but must stay synchronized with the chain tip to serve current state. Teams often run multiple RPC nodes behind load balancers to handle traffic and provide redundancy.

Indexers represent crucial infrastructure for making blockchain data practically queryable. Searching for specific events in blockchain history by querying nodes directly would require scanning millions of blocks. Indexers solve this by watching chain activity continuously, extracting relevant data, and storing it in databases optimized for specific access patterns.

The Graph protocol pioneered decentralized indexing through subgraphs, where developers define which smart contract events to track and expose them via GraphQL. Other solutions like SubQuery, Covalent, and custom indexing services fulfill similar roles across different chains.

Load balancers and caching layers optimize infrastructure performance under real-world traffic. Geographic load balancing routes requests to the nearest RPC nodes, reducing latency. Caching frequently accessed data like token metadata or popular smart contract states reduces load on backend nodes. Some teams use Redis or Memcached to cache responses for queries that don't require absolute real-time accuracy, dramatically improving response times and reducing costs for redundant lookups.

Monitoring and alerting systems provide visibility into infrastructure health. Prometheus has become the de facto standard for metrics collection in crypto operations, scraping data from instrumented nodes and storing time-series data. Grafana transforms these metrics into visual dashboards showing request rates, latencies, error percentages, and resource utilization.

OpenTelemetry is increasingly used for distributed tracing, allowing teams to follow individual transaction flows through complex infrastructure stacks. Log aggregation tools like Loki or ELK stacks collect and index logs from all components for troubleshooting and analysis.

Consider a practical example: A DeFi application running on Ethereum might rely on Infura's managed RPC service for routine queries about token prices and user balances. The same application might run its own validator on Polygon to participate in that network's consensus and earn staking rewards.

For complex analytics queries, the application might host a custom Graph indexer tracking liquidity pool events and trades. Behind the scenes, all these components are monitored through Grafana dashboards showing RPC latency, validator uptime, indexer lag behind chain tip, and alert thresholds configured to page on-call engineers when issues arise.

This stack represents just the baseline. More sophisticated setups include multiple redundant nodes per chain, backup RPC providers, automated failover mechanisms, and comprehensive disaster recovery plans. The complexity scales with the number of chains supported, the criticality of uptime requirements, and the sophistication of services offered.

Managed Infrastructure Providers vs. Self-Hosted Setups

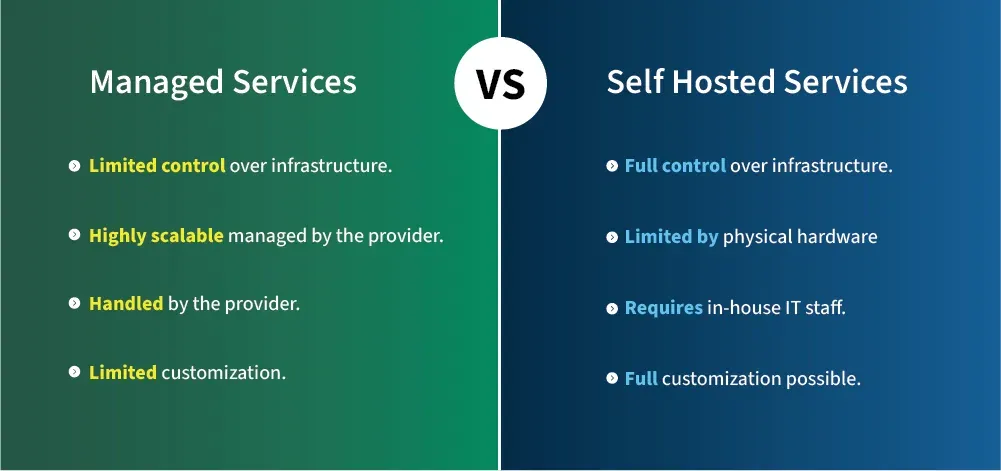

Crypto teams face a fundamental operational decision: rely on managed infrastructure providers or build and maintain their own systems. This choice involves significant trade-offs in cost, control, reliability, and strategic positioning.

Managed RPC providers emerged to solve infrastructure complexity for application developers. Services like Infura, Alchemy, QuickNode, Chainstack, and Blockdaemon offer instant access to blockchain nodes across multiple networks without operational overhead. Developers sign up, receive API keys, and immediately start querying chains through provided endpoints. These providers handle node maintenance, scaling, upgrades, and monitoring.

The advantages of managed services are substantial. Rapid scalability allows applications to handle traffic surges without provisioning infrastructure. Multi-chain coverage means developers access dozens of networks through a single provider relationship rather than operating nodes for each chain. Enterprise support provides expert assistance when issues arise.

Managed providers typically offer higher SLA guarantees than teams could achieve independently without significant investment. For startups and small teams, managed services eliminate the need to hire specialized DevOps staff and dramatically reduce time to market.

However, managed infrastructure introduces dependencies that concern serious protocols. Centralization risk represents the most significant concern. When many applications rely on the same handful of providers, those providers become potential points of failure or censorship. If Infura experiences outages, significant portions of the Ethereum ecosystem can become inaccessible simultaneously.

This happened in November 2020 when an Infura outage prevented users from accessing MetaMask and many DeFi applications. The incident highlighted how decentralized applications remained dependent on centralized infrastructure.

Vendor dependency creates additional risks. Applications relying heavily on a provider's specific API features or optimizations face significant switching costs. Pricing changes, service degradations, or provider business failures can force disruptive migrations. Privacy exposure matters for applications handling sensitive data, as managed providers can potentially observe all RPC requests, including user addresses and transaction patterns.

Self-hosted infrastructure offers maximum control and aligns better with Web3's decentralization ethos. Running internal node clusters, custom APIs, and monitoring stacks allows teams to optimize performance for specific use cases, implement custom caching strategies, and maintain complete data privacy.

Compliance requirements for regulated entities often mandate on-premise infrastructure with documented custody of sensitive data. Self-hosted setups enable teams to choose specialized hardware, optimize for specific chains, and avoid sharing resources with other tenants.

The costs of self-hosting are substantial. Infrastructure requires meaningful capital investment in hardware or cloud resources. Maintenance overhead includes managing operating system updates, blockchain client upgrades, security patches, and capacity planning. Running blockchain nodes 24/7 demands either on-call rotations or paying for always-available engineering staff. Achieving high availability comparable to managed providers requires redundant infrastructure across multiple geographic regions.

Real-world approaches often combine both models strategically. Uniswap, one of the largest decentralized exchanges, uses multiple RPC providers to avoid single points of failure. The Uniswap interface can fail over between providers automatically if one becomes unavailable or slow.

Coinbase, operating at massive scale with strict compliance requirements, built extensive internal infrastructure through Coinbase Cloud while also partnering with external providers for specific chains or redundancy. The Ethereum Foundation maintains public RPC endpoints for testnets, ensuring developers can access these networks even without paid services.

Protocol maturity influences decisions significantly. Early-stage projects typically start with managed providers to validate product-market fit quickly without infrastructure distractions. As protocols grow and stakes increase, they gradually build internal capabilities, starting with critical components like validators for chains where they stake significant capital. Mature protocols often run hybrid setups, self-hosting primary infrastructure for control while maintaining managed service relationships as backup or for less critical chains.

The economics of the decision depend heavily on scale. For applications serving thousands of requests per month, managed providers offer far better economics than the fixed costs of running nodes. At millions of requests monthly, self-hosted infrastructure often becomes more cost-effective despite higher operational complexity. Beyond pure economics, strategic considerations around decentralization, data privacy, and platform risk drive infrastructure decisions for protocols handling significant value.

Uptime, Reliability, and Service Level Agreements

In traditional web applications, downtime is inconvenient. Users wait briefly and retry. In crypto infrastructure, downtime can be catastrophic. Traders unable to access exchanges during volatile markets suffer losses. DeFi users facing liquidation events cannot add collateral if their wallets cannot connect to the protocol. Validators offline during their assigned slots lose rewards and face slashing penalties. The financial nature of blockchain applications elevates infrastructure reliability from operational concern to existential requirement.

Service Level Agreements quantify reliability expectations. An SLA of 99.9 percent uptime, often called "three nines," allows approximately 43 minutes of downtime monthly. Many consumer services operate at this level acceptably. Enterprise crypto infrastructure targets 99.99 percent, or "four nines," permitting only about four minutes monthly downtime.

The most critical infrastructure, such as major exchange systems or large validator operations, aims for 99.999 percent, allowing merely 26 seconds monthly downtime. Each additional nine of reliability becomes exponentially more expensive to achieve.

Professional crypto DevOps teams achieve high availability through redundancy at every infrastructure layer. Multi-region deployment distributes infrastructure across geographically separate locations. Cloud providers offer regions spanning continents, allowing applications to survive entire data center failures.

Some teams deploy across multiple cloud providers, mixing AWS, Google Cloud, and DigitalOcean to avoid single provider risk. Others combine cloud instances with bare metal servers in colocation facilities for cost optimization and vendor independence.

Failover systems detect failures automatically and route traffic to healthy components. Load balancers continuously health-check backend RPC nodes, removing unresponsive instances from rotation. Backup nodes remain synchronized and ready to assume primary roles when needed. Some sophisticated setups use automated deployment tools to spin up replacement infrastructure within minutes when failures occur, leveraging infrastructure as code to recreate systems reproducibly.

Load balancing strategies go beyond simple round-robin request distribution. Geographic routing sends users to the nearest regional infrastructure, minimizing latency while providing redundancy if regions fail. Weighted routing can gradually shift traffic during deployments or when testing new infrastructure. Some teams implement circuit breakers that detect degraded nodes through increased error rates or latency and temporarily remove them from rotation automatically.

Chain-specific challenges complicate achieving consistent uptime. Solana experienced multiple significant outages through 2022 and 2023 where the entire network halted, requiring validator coordination to restart. No amount of infrastructure redundancy helps when the underlying blockchain stops producing blocks.

Avalanche's subnet architecture creates scaling benefits but requires infrastructure teams to run nodes for multiple subnets, multiplying operational complexity. Ethereum's proof-of-stake transition introduced new considerations around validator effectiveness and avoiding slashing conditions.

Ethereum's gas price volatility creates another operational challenge. During network congestion, transaction costs spike unpredictably. Infrastructure handling many transactions must implement sophisticated gas management strategies, including dynamic gas price algorithms, transaction retry logic, and sometimes subsidizing user transactions during extreme conditions.

Failure to properly manage gas can cause transactions to fail or remain pending indefinitely, effectively creating application outages even when infrastructure operates correctly.

Validator operations face unique uptime requirements. Proof-of-stake validators must remain online and responsive to avoid missing their assigned attestation and proposal duties. Missing attestations reduces validator rewards, while extended downtime can trigger slashing, burning a portion of staked capital.

Professional staking operations achieve extremely high uptime through dedicated hardware, redundant networking, automated failover between primary and backup validators, and sophisticated monitoring alerting on missed attestations within seconds.

The intersection of blockchain protocol risk and infrastructure reliability creates interesting dynamics. Teams must balance maximizing uptime of their own infrastructure against participating in occasionally unreliable networks.

When Solana halted, professional infrastructure teams documented incidents, coordinated on validator restarts, and communicated transparently with customers about circumstances beyond their control. These incidents highlight that crypto DevOps extends beyond maintaining servers to participating actively in protocol-level incident response across public networks.

Observability and Monitoring

Professional crypto infrastructure teams operate under a fundamental principle: you cannot manage what you cannot measure. Comprehensive observability separates reliable operations from those constantly fighting fires. In systems where issues often cascade quickly and financial stakes are high, detecting problems early and diagnosing them accurately becomes critical.

Observability in Web3 infrastructure encompasses three pillars: metrics, logs, and traces. Metrics provide quantitative measurements of system state and behavior over time. CPU utilization, memory consumption, disk I/O, network throughput all indicate resource health. Crypto-specific metrics include node peer count, indicating healthy network connectivity; synchronization lag, showing how far behind the chain tip a node has fallen; RPC request rates and latencies, revealing application load and responsiveness; and block production rates for validators.

Prometheus has become the standard metrics collection system in crypto DevOps. Blockchain clients increasingly expose Prometheus-compatible metrics endpoints that scrape collectors query periodically. Teams define recording rules to pre-aggregate common queries and alerting rules that evaluate metric thresholds continuously. Prometheus stores time-series data efficiently, enabling historical analysis and trend identification.

Grafana transforms raw metrics into visual dashboards accessible to both technical and non-technical stakeholders. Well-designed dashboards show infrastructure health at a glance through color-coded panels, trend graphs, and clear warning indicators.

Teams typically maintain several dashboard levels: high-level overviews for executives showing aggregate uptime and request success rates, operational dashboards for DevOps teams showing detailed resource utilization and performance metrics, and specialized dashboards for specific chains or components showing protocol-specific metrics.

Logs capture detailed event information explaining what systems are doing and why issues occur. Application logs record significant events like transaction processing, API requests, and errors. System logs document operating system and infrastructure events.

Blockchain nodes generate logs about peer connections, block reception, consensus participation, and validation errors. During incidents, logs provide the detailed context needed to understand failure root causes.

Log aggregation systems collect logs from distributed infrastructure into centralized queryable stores. Loki, often used alongside Grafana, provides lightweight log aggregation with powerful query capabilities. The Elasticsearch, Logstash, Kibana (ELK) stack offers more features but requires more resources.

Structured logging, where applications output logs in JSON format with consistent fields, dramatically improves log searchability and enables automated analysis.

Distributed tracing follows individual requests through complex infrastructure stacks. In crypto operations, a single user transaction might touch a load balancer, get routed to an RPC node, trigger smart contract execution, generate events captured by an indexer, and update caches.

Tracing instruments each component to record timing and context, allowing teams to visualize complete request flows. OpenTelemetry has emerged as the standard tracing framework, with support growing across blockchain infrastructure components.

Professional teams monitor both infrastructure metrics and protocol-level health indicators. Infrastructure metrics reveal resource constraints, network issues, and software problems.

Protocol metrics expose chain-specific concerns like validator participation rates, mempool sizes, and consensus issues. Some problems manifest primarily in protocol metrics while infrastructure appears healthy, such as when a node loses peer connectivity due to network partitioning but continues running otherwise normally.

Alerting transforms metrics into actionable notifications. Teams define alert rules based on metric thresholds, such as RPC latency exceeding 500 milliseconds, node peer count dropping below 10, or indexer synchronization lag exceeding 100 blocks.

Alert severity levels distinguish between issues requiring immediate attention and those that can wait for business hours. Integration with incident management platforms like PagerDuty or Opsgenie ensures the right people are notified through the appropriate channels based on severity and on-call schedules.

Status pages provide transparency about infrastructure health to users and partners. Tools like UptimeRobot, Statuspage, or BetterStack monitor service availability and display public dashboards showing current status and historical uptime. Major providers maintain detailed status pages with component-level granularity, allowing users to see which specific chains or features are experiencing issues.

Example monitoring workflows illustrate observability in action. When RPC latency increases, alerts trigger immediately. On-call engineers open dashboards showing RPC node metrics and quickly identify one node processing significantly more requests than others due to load balancer misconfiguration. They rebalance traffic and verify latency returns to normal. Logs confirm the issue began after a recent deployment, prompting rollback of that change. Traces show which endpoints experienced the highest latency, guiding optimization efforts.

Another common scenario involves synchronization lag detection. An indexer falls behind the chain tip after a period of high transaction volume. Alerts fire when lag exceeds thresholds. Engineers examining logs discover the indexer's database performing slowly due to missing indices on recently added tables. They add appropriate indices, and synchronization catches up. Postmortem analysis leads to automated testing of indexer performance before deployments to prevent recurrence.

Incident Response and Crisis Management

Despite careful planning and robust infrastructure, incidents occur. Network issues, software bugs, hardware failures, and protocol-level problems eventually affect even the best-operated systems. How teams respond to incidents separates mature operations from amateur ones. In crypto, where incidents can rapidly evolve into user-affecting outages or financial losses, rapid and systematic incident response is essential.

Professional crypto DevOps teams maintain 24/7 on-call rotations. At any moment, designated engineers are available to respond within minutes to production alerts. On-call responsibilities rotate among qualified team members, typically changing weekly to prevent burnout. Teams must be staffed adequately across time zones to avoid individual engineers enduring excessive on-call burdens. For critical infrastructure, teams often maintain primary and secondary on-call rotations, ensuring backup coverage if the primary responder is unavailable.

Automated alerting systems form the backbone of incident detection. Rather than humans watching dashboards continuously, monitoring systems evaluate conditions constantly and page engineers when thresholds breach. Integration with platforms like PagerDuty or Opsgenie handles alert routing, escalation policies, and acknowledgment tracking. Well-configured alerting balances sensitivity, catching real issues quickly, against specificity, avoiding alert fatigue from false positives that train engineers to ignore notifications.

When incidents occur, structured response processes guide actions. Engineers receiving alerts acknowledge them immediately, signaling awareness and preventing escalation. They quickly assess severity using predefined criteria. Severity 1 incidents involve user-facing outages or data loss requiring immediate all-hands response. Severity 2 incidents affect functionality degraded but not completely unavailable. Lower severity incidents can wait for business hours.

Incident communication is crucial. Teams establish dedicated communication channels, often Slack channels or dedicated incident management platforms, where responders coordinate. Regular status updates to stakeholders prevent duplicate investigation and keep management informed. For user-facing incidents, updates to status pages and social media channels set expectations and maintain trust.

Common failure types in crypto infrastructure include node desynchronization, where blockchain clients fall out of consensus with the network due to software bugs, network partitions, or resource exhaustion. Recovery often requires restarting nodes, potentially re-syncing from snapshots. RPC overload occurs when request volume exceeds infrastructure capacity, causing timeouts and errors. Immediate mitigations include rate limiting, activating additional capacity, or failing over to backup providers.

Indexer crashes can stem from software bugs when processing unexpected transaction patterns or database capacity issues. Quick fixes might involve restarting with increased resources, while permanent solutions require code fixes or schema optimizations. Smart contract event mismatches happen when indexers expect specific event formats but contracts emit differently, causing processing errors. Resolution requires either updating indexer logic or understanding why contracts behave unexpectedly.

The Solana network outages of 2022 provide instructive examples of large-scale incident response in crypto. When the network halted due to resource exhaustion from bot activity, validator operators worldwide coordinated through Discord and Telegram channels to diagnose issues, develop fixes, and orchestrate network restarts. Infrastructure teams simultaneously communicated with users about the situation, documented timelines, and updated status pages. The incidents highlighted the unique challenges of decentralized incident response where no single authority controls infrastructure.

Ethereum RPC congestion events illustrate different challenges. During significant market volatility or popular NFT mints, RPC request volumes spike dramatically. Providers face difficult decisions about rate limiting, which protects infrastructure but frustrates users, versus accepting degraded performance or outages. Sophisticated providers implement tiered service levels, prioritizing paid customers while rate limiting free tiers more aggressively.

Root cause analysis and postmortem culture are hallmarks of mature operations. After resolving incidents, teams conduct blameless postmortems analyzing what happened, why it happened, and how to prevent recurrence. Postmortem documents include detailed incident timelines, contributing factors, impact assessment, and concrete action items with assigned owners and deadlines. The blameless aspect is crucial: postmortems focus on systemic issues and process improvements rather than individual blame, encouraging honest analysis and learning.

Action items from postmortems drive continuous improvement. If an incident resulted from missing monitoring, teams add relevant metrics and alerts. If inadequate documentation slowed response, they improve runbooks. If a single point of failure caused the outage, they architect redundancy. Tracking and completing postmortem action items prevents recurring incidents and builds organizational knowledge.

Scaling Strategies for Web3 Infrastructure

Scaling blockchain infrastructure differs fundamentally from scaling traditional web applications, requiring specialized strategies that account for the unique constraints of decentralized systems. While Web2 applications can often scale horizontally by adding more identical servers behind load balancers, blockchain infrastructure involves components that cannot simply be replicated to increase capacity.

The critical limitation is that blockchains themselves cannot horizontally scale for consensus throughput. Adding more validator nodes to a proof-of-stake network does not increase transaction processing capacity; it simply distributes validation across more participants. The network's throughput is determined by protocol parameters like block size, block time, and gas limits, not by how much infrastructure operators deploy. This fundamental constraint shapes all scaling approaches.

Where horizontal scaling does help is read capacity. Running multiple RPC nodes behind load balancers allows infrastructure to serve more concurrent queries about blockchain state. Each node maintains a complete copy of the chain and can answer read requests independently. Professional setups deploy dozens or hundreds of RPC nodes to handle high request volumes. Geographic distribution places nodes closer to users worldwide, reducing latency through reduced network distance.

Load balancing between RPC nodes requires intelligent algorithms beyond simple round-robin distribution. Least-connection strategies route requests to nodes handling the fewest active connections, balancing load dynamically. Weighted algorithms account for nodes with different capacities, sending proportionally more traffic to powerful servers. Health checking continuously tests node responsiveness, removing degraded nodes from rotation before they cause user-visible errors.

Caching dramatically reduces backend load for repetitive queries. Many blockchain queries request data that changes infrequently, such as token metadata, historical transaction details, or smart contract code. Caching these responses in Redis, Memcached, or CDN edge locations allows serving repeated requests without hitting blockchain nodes. Cache invalidation strategies vary by data type: completely immutable historical data can be cached indefinitely, while current state requires short time-to-live values or explicit invalidation on new blocks.

Content delivery networks extend caching globally. For static content like token metadata or NFT images, CDNs cache copies at edge locations worldwide, serving users from the nearest geographic point of presence. Some advanced setups cache even dynamic blockchain queries at edge locations with very short TTLs, dramatically improving response times for frequently accessed data.

Indexers require different scaling approaches since they must process every block and transaction. Sharded indexing architectures split blockchain data across multiple indexer instances, each processing a subset of contracts or transaction types.

This parallelism increases processing capacity but requires coordination to maintain consistency. Data streaming architectures like Apache Kafka allow indexers to consume blockchain events through publish-subscribe patterns, enabling multiple downstream consumers to process data independently at different rates.

Integration with Layer 2 solutions and rollups provides alternative scaling approaches. Optimistic and zero-knowledge rollups batch transactions off-chain, posting compressed data to Layer 1 for security. Infrastructure supporting Layer 2s requires running rollup-specific nodes and sequencers, adding complexity but enabling much higher transaction throughput. Querying rollup state requires specialized infrastructure that understands rollup architecture and can provide consistent views across Layer 1 and Layer 2 states.

Archive nodes versus pruned nodes represent another scaling trade-off. Full archive nodes store every historical state, enabling queries about any past blockchain state but requiring massive storage (multiple terabytes for Ethereum). Pruned nodes discard old state, keeping only recent history and the current state, dramatically reducing storage requirements but limiting historical query capabilities. Teams choose based on their needs: applications requiring historical analysis need archive nodes, while those querying only current state can use pruned nodes more economically.

Specialized infrastructure for specific use cases enables focused optimizations. Rather than running general-purpose nodes handling all query types, some teams deploy nodes optimized for specific patterns. Nodes with additional RAM might cache more state for faster queries. Nodes with fast SSDs prioritize read latency. Nodes on high-bandwidth connections handle streaming real-time event subscriptions efficiently. This specialization allows meeting different performance requirements cost-effectively.

Rollups-as-a-service platforms introduce additional scaling dimensions. Services like Caldera, Conduit, and Altlayer allow teams to deploy application-specific rollups with customized parameters. These app-chains provide dedicated throughput for specific applications while maintaining security through settlement on established Layer 1 chains. Infrastructure teams must operate sequencers, provers, and bridges, but gain control over their own throughput and gas economics.

Modular blockchain architectures emerging with Celestia, Eigenlayer, and similar platforms separate consensus, data availability, and execution layers. This composability allows infrastructure teams to mix and match components, potentially scaling different aspects independently. A rollup might use Ethereum for settlement, Celestia for data availability, and its own execution environment, requiring infrastructure spanning multiple distinct systems.

The future scaling roadmap involves increasingly sophisticated architectural patterns. Zero-knowledge proof generation for validity rollups requires specialized hardware, often GPUs or custom ASICs, adding entirely new infrastructure categories. Parallel execution environments promise increased throughput through better utilization of modern multi-core processors but require infrastructure updates to support these execution models.

Cost Control and Optimization

Running blockchain infrastructure is expensive, with costs spanning compute resources, storage, bandwidth, and personnel. Professional teams balance reliability and performance against economic constraints through careful cost management and optimization strategies.

Infrastructure cost drivers vary by component type. Node hosting costs include compute instances or physical servers, which must remain online continuously. Ethereum full nodes require powerful machines with fast CPUs, 16GB+ RAM, and high-speed storage. Validator operations demand even higher reliability, often justified dedicated hardware. Cloud instance costs accumulate continuously; even modest nodes cost hundreds of dollars monthly per instance, multiplying across chains and redundant deployments.

Bandwidth represents a significant cost, particularly for popular RPC endpoints. Each blockchain query consumes bandwidth, and high-traffic applications can transfer terabytes monthly. Archive nodes serving historical data transfer especially high volumes. Cloud providers charge separately for outbound bandwidth, sometimes at surprisingly high rates. Some teams migrate to providers with more favorable bandwidth pricing or use bare metal hosting at colocation facilities with flat-rate bandwidth.

Storage costs grow relentlessly as blockchains accumulate history. Ethereum's chain exceeds 1TB for full archive nodes and continues growing. High-performance NVMe SSDs needed for acceptable node performance cost significantly more than traditional spinning disks. Teams provision storage capacity with growth projections, avoiding expensive emergency expansions when disks fill.

Data access through managed RPC providers follows different economics. Providers typically charge per API request or through monthly subscription tiers with included request quotas. Pricing varies significantly between providers and scales with request volume. Applications with millions of monthly requests face potentially substantial bills. Some providers offer volume discounts or custom enterprise agreements for large customers.

Optimization strategies start with right-sizing infrastructure. Many teams overprovision resources conservatively, running nodes with excess capacity that remains unused most of the time. Careful monitoring reveals actual resource utilization, enabling downsizing to appropriately sized instances. Cloud environments make this easy through instance type changes, though teams must balance savings against reliability risks from operating closer to capacity limits.

Elastic scaling uses cloud provider auto-scaling capabilities to expand capacity during traffic peaks and contract during quiet periods. This works well for horizontally scalable components like RPC nodes, where additional instances can be launched within minutes when request rates increase and terminated when load decreases. Elastic scaling reduces costs by avoiding continuously running capacity needed only occasionally.

Spot instances and preemptible VMs offer dramatically reduced compute costs in exchange for accepting that cloud providers can reclaim instances on short notice. For fault-tolerant workloads like redundant RPC nodes, spot instances reduce costs by 60-80 percent. Infrastructure must handle instance terminations gracefully, automatically replacing lost instances from pools and ensuring sufficient redundant capacity that losing individual instances doesn't impact availability.

Pruning full nodes trades historical query capability for reduced storage requirements. Most applications need only current blockchain state, not complete history. Pruned nodes maintain consensus participation and can serve current state queries while consuming fraction the storage of archive nodes. Teams maintain a few archive nodes for specific historical queries while running primarily pruned nodes.

Choosing between archive and non-archive nodes depends on application requirements. Archive nodes are necessary for applications querying historical state, such as analytics platforms or block explorers. Most DeFi and NFT applications need only current state, making expensive archive nodes unnecessary. Hybrid approaches maintain one archive node per chain for occasional historical queries while using pruned nodes for routine operations.

Caching and query optimization dramatically reduce redundant node load. Applications often repeatedly query the same data, such as token prices, ENS names, or popular smart contract state. Implementing application-level caching with appropriate invalidation policies prevents repeatedly querying nodes for unchanged data. Some teams analyze query patterns to identify optimization opportunities, adding specialized caches or precomputed results for common query types.

Reserved instances for predictable baseline capacity provide significant cloud cost savings compared to on-demand pricing. Most blockchain infrastructure requires continuous operation, making reserved instances with one or three-year commitments attractive. Teams reserve capacity for baseline needs while using on-demand or spot instances for peak capacity, optimizing costs across the fleet.

Multi-cloud and bare metal strategies reduce vendor lock-in and optimize costs. Deploying across AWS, Google Cloud, and DigitalOcean allows choosing the most cost-effective provider for each workload. Bare metal servers in colocation facilities offer better economics at scale with predictable monthly costs, though requiring more operational expertise. Hybrid approaches maintain cloud presence for flexibility while migrating stable workloads to owned hardware.

Monitoring and analyzing costs continuously is essential for optimization. Cloud providers offer cost management tools showing spending patterns by resource type. Teams set budgets, configure spending alerts, and regularly review costs to identify unexpected increases or optimization opportunities. Tagging resources by project, team, or purpose enables understanding which applications drive costs and where optimization efforts should focus.

Provider pricing models vary significantly and bear careful comparison. Alchemy offers pay-as-you-go and subscription plans with different rate limits. QuickNode prices by request credits. Chainstack provides dedicated nodes under subscription plans. Understanding these models and monitoring usage allows choosing the most economical provider for specific needs. Some applications use different providers for different chains based on relative pricing.

The build versus buy decision involves comparing total cost of ownership. Managed services cost predictably but accumulate continuously. Self-hosted infrastructure has higher initial costs and ongoing personnel expenses but potentially lower unit costs at scale. The break-even point depends on request volumes, chains supported, and team capabilities. Many protocols start with managed services and graduate to self-hosted infrastructure as scale justifies the investment.

Multi-Chain Operations and Interoperability Challenges

Modern crypto applications increasingly operate across multiple blockchains, serving users on Ethereum, Polygon, Arbitrum, Avalanche, Solana, and numerous other chains. Multi-chain operations multiply infrastructure complexity, requiring teams to manage heterogeneous systems with different architectures, tooling, and operational characteristics.

EVM-compatible chains, including Ethereum, Polygon, BNB Smart Chain, Avalanche C-Chain, and Layer 2s like Arbitrum and Optimism, share similar infrastructure requirements. These chains run compatible node software like Geth or its forks, expose JSON-RPC APIs with consistent methods, and use the same tools for operations. DevOps teams can often reuse deployment templates, monitoring configurations, and operational runbooks across EVM chains with minor adjustments for chain-specific parameters.

However, even EVM chains have meaningful differences requiring specific operational knowledge. Polygon's high transaction throughput requires nodes with greater I/O capacity than Ethereum. Arbitrum and Optimism rollups introduce additional components like sequencers and fraud-proof systems that infrastructure teams must understand and operate. Avalanche's subnet architecture potentially requires running nodes for multiple subnets simultaneously. Gas price dynamics vary dramatically between chains, requiring chain-specific transaction management strategies.

Non-EVM chains introduce entirely different operational paradigms. Solana uses its own validator client written in Rust, requiring different hardware specifications, monitoring approaches, and operational procedures than Ethereum. Solana nodes need powerful CPUs and fast networking due to high throughput and gossip protocol intensity. The operating model differs fundamentally: Solana's state grows more slowly than Ethereum but requires different backup and snapshot strategies.

Aptos and Sui represent another architectural family with the Move programming language and different consensus mechanisms. These chains require learning entirely new node operation procedures, deployment patterns, and troubleshooting approaches. Move-based chains may require understanding new transaction formats, state models, and execution semantics compared to EVM experience.

Cosmos-based chains using the Tendermint consensus engine introduce yet another operational model. Each Cosmos chain potentially uses different application-specific logic built on the Cosmos SDK while sharing common consensus layer characteristics. Infrastructure teams operating multiple Cosmos chains must manage numerous independent networks while leveraging shared operational knowledge about Tendermint.

Tooling fragmentation across chains creates significant operational challenges. Monitoring Ethereum nodes uses well-established tools like Prometheus exporters built into major clients. Solana monitoring requires different exporters exposing chain-specific metrics. Each blockchain ecosystem develops its own monitoring tools, logging standards, and debugging utilities. Teams operating many chains either accept tool fragmentation, running different monitoring stacks per chain, or invest in building unified observability platforms abstracting chain differences.

Indexing infrastructure faces similar heterogeneity. The Graph protocol, dominant in Ethereum indexing, has expanding support for other EVM chains and some non-EVM chains, but coverage remains incomplete. Solana uses different indexing solutions like Pyth or custom indexers. Creating consistent indexing capabilities across all chains often requires operating multiple distinct indexing platforms and potentially building custom integration layers.

Alert complexity scales multiplically with chain count. Each chain needs monitoring for synchronization status, peer connectivity, and performance metrics. Validator operations on multiple chains require tracking distinct staking positions, reward rates, and slashing conditions. RPC infrastructure serves different endpoints per chain with potentially different performance characteristics. Aggregating alerts across chains while maintaining enough granularity for rapid troubleshooting challenges incident management systems.

Multi-chain dashboard design requires balancing comprehensive visibility against information overload. High-level dashboards show aggregate health across all chains, with individual chain drill-downs for details. Color coding and clear labeling help operators quickly identify which chain experiences issues. Some teams organize monitoring around services rather than chains, creating dashboards for RPC infrastructure, validator operations, and indexing infrastructure that include metrics across all relevant chains.

Deployment and configuration management grows complex with chain count. Infrastructure as code tools like Terraform help manage complexity by defining infrastructure programmatically. Teams create reusable modules for common patterns like "deploy RPC node" or "configure monitoring" that work across chains with appropriate parameters. Configuration management systems like Ansible or SaltStack maintain consistency across instances and chains.

Staffing for multi-chain operations requires balancing specialization against efficiency. Some teams assign specialists per chain who develop deep expertise in specific ecosystems. Others train operators across chains, accepting shallower per-chain expertise in exchange for operational flexibility. Mature teams often blend approaches: general operators handle routine tasks across all chains while specialists assist with complex issues and lead for their chains.

Cross-chain communication infrastructure introduces additional operational layers. Bridge operations require running validators or relayers monitoring multiple chains simultaneously, detecting events on source chains, and triggering actions on destination chains. Bridge infrastructure must handle concurrent multi-chain operations while maintaining security against relay attacks or censorship. Some sophisticated protocols operate their own bridges, adding significant complexity to infrastructure scope.

The heterogeneity of multi-chain operations creates natural pressure toward modular architectures and abstraction layers. Some teams build internal platforms abstracting chain-specific differences behind unified APIs. Others adopt emerging multi-chain standards and tools aiming to provide consistent operational interfaces across chains. As the industry matures, improved tooling and standardization may reduce multi-chain operational complexity, but current reality requires teams managing substantial heterogeneity.

Security, Compliance, and Key Management

Crypto infrastructure operations involve substantial security considerations extending beyond typical DevOps practices. The financial nature of blockchain systems, permanence of transactions, and cryptographic key management requirements demand heightened security discipline throughout infrastructure operations.

Protecting API keys and credentials represents a fundamental security practice. RPC endpoints, cloud provider access keys, monitoring service credentials, and infrastructure access tokens all require careful management. Exposure of production API keys could allow unauthorized access to infrastructure or sensitive data. Teams use secrets management systems like HashiCorp Vault, AWS Secrets Manager, or Kubernetes secrets to store credentials encrypted and access-controlled. Automated rotation policies periodically regenerate credentials, limiting exposure windows if breaches occur.

Node security starts with network-level protection. Blockchain nodes must be reachable by peers but not open to arbitrary access from the internet. Firewalls restrict inbound connections to required ports only, typically peer-to-peer gossip protocols and administrator SSH access. RPC endpoints serving applications face the internet but implement rate limiting to prevent denial of service attacks. Some teams deploy nodes behind VPNs or within private networks, exposing them through carefully configured load balancers with DDoS protection.

DDoS protection is essential for publicly accessible infrastructure. Distributed denial of service attacks flood infrastructure with traffic, attempting to overwhelm capacity and cause outages. Cloud-based DDoS mitigation services like Cloudflare filter malicious traffic before it reaches infrastructure. Rate limiting at multiple layers constrains request rates per IP address or API key. Some infrastructure implements proof-of-work or stake-based rate limiting where requesters must demonstrate computational work or stake tokens to prevent spam.

TLS encryption protects data in transit. All RPC endpoints should use HTTPS with valid TLS certificates rather than unencrypted HTTP. This prevents eavesdropping on blockchain queries, which might reveal trading strategies or user behavior. Websocket connections for real-time subscriptions similarly require TLS protection. Certificate management tools like Let's Encrypt automate certificate issuance and renewal, removing excuses for unencrypted communications.

Access control follows the principle of least privilege. Engineers receive only the minimum permissions necessary for their roles. Production infrastructure access is restricted to senior operators with documented need. Multi-factor authentication requirements protect against credential theft. Audit logging records all infrastructure access and changes, enabling forensic analysis if security incidents occur.

Validator operations introduce specific key management challenges. Validator signing keys must remain secure, as compromise allows attackers to propose malicious blocks or sign conflicting attestations, resulting in slashing. Professional validator operations use hardware security modules (HSMs) or remote signer infrastructure that maintains signing keys in secure enclaves separate from validator processes. This architecture means even if validator nodes are compromised, signing keys remain protected.

Hot wallets managing operational funds require careful security design. Infrastructure often controls wallets funding gas for transactions or managing protocol operation. While keeping keys online enables automated operations, it increases theft risk. Teams balance automation convenience against security through tiered wallet architectures: small hot wallets for routine operations, warm wallets requiring approval for larger transfers, and cold storage for reserves.

Backup and disaster recovery procedures must protect against both accidental loss and malicious theft. Encrypted backups stored in geographically diverse locations protect critical data including node databases, configuration files, and securely-stored credentials. Recovery procedures are tested regularly to ensure they actually work when needed. Some validator operations maintain complete standby infrastructure that can assume production roles quickly if primary infrastructure fails catastrophically.

Supply chain security has become increasingly important after high-profile compromises. Teams carefully vet software dependencies, preferring well-maintained open source projects with transparent development processes. Dependency scanning tools identify known vulnerabilities in packages. Some security-conscious teams audit critical dependencies or maintain forks with stricter security requirements. Container image scanning checks for vulnerabilities in infrastructure deployment artifacts.

Compliance requirements significantly impact infrastructure operations for regulated entities or those serving institutional customers. SOC 2 Type II certification demonstrates operational controls around security, availability, processing integrity, confidentiality, and privacy. ISO 27001 certification shows comprehensive information security management systems. These frameworks require documented policies, regular audits, and continuous monitoring - overhead that infrastructure teams must plan for and maintain.

Incident response for security events differs from operational incidents. Security incidents require preserving evidence for forensic analysis, potentially notifying affected users or regulators, and coordinating with legal teams. Response playbooks for security scenarios guide teams through these special considerations while still restoring service quickly.

Penetration testing and security audits periodically challenge infrastructure security. External specialists attempt to compromise systems, identifying vulnerabilities before attackers exploit them. These assessments inform security improvement roadmaps and validate control effectiveness. For critical infrastructure, regular auditing becomes part of continuous security verification.

The convergence of financial technology and infrastructure operations means crypto DevOps teams must think like financial system operators regarding security and compliance. As regulatory frameworks expand and institutional adoption increases, infrastructure security and compliance capabilities become competitive differentiators as much as pure technical capabilities.

The Future of Crypto DevOps

The crypto infrastructure landscape continues evolving rapidly, with emerging trends reshaping how teams operate blockchain systems. Understanding these directions helps infrastructure teams prepare for future requirements and opportunities.

Decentralized RPC networks represent a significant evolution from current centralized provider models. Projects like Pocket Network, Ankr, and DRPC aim to decentralize infrastructure itself, distributing RPC nodes across independent operators worldwide. Applications query these networks through gateway layers that route requests to nodes, verify responses, and handle payment.

The vision is eliminating single points of failure and censorship while maintaining performance and reliability through economic incentives. Infrastructure teams may shift from operating internal RPC nodes to participating as node operators in these networks, fundamentally changing operational models.

AI-assisted monitoring and predictive maintenance are beginning to transform operations. Machine learning models trained on historical metrics can detect anomalous patterns indicating developing problems before they cause outages. Predictive capacity planning uses traffic forecasts to scale infrastructure proactively rather than reactively. Some experimental systems automatically diagnose issues and suggest remediation, potentially automating routine incident response. As these technologies mature, they promise reducing operational burden while improving reliability.

Kubernetes has become increasingly central to blockchain infrastructure operations. While blockchain nodes are stateful and not naturally suited to containerized orchestration, Kubernetes provides powerful abstractions for managing complex distributed systems. Container-native blockchain deployments using operators that encode operational knowledge allow scaling infrastructure through declarative manifests.

Helm charts package complete blockchain infrastructure stacks. Service meshes like Istio provide sophisticated traffic management and observability. The Kubernetes ecosystem's maturity and tooling richness increasingly outweigh the overhead of adapting blockchain infrastructure to containerized paradigms.

Data availability and rollup observability represent emerging operational frontiers. Modular blockchain architectures separating execution, settlement, and data availability create new infrastructure categories. Data availability layers like Celestia require operating nodes that store rollup transaction data. Rollup infrastructure introduces sequencers, provers, and fraud-proof verifiers with distinct operational characteristics. Monitoring becomes more complex across modular stacks where transactions flow through multiple chains. New observability tools specifically for modular architectures are emerging to address these challenges.

Zero-knowledge proof systems introduce entirely new infrastructure requirements. Proof generation demands specialized compute, often GPUs or custom ASICs. Proof verification, while lighter, still consumes resources at scale. Infrastructure teams operating validity rollups must manage prover clusters, optimize proof generation efficiency, and ensure proof generation keeps pace with transaction demand. The specialized nature of ZK computation introduces new cost models and scaling strategies unlike previous blockchain infrastructure.

Cross-chain infrastructure is converging toward interoperability standards and protocols. Rather than each bridge or cross-chain application maintaining independent infrastructure, standard messaging protocols like IBC (Inter-Blockchain Communication) or LayerZero aim to provide common infrastructure layers. This standardization potentially simplifies multi-chain operations by reducing heterogeneity, allowing teams to focus on standard protocol implementation rather than navigating many distinct systems.

The professionalization of blockchain infrastructure continues accelerating. Infrastructure-as-a-service providers now offer comprehensive managed services comparable to cloud providers in traditional tech. Specialized infrastructure firms provide turnkey validator operations, covering everything from hardware provisioning to 24/7 monitoring. This service ecosystem allows protocols to outsource infrastructure while maintaining standards comparable to internal operations. The resulting competitive landscape pushes all infrastructure operations toward higher reliability and sophistication.

Regulatory developments will increasingly shape infrastructure operations. As jurisdictions implement crypto-specific regulations, compliance requirements may mandate specific security controls, data residency, transaction monitoring, or operational audits. Infrastructure teams will need to architect systems meeting diverse regulatory requirements across jurisdictions. This might involve geo-specific infrastructure deployments, sophisticated access controls, and comprehensive audit trails - capabilities traditionally associated with financial services infrastructure.

Sustainability and environmental considerations are becoming operational factors. Proof-of-work mining's energy consumption sparked controversy, while proof-of-stake systems dramatically reduced environmental impact. Infrastructure teams increasingly consider energy efficiency in deployment decisions, potentially preferring renewable-powered data centers or optimizing node configurations for efficiency. Some protocols commit to carbon neutrality, requiring infrastructure operations to measure and offset energy consumption.

Economic attacks and MEV (miner/maximum extractable value) present new operational security domains. Infrastructure operators increasingly must understand economic incentives that might encourage malicious behavior. Validators face decisions around MEV extraction versus censorship resistance. RPC operators must guard against timing attacks or selective transaction censorship. The intersection of infrastructure control and economic incentives creates operational security considerations beyond traditional threat models.

The convergence of crypto infrastructure with traditional cloud-native practices continues. Rather than crypto maintaining entirely separate operational practices, tooling and patterns increasingly mirror successful Web2 practices adapted for blockchain characteristics. This convergence makes hiring easier as traditional DevOps engineers can transfer many skills while learning blockchain-specific aspects. It also improves infrastructure quality by leveraging battle-tested tools and practices from other domains.

DevOps in crypto is evolving from technical necessity to strategic capability. Protocols increasingly recognize that infrastructure excellence directly impacts user experience, security, and competitive positioning. Infrastructure teams gain strategic seats at planning tables rather than being seen purely as cost centers. This elevation reflects the maturity of crypto as an industry where operational excellence distinguishes successful projects from those that struggle with reliability issues.

Conclusion: The Quiet Backbone of Web3

Behind every DeFi trade, NFT mint, and on-chain governance vote lies a sophisticated infrastructure layer that few users see but all depend on. Crypto DevOps represents the practical bridge between blockchain's decentralized promise and operational reality. Professional teams managing nodes, RPC endpoints, indexers, and monitoring systems ensure that Web3 applications remain responsive, reliable, and secure around the clock.

The discipline has matured dramatically from early blockchain days when enthusiasts ran nodes on home computers and protocols accepted frequent downtime. Today's crypto infrastructure operations rival traditional financial technology in sophistication, with enterprise-grade monitoring, comprehensive disaster recovery, and rigorous security practices. Teams balance competing demands for decentralization, reliability, cost efficiency, and scalability while managing heterogeneous systems across numerous blockchains.

Yet significant challenges remain. Infrastructure centralization around major RPC providers creates uncomfortable dependencies for supposedly decentralized applications. Multi-chain operations multiply complexity without corresponding improvements in tooling maturity. The rapid evolution of blockchain technology means operational practices often lag protocol capabilities. Security threats constantly evolve as crypto's financial stakes attract sophisticated attackers.

Looking forward, crypto DevOps stands at an inflection point. Decentralized infrastructure networks promise to align infrastructure with Web3's philosophical foundations while maintaining professional-grade reliability. AI-assisted operations may reduce operational burden and improve uptime. Regulatory frameworks will likely mandate enhanced security and compliance capabilities. Modular blockchain architectures introduce new operational layers requiring novel expertise.

Through these changes, one constant remains: crypto infrastructure requires careful operation by skilled teams. The invisible work of DevOps professionals ensures that blockchains keep running, applications remain responsive, and users can trust the infrastructure beneath their transactions. As crypto handles increasingly serious financial activity and integrates more deeply with traditional systems, infrastructure excellence becomes not just technical necessity but strategic imperative.

The field attracts practitioners who combine traditional operations expertise with genuine interest in decentralized systems. They must understand not just servers and networks but consensus mechanisms, cryptography, and the economic incentives that secure blockchains. It's a unique discipline at the intersection of systems engineering, distributed computing, and the practical implementation of decentralization.

Crypto DevOps will remain essential as Web3 grows. Whether blockchains achieve mainstream adoption or remain niche, the systems require professional operation. The protocols managing billions in value, processing millions of daily transactions, and supporting thousands of applications all depend on infrastructure teams working diligently behind the scenes.

That hidden layer - neither glamorous nor often discussed - represents the quiet backbone making Web3 functional. Understanding how it works reveals the often-underappreciated engineering and operational discipline that transforms blockchain's theoretical decentralization into practical systems that actually work.