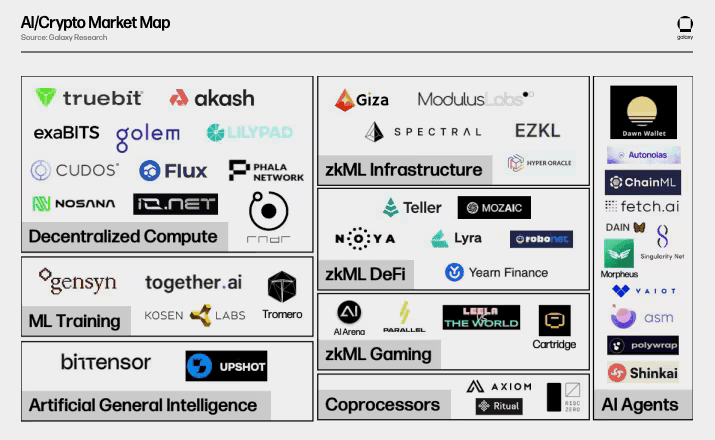

A new category of cryptocurrency is capturing market attention: AI-utility tokens that promise to bridge the digital economy with real-world computational infrastructure. As artificial intelligence reshapes industries from content creation to drug discovery, a parallel evolution is underway in crypto markets where tokens are no longer merely speculative assets but functional tools powering decentralized networks.

Three projects stand at the forefront of this shift: Bittensor (TAO), Fetch.ai (FET), and Render Token (RNDR).

Bittensor operates a decentralized machine learning network where contributors train AI models collaboratively and earn rewards. Fetch.ai deploys autonomous economic agents that execute tasks across supply chains, energy markets and decentralized finance. Render Network transforms idle GPU power into a peer-to-peer marketplace for 3D rendering, visual effects and AI inference.

These tokens represent more than incremental innovation. They signal a potential architectural shift in crypto from "digital gold" narratives centered on scarcity and store-of-value properties toward utility-driven ecosystems where tokens facilitate actual computational work. While Bitcoin and Ethereum established themselves through monetary and platform narratives, AI-utility tokens propose a different value thesis: tokens as access keys to decentralized infrastructure, payment rails for machine-to-machine economies and reward mechanisms for computational resources.

Here we dive deep into why these tokens are trending now, analyze their utility models and tokenomics, evaluate competitive dynamics and narrative risks, explore valuation frameworks, and consider broader implications for how utility tokens may evolve relative to established store-of-value assets.

Why Utility Tokens, Why Now

The convergence of AI acceleration and blockchain infrastructure has created conditions ripe for utility token adoption. Several macro-drivers explain the current momentum.

First, AI compute demand has exploded. Training advanced language models and generating synthetic media requires massive GPU resources, creating bottlenecks in centralized cloud infrastructure. Traditional providers like AWS and Google Cloud have struggled to meet demand, with data centers averaging just 12-18% utilization while GPU shortages persist. This supply-demand imbalance has pushed compute costs higher, making decentralized alternatives economically viable.

Second, earlier crypto cycles focused primarily on DeFi protocols and store-of-value narratives. But by 2024-2025, infrastructure and compute emerged as a dominant theme. The total crypto market cap crossed $4 trillion in 2025, and within that growth, AI-crypto projects captured significant investor attention. Projects offering tangible infrastructure rather than purely financial products gained traction as the market matured.

Third, tokenization offers unique advantages for coordinating distributed resources. Decentralized GPU networks like Render can aggregate idle computing power globally, enabling cost savings of up to 90% compared to centralized alternatives. Tokens provide the economic coordination layer: creators pay for rendering services in RNDR, node operators earn rewards for contributing GPU capacity, and the protocol maintains transparency through blockchain transactions.

The utility model contrasts sharply with store-of-value tokens. Bitcoin's value proposition centers on fixed supply scarcity and its positioning as digital gold. Ethereum adds programmability but still derives significant value from serving as a settlement layer and as the asset backing on-chain activity. Utility tokens like TAO, FET and RNDR instead derive value from network usage: more AI models trained on Bittensor, more autonomous agents deployed on Fetch.ai, and more rendering jobs processed on Render Network theoretically translate into greater token demand.

This shift toward utility is not merely narrative. Render Network processes rendering jobs for major studios using decentralized nodes. Fetch.ai demonstrated real-world applications including autonomous parking coordination in Cambridge and energy trading systems. Bittensor's subnet architecture now includes 128 active subnets focused on different AI domains from text generation to protein folding.

However, utility adoption faces challenges. Most tokens still trade primarily on speculative value rather than usage fundamentals. Token velocity (how quickly tokens change hands) can undermine price stability if users immediately convert rewards to other assets. The question becomes whether these protocols can generate sufficient usage to support their valuations, or whether they remain narrative-driven assets subject to hype cycles.

Token 1: Bittensor (TAO) Deep Dive

What Bittensor Is

Bittensor is an open-source protocol that powers a decentralized machine learning network. Unlike traditional AI development concentrated in tech giants' labs, Bittensor creates a peer-to-peer marketplace where developers contribute machine learning models, validators assess their quality, and contributors earn rewards based on the informational value they provide to the collective intelligence.

The protocol was founded by Jacob Steeves and Ala Shaabana, computer science researchers who launched the network to democratize AI development. The vision is ambitious: create a market for artificial intelligence where producers and consumers interact in a trustless, transparent context without centralized gatekeepers.

Utility and Mechanics

The TAO token serves multiple functions within the ecosystem. Most fundamentally, TAO grants access to the network's collective intelligence. Users extract information from trained models by paying in TAO, while contributors who add value to the network earn more stake. This creates an incentive structure where high-quality model contributions receive greater rewards.

The network operates through a subnet architecture. Each subnet specializes in different AI tasks - natural language processing, image recognition, data prediction - and uses its own evaluation logic. Models compete within subnets based on accuracy and efficiency. Validators stake TAO to assess model outputs and ensure fair scoring. Nominators back specific validators or subnets and share in rewards, similar to delegated proof-of-stake systems.

This modular design allows Bittensor to scale across numerous AI domains simultaneously. Rather than a monolithic network, the protocol functions as infrastructure for specialized AI marketplaces, each with tailored evaluation criteria and reward distributions.

Tokenomics

Bittensor's tokenomics mirror Bitcoin's scarcity model. TAO has a fixed supply of 21 million tokens, with issuance following a halving schedule. The first halving occurred in 2025, cutting daily issuance from 7,200 to 3,600 tokens. This mechanism is disinflationary rather than deflationary: supply keeps growing, but at a slower rate after each halving, in a pattern similar to Bitcoin's four-year cycles.

Currently, approximately 9.6 million TAO tokens are in circulation, representing roughly 46% of total supply. The circulating supply will continue growing but at a decreasing rate due to halvings, with full distribution projected over several decades.
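The arithmetic behind a halving schedule can be sketched in a few lines. The example below uses only the 21 million cap and the 7,200 tokens per day quoted above. It assumes, as a simplification not confirmed by the source, that each era issues half of the remaining supply at a constant daily rate, which makes every era the same length. The function names are illustrative.

```python
# Sketch of a Bitcoin-style halving schedule using the figures quoted above.
# Assumption (not from the source): each era issues half of the remaining
# supply at a constant daily rate, so all eras have the same length.

MAX_SUPPLY = 21_000_000   # fixed TAO supply cap
INITIAL_DAILY = 7_200     # tokens issued per day before the first halving


def halving_schedule(eras: int):
    """Return a list of (era, daily_issuance, cumulative_supply) tuples."""
    rows = []
    cumulative = 0.0
    for era in range(eras):
        era_issuance = MAX_SUPPLY / 2 ** (era + 1)  # 10.5M, 5.25M, ...
        daily = INITIAL_DAILY / 2 ** era            # 7200, 3600, ...
        cumulative += era_issuance
        rows.append((era, daily, cumulative))
    return rows


def era_length_days() -> float:
    """Days needed to issue the first half of supply at the initial rate."""
    return (MAX_SUPPLY / 2) / INITIAL_DAILY


if __name__ == "__main__":
    days = era_length_days()
    print(f"era length: {days:.0f} days (~{days / 365:.1f} years)")
    for era, daily, total in halving_schedule(5):
        print(f"era {era}: {daily:>7.1f} TAO/day, {total / MAX_SUPPLY:.1%} issued by era end")
```

Under these assumptions each era lasts roughly four years, and cumulative supply approaches the cap without ever exceeding it, which is why the schedule slows supply growth rather than shrinking supply.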

Mining rewards flow to contributors who successfully improve the network's intelligence. Validators earn rewards for accurate assessment of model contributions. This dual reward structure incentivizes both model development and network integrity.

Use Cases

Bittensor's applications span multiple domains. Collective learning allows healthcare institutions to train models on sensitive medical data without sharing underlying information—demonstrated in COVID-19 detection from chest X-rays with 90% accuracy. Financial institutions can collaboratively train fraud detection models while keeping proprietary data private.

The subnet structure enables specialized AI services. Text generation subnets compete to produce high-quality language outputs. Prediction markets leverage Bittensor's inference capabilities. Embedding services process and encode data for downstream applications. Each subnet operates autonomously while contributing to the broader intelligence marketplace.

Enterprise adoption remains nascent but growing. Deutsche Digital Assets and Safello launched the world's first physically-backed Bittensor ETP on the SIX Swiss Exchange in November 2025, providing institutional investors regulated exposure to TAO. This development signals maturing interest beyond retail speculation.

Competition and Ecosystem

Bittensor competes in the decentralized AI arena with projects like SingularityNET (AGIX) and Ocean Protocol (OCEAN). SingularityNET operates an AI marketplace where developers monetize algorithms and services. Ocean focuses on data marketplaces and compute-to-data applications. Each project approaches decentralized AI differently - Bittensor emphasizes collaborative model training, SingularityNET focuses on service marketplaces, Ocean prioritizes data assets.

However, the largest competitive threat comes from centralized AI giants. OpenAI, Google DeepMind and Anthropic command massive resources, proprietary datasets and cutting-edge talent. These entities can iterate faster and deploy more capable models than decentralized alternatives currently achieve. Bittensor must demonstrate that its collaborative approach produces models competitive with centralized alternatives, not just philosophically appealing but technically superior for specific use cases.

The network's WebAssembly (WASM) smart contract upgrade in 2025 expanded functionality, enabling features like lending, automated trading of subnet tokens, and cross-subnet applications. This infrastructure development aims to create a more comprehensive digital economy beyond pure model training.

Narrative Risk and Valuation

Bittensor's valuation faces several tensions. On November 12, 2025, TAO traded around $362-390, with a market capitalization near $3.7-4.1 billion. The token reached highs above $400 earlier in 2025 but experienced volatility typical of crypto assets.

Bulls point to several growth drivers. The halving mechanism reduces new issuance, potentially supporting price appreciation if demand remains steady. Analysts project targets ranging from $360-500 in 2026 to more aggressive forecasts exceeding $1,000 by 2027-2030, though these predictions carry significant uncertainty.

The fundamental question is whether network usage justifies valuation. Token velocity theory suggests that utility tokens used primarily for transactions struggle to maintain value because users quickly convert rewards into other assets. Bittensor mitigates this through staking - validators must lock TAO to participate in network consensus, reducing circulating supply and velocity.

However, if Bittensor fails to attract meaningful AI workloads beyond its current subnet activity, the token becomes primarily speculative. The protocol must demonstrate that decentralized model training offers advantages compelling enough to justify developer migration from established frameworks like TensorFlow or PyTorch combined with centralized compute.

Risks include technological competition, regulatory uncertainty around AI systems, potential security vulnerabilities in the protocol, and the challenge of maintaining decentralization as the network scales. The recent 20% weekly dip highlights persistent volatility even as broader institutional interest grows.

Token 2: Fetch.ai (FET) Deep Dive

What Fetch.ai Is

Fetch.ai is a blockchain ecosystem that leverages AI and automation to enable autonomous economic agents—digital entities that independently execute tasks on behalf of users, devices or organizations. Founded in 2017 and launched via IEO on Binance in March 2019, Fetch.ai aims to democratize access to AI technology through a decentralized network.

The platform's defining feature is Autonomous Economic Agents (AEAs). These are software entities that operate with some degree of autonomy, performing tasks like optimizing supply chains, managing smart grid energy distribution, coordinating transportation networks and automating DeFi trading. Agents discover and negotiate with each other via an Open Economic Framework, creating a machine-to-machine economy.

CEO Humayun Sheikh leads a team that envisions AI-based systems breaking the data monopoly held by large tech companies. By distributing AI capabilities across a decentralized network, Fetch.ai positions itself as infrastructure for the "agentic economy"—a future where autonomous agents represent individuals and devices in countless micro-transactions and coordination tasks.

Utility of FET

The FET token serves as the primary medium of exchange in the Fetch.ai ecosystem. When two agents connect, communicate and negotiate, one pays the other for data or services using FET. Importantly, the token supports micro-payments of fractions of a cent, enabling the granular transactions required for a machine-to-machine economy.

FET has several specific functions. It pays for network transaction fees and the deployment of AI services. Developers building autonomous agents pay in FET to access the network's machine learning utilities and computational resources. Users can stake FET to participate in network security through Fetch.ai's Proof-of-Stake consensus mechanism, earning rewards for contributing to validator nodes.

Agents must also deposit FET to register on the network, creating a staking requirement that funds their right to operate. This deposit mechanism ensures agents have economic skin in the game, reducing spam and incentivizing quality contributions.

Tokenomics and Structure

FET exists in multiple forms across different blockchains. Originally launched as an ERC-20 token on Ethereum, Fetch.ai later deployed its own mainnet built in the Cosmos ecosystem. Users can bridge between the native version and ERC-20 format, with the choice affecting transaction fees and compatibility with different DeFi ecosystems.

The maximum supply is approximately 1 billion FET tokens, though exact distribution and vesting schedules vary. The token operates on both Ethereum (for ERC-20 compatibility) and Binance Smart Chain (as a BEP-20 token), with a 1:1 token bridge allowing users to swap between networks based on their needs.

Fetch.ai is part of the Artificial Superintelligence Alliance, a collaboration with SingularityNET and Ocean Protocol announced in 2024. The alliance aims to create a unified decentralized AI ecosystem with combined market capitalization targeting top-20 crypto status. Token holders of AGIX and OCEAN can swap into FET, potentially consolidating liquidity and development efforts across projects.

Use Cases

Fetch.ai's applications span multiple sectors. In smart cities, agents coordinate parking and traffic. A Cambridge pilot demonstrated agents autonomously finding parking spots, bidding for spaces and processing payments in real time. Adding ride-hailing allows the network to dispatch vehicles based on demand patterns.

Energy markets represent another major use case. Homeowners with rooftop solar deploy agents that trade surplus energy directly with neighbors, bypassing centralized utilities. Agents negotiate prices, verify transactions and settle payments in FET, creating a peer-to-peer energy marketplace.

In logistics and supply chain, agents optimize routing, inventory management and carrier selection. A business can deploy an agent that discovers suppliers through the network, negotiates terms, compares prices, checks quality scores, places orders, arranges shipping and handles payments - all autonomously based on predefined parameters.

DeFi automation shows promise. Agents can execute complex trading strategies, optimize liquidity provision across protocols, and manage collateral positions in lending markets. In mid-2025, a Fetch.ai-backed agent won UC Berkeley's hackathon for air traffic coordination, demonstrating capabilities in allocating flight slots, managing delays and negotiating congestion zones among autonomous agents working with live data.

The partnership with Interactive Strength (TRNR) created intelligent fitness coach agents that analyze performance data, suggest customized workouts and negotiate training plans with users, all settled via FET payments.

Competitive Landscape and Risk

Fetch.ai competes with other agent-focused protocols like Autonolas (OLAS), which offers an accelerator program for autonomous agents. Virtuals Protocol emerged in late 2024 as a major competitor, building an AI agent launchpad on Base and Solana with its own ecosystem of tokenized agents.

The broader competitive threat comes from centralized AI platforms. Google, Amazon and Microsoft offer sophisticated AI services through their cloud platforms without requiring users to hold proprietary tokens. For Fetch.ai to succeed, the decentralized agent model must offer clear advantages (privacy preservation, censorship resistance, direct peer-to-peer coordination) that justify the complexity of managing crypto assets.

Regulatory uncertainty poses risks. AI systems that operate autonomously may face scrutiny under emerging regulations. The EU AI Act's risk-based approach could classify Fetch.ai's agents as "high-risk" when operating in sectors like energy or logistics, requiring audits and oversight that increase operational costs.

Skepticism about the agent economy narrative persists. Critics question whether autonomous agents will achieve mainstream adoption or remain a niche technical curiosity. If the machine-to-machine economy fails to materialize at scale, FET becomes a solution seeking a problem.

On November 12, 2025, FET traded around $0.25-0.30, having experienced significant volatility throughout the year. The token gained attention when Interactive Strength announced plans for a $500 million crypto treasury centered on FET, signaling institutional confidence in the project's long-term potential.

Some analyst forecasts put FET at $6.71 by 2030, though such projections carry substantial uncertainty. The fundamental question is whether agent-based coordination offers enough value to justify token economics, or whether simpler centralized alternatives prevail.

Recent developments show promise. Fetch.ai launched a $10 million accelerator in early 2025 to invest in startups building on its infrastructure. This signals commitment to ecosystem growth beyond speculative trading.

Token 3: Render Token (RNDR) Deep Dive

What Render Network Is

Render Network is a decentralized GPU rendering platform that connects creators needing computational power with individuals and organizations offering idle GPU resources. Originally conceived in 2009 by OTOY CEO Jules Urbach and launched publicly in April 2020, Render has evolved into a leading decentralized physical infrastructure network (DePIN) for graphics and AI workloads.

The network operates as a peer-to-peer marketplace. Creators submit rendering jobs - 3D graphics, visual effects, architectural visualizations, AI inference - to the network. Node operators with spare GPU capacity pick up jobs and process them in exchange for RNDR tokens. The platform leverages OTOY's industry-leading OctaneRender software, providing professional-grade rendering capabilities through distributed infrastructure.

Render Network addresses a fundamental bottleneck: high-quality rendering requires massive GPU power, but centralized cloud services are expensive and may lack capacity during peak demand. By aggregating underutilized GPUs globally, Render democratizes access to professional rendering tools at a fraction of traditional costs.

Utility Token RNDR

The RNDR token (now RENDER after migrating to Solana) serves as the network's native utility token. Creators pay for rendering services in RENDER, with costs determined by GPU power required, measured in OctaneBench (OBH) - a standardized unit developed by OTOY to quantify rendering capacity.

Node operators earn RENDER for completing jobs. The network implements a tiered reputation system: Tier 1 (Trusted Partners), Tier 2 (Priority) and Tier 3 (Economy). Higher-tier node operators charge premium rates but offer guaranteed reliability. Creators' reputation scores influence job assignment speed—those with strong histories access resources faster.

Governance rights accompany RENDER tokens. Holders vote on network upgrades, protocol changes and funding proposals through the Render DAO. This decentralized governance ensures the community shapes the network's evolution rather than a centralized foundation alone.

The Burn-and-Mint Equilibrium mechanism implemented in January 2023 manages token supply dynamically. When creators pay for rendering, 95% of tokens are burned, removing them from circulation. Node operators receive newly minted tokens to maintain economic balance. This design makes RENDER potentially deflationary as network usage grows, since burn rate can exceed mint rate if demand is strong.
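Whether supply shrinks or grows in a given period comes down to comparing burned tokens with newly minted ones. The sketch below illustrates that comparison for a single epoch. Only the 95% burn share comes from the text. The emission and payment figures are hypothetical inputs chosen for illustration, not Render Network data, and the function names are invented for this example.

```python
# Sketch of one Burn-and-Mint Equilibrium epoch.
# The 95% burn share comes from the text; the emission and payment figures
# used in the demo are hypothetical, not Render Network data.

BURN_SHARE = 0.95


def net_supply_change(payments: float, minted: float,
                      burn_share: float = BURN_SHARE) -> float:
    """Tokens added to (positive) or removed from (negative) circulation."""
    burned = payments * burn_share
    return minted - burned


def breakeven_payments(minted: float, burn_share: float = BURN_SHARE) -> float:
    """Payment volume at which burns exactly offset newly minted tokens."""
    return minted / burn_share


if __name__ == "__main__":
    minted = 95_000  # hypothetical tokens minted to node operators this epoch
    for payments in (50_000, 200_000):  # hypothetical creator payments
        delta = net_supply_change(payments, minted)
        trend = "inflationary" if delta > 0 else "deflationary"
        print(f"payments={payments:>7,}: net change {delta:+,.0f} tokens ({trend})")
    print(f"breakeven payments: {breakeven_payments(minted):,.0f} tokens")
```

With these illustrative inputs, the low-usage epoch adds tokens to circulation and the high-usage epoch removes them, so the deflationary outcome depends on payment volume exceeding the breakeven level.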

Tokenomics

RENDER migrated from Ethereum to Solana in late 2023 following a community vote. This transition aimed to leverage Solana's faster transactions and lower fees. The original RNDR (ERC-20) token on Ethereum was upgraded to RENDER (SPL token) on Solana. The total supply is capped at 644,168,762 tokens, with approximately 517 million in circulation as of 2025.

Token distribution allocated 25% to public sales, 10% to reserves, and 65% held in escrow to modulate supply-demand flows. This reserve allows the foundation to manage token availability as the network scales.

Use Cases

Render Network serves multiple industries. Film and television production companies use the network for visual effects rendering. Major studios have rendered projects using decentralized nodes, demonstrating the system's capability for professional workflows with end-to-end encryption ensuring intellectual property protection.

Gaming developers leverage Render for 3D asset creation and real-time rendering. Metaverse projects rely on the network for generating immersive environments and avatar graphics. The scalability of distributed GPU power allows creators to spin up rendering capacity as needed without investing in expensive local hardware.

Architects and product designers use Render for high-quality 3D visualizations. Architectural firms create virtual reality walkthroughs of buildings before construction. Product designers prototype at scale, testing textures and colors through parallelized GPU rendering.

AI inference represents a growing use case. In July 2025, Render onboarded NVIDIA RTX 5090 GPUs specifically for AI compute workloads in the United States. Training certain AI models, especially those involving image or video generation, benefits from distributed GPU power. The network's infrastructure can accelerate AI training significantly compared to single-machine setups.

Competitive Dynamics

Render competes with both centralized and decentralized providers. Traditional GPU cloud services from AWS, Google Cloud and specialized providers like CoreWeave offer streamlined interfaces and reliable SLAs. However, they command premium prices and may have limited capacity during peak demand periods.

In the decentralized space, competitors include Akash Network (AKT), io.net (IO) and Aethir. Each platform approaches GPU marketplace coordination differently - Akash focuses on broader cloud infrastructure, io.net emphasizes AI/ML workloads, Aethir targets gaming and entertainment. Render differentiates through its integration with OTOY's professional rendering software and its established reputation among creative professionals.

The value capture question persists. GPU compute is becoming increasingly commoditized as more providers enter the market. Render must demonstrate that its decentralized model offers clear advantages - cost efficiency, global availability, censorship resistance - that justify using crypto tokens rather than credit cards with centralized providers.

Partnerships with major companies provide validation. Ari Emanuel (Co-CEO of Endeavor) has publicly supported Render Network, signing deals with Disney, HBO, Facebook and Unity. These partnerships signal mainstream recognition, though converting relationships into consistent network usage remains the challenge.

On November 12, 2025, RENDER traded around $4.50-5.00, with a market cap near $2.5-3 billion. The token had risen more than 13,300% from its initial price by early 2024, though it has since consolidated. Analysts attribute the rally to AI and GPU/NVIDIA narratives, with the Apple partnership providing additional credibility.

Risks include competition from centralized providers scaling more efficiently, potential hardware centralization as node economics favor large operators, and the question of whether decentralized GPU marketplaces achieve sustainable adoption or remain niche solutions.

Comparative Analysis: Utility Tokens vs Store-of-Value Tokens

AI-utility tokens operate under fundamentally different value propositions than store-of-value tokens like Bitcoin and Ethereum. Understanding these distinctions illuminates both opportunities and challenges facing the utility token category.

Purpose and Demand Drivers

Bitcoin's value derives primarily from its positioning as digital gold - a scarce, decentralized store of value and hedge against monetary inflation. Bitcoin's 21 million supply cap and market cap exceeding $2 trillion position it as a macro asset class. Ethereum adds programmability, deriving value from serving as a settlement layer for DeFi protocols, NFTs and other applications, with demand for ETH coming from gas fees and staking requirements.

Utility tokens like TAO, FET and RENDER instead derive value from network usage. Demand theoretically correlates with computational jobs processed, agents deployed and rendering tasks completed. More AI models trained on Bittensor should increase TAO demand for accessing intelligence. More autonomous agents on Fetch.ai should drive FET transactions. More rendering jobs should burn more RENDER tokens.

Tokenomics and Governance

Store-of-value tokens emphasize scarcity. Bitcoin's fixed supply and halving cycles create predictable issuance reduction. Ethereum transitioned to Proof-of-Stake, and its EIP-1559 fee mechanism burns the base fee of each transaction, introducing deflationary pressure when network usage is high.

Utility tokens employ varied approaches. Bittensor mimics Bitcoin's halving model, creating scarcity. Render's Burn-and-Mint Equilibrium ties supply to usage - high demand burns more tokens than are minted, reducing supply. Fetch.ai maintains a fixed supply but relies on staking incentives to reduce velocity.

Governance differs significantly. Bitcoin maintains a conservative development approach with minimal protocol changes. Ethereum uses off-chain coordination and eventual rough consensus. Utility tokens often implement direct on-chain governance where token holders vote on protocol upgrades, funding proposals and parameter adjustments, giving communities more active stewardship.

Adoption Paths and User Base

Store-of-value tokens target investors seeking exposure to crypto assets or hedging against traditional finance. Bitcoin appeals to those believing in sound money principles. Ethereum attracts developers and users interacting with DeFi and Web3 applications.

Utility tokens must attract specific user types. Bittensor needs AI researchers and data scientists choosing decentralized model training over established frameworks. Fetch.ai requires developers building autonomous agents for real-world applications. Render needs creative professionals trusting decentralized infrastructure for production workflows.

These adoption hurdles are steeper. Developers face switching costs from existing tools. Enterprises require reliability and support that nascent decentralized networks may struggle to provide. Utility tokens must demonstrate clear advantages - cost, performance, features - to overcome inertia.

Value Capture Mechanisms

Store-of-value tokens capture value through scarcity and network effects. As more participants recognize Bitcoin as a store of value, demand increases while supply remains fixed, pushing prices higher. This speculative loop reinforces itself, though it also creates volatility.

Utility tokens face the velocity problem. If users immediately convert earned tokens into fiat or other crypto, high velocity prevents value accumulation. The Equation of Exchange (M×V = P×Q) suggests that for a given transaction volume (P×Q), higher velocity (V) means lower market cap (M).
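The relationship can be made concrete by solving the equation for M. The sketch below treats P×Q as annual spending on the network in dollars and V as the number of times the average token changes hands per year. The dollar figures are hypothetical and the function name is illustrative.

```python
# Sketch: solving the Equation of Exchange (M*V = P*Q) for M.
# All dollar figures in the demo are hypothetical.


def implied_market_cap(annual_volume_usd: float, velocity: float) -> float:
    """Return M = (P*Q) / V, the token value needed to support the volume."""
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    return annual_volume_usd / velocity


if __name__ == "__main__":
    volume = 100_000_000  # hypothetical $100M of annual network spending
    for v in (5, 20):
        cap = implied_market_cap(volume, v)
        print(f"velocity {v:>2}: implied market cap ${cap:,.0f}")
```

For the same $100 million of usage, quadrupling velocity from 5 to 20 cuts the implied market cap from $20 million to $5 million, which is why the mechanisms that slow circulation matter for valuation.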

Protocols mitigate velocity through several mechanisms. Staking requirements lock tokens, reducing circulating supply. Bittensor requires validators to stake TAO. Fetch.ai rewards stakers with network fees. Burn mechanisms like Render's remove tokens from circulation permanently. Governance rights create incentives to hold tokens for voting power.

Market Performance and Trajectories

Bitcoin hit all-time highs above $126,000 in 2025, continuing its trajectory as a macro asset. Ethereum recovered from post-2022 drawdowns, maintaining its position as the primary smart contract platform.

AI-utility tokens showed more volatile performance. TAO traded between $200 and $750 in 2024-2025, with market cap reaching $3.7-4.1 billion at peaks. FET experienced significant moves, particularly around the Artificial Superintelligence Alliance announcement. RENDER saw explosive growth in 2023-2024 before consolidating.

These tokens trade on both speculation and fundamentals. When AI narratives dominate crypto discourse, utility tokens outperform. During downturns, they often underperform Bitcoin and Ethereum as investors flee to perceived safer assets.

Coexistence or Competition?

The question is whether utility tokens represent the "next wave" or coexist as a complementary category. Evidence suggests coexistence is more likely. Store-of-value tokens serve different purposes than operational tokens. Bitcoin functions as digital gold, Ethereum as a programmable settlement layer, while utility tokens act as fuel for specific applications.

However, success is not guaranteed. Most utility tokens may fail if usage doesn't materialize or if centralized alternatives prove superior. The AI-crypto market cap reached $24-27 billion in 2025, substantial but small compared to Bitcoin alone exceeding $2 trillion.

Winners will likely demonstrate:

- Sustained network usage growing independent of speculation

- Clear advantages over centralized alternatives

- Strong developer ecosystems and enterprise adoption

- Effective velocity mitigation through staking or burning

- Governance models that balance decentralization with efficiency

The ultimate test is whether utility tokens become infrastructure for AI workloads at scale, or whether they remain niche solutions overshadowed by centralized cloud providers.

Valuation, Adoption Metrics & Narrative Risk

Evaluating utility tokens requires different frameworks than assessing store-of-value assets. While Bitcoin can be valued through stock-to-flow models or as digital gold comparable to precious metals, utility tokens demand usage-based metrics.

Key Metrics for Utility Tokens

Network usage statistics provide the foundation. For Bittensor, meaningful metrics include:

- Number of active subnets and their specializations

- Compute hours dedicated to model training

- Count of miners and validators securing the network

- Transaction volume flowing through the protocol

- Successful model deployments serving real applications

Bittensor reports 128 active subnets as of late 2025, a substantial increase from earlier periods. However, assessing whether these subnets generate genuine demand versus speculative activity requires deeper investigation.

For Fetch.ai, relevant metrics include:

- Number of deployed autonomous agents

- Agent-to-agent interactions and transaction volume

- Real-world integrations across industries

- Partnerships with enterprises or governments

- Staking participation and validator counts

Fetch.ai demonstrated proof-of-concepts in parking coordination, energy trading and logistics, but scaling from pilots to widespread adoption remains the challenge.

For Render Network, critical indicators are:

- Rendering jobs processed monthly

- Number of active node operators providing GPU capacity

- Enterprise clients using the network for production workflows

- Burn rate compared to mint rate under Burn-and-Mint Equilibrium

- GPU hours utilized across the decentralized network

Render has secured major studio partnerships and processes real rendering workloads, providing more concrete usage evidence than many utility tokens.

Token Velocity and Burn Metrics

Token velocity measures how quickly tokens circulate through the economy. High velocity indicates that users spend or convert tokens immediately, which prevents value accumulation. Low velocity suggests tokens are held longer, potentially as a store of value or for staking rewards.

Bitcoin shows a velocity of 4.1% and Ethereum 3.6%, indicating mature assets that are predominantly held rather than transacted. Utility tokens typically show higher velocities initially, as users receive tokens for work and immediately convert them to stable currencies.
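One common way to put a number on velocity is a turnover ratio: the value transferred over a period divided by the average network value over the same period. The sketch below assumes that definition; the article does not state how the Bitcoin and Ethereum figures were computed, and the function name and sample inputs are hypothetical.

```python
def token_velocity(transfer_volume_usd: float, avg_network_value_usd: float) -> float:
    """Turnover ratio: value transferred in a period / average network value.

    Assumed definition for illustration; other velocity measures exist.
    """
    if avg_network_value_usd <= 0:
        raise ValueError("average network value must be positive")
    return transfer_volume_usd / avg_network_value_usd


# Hypothetical inputs, not market data.
mostly_held = token_velocity(transfer_volume_usd=41e6, avg_network_value_usd=1e9)
mostly_spent = token_velocity(transfer_volume_usd=600e6, avg_network_value_usd=1e9)

print(f"mostly held:  {mostly_held:.1%}")
print(f"mostly spent: {mostly_spent:.1%}")
```

A token that workers receive and sell straight away lands closer to the second case, which is the pattern staking and burning are meant to counter.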

Burn mechanisms combat high velocity. Render's system burns 95% of payment tokens with each transaction, removing supply. If burn rate exceeds mint rate, circulating supply decreases, potentially supporting price appreciation if demand remains constant.

Evaluating burns requires transparency. Projects should publish regular burn reports showing tokens removed from circulation. Render provides this data, enabling independent verification of deflationary claims.
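The burn-versus-mint comparison can be checked with simple arithmetic on published burn reports. The sketch below is an illustration only: the `EpochReport` structure, the per-epoch figures and the starting supply are hypothetical, and the 95% burn share is the figure quoted above rather than a verified protocol parameter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EpochReport:
    payments: float  # tokens paid for jobs during the epoch (hypothetical)
    minted: float    # tokens newly emitted to node operators (hypothetical)


def net_supply_change(report: EpochReport, burn_share: float = 0.95) -> float:
    """Positive means supply grew; negative means burns outpaced mints."""
    burned = report.payments * burn_share
    return report.minted - burned


def project_supply(initial: float, reports: list[EpochReport],
                   burn_share: float = 0.95) -> list[float]:
    """Circulating supply after each epoch, starting from `initial`."""
    supply = initial
    path = []
    for report in reports:
        supply += net_supply_change(report, burn_share)
        path.append(supply)
    return path


# Low usage in the first epoch (inflationary), higher usage in the second (deflationary).
reports = [EpochReport(payments=100_000, minted=120_000),
           EpochReport(payments=200_000, minted=120_000)]
for epoch, supply in enumerate(project_supply(1_000_000, reports), start=1):
    print(f"epoch {epoch}: supply ~ {supply:,.0f}")
```

With a fixed mint schedule, supply only contracts once payment volume is high enough for burns to exceed emissions, which is why the burn rate doubles as a usage signal.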

Real-World Partnerships and Integrations

Enterprise adoption signals genuine utility. Bittensor's first ETP launch on SIX Swiss Exchange provides institutional access. Interactive Strength's $500 million FET treasury demonstrates corporate confidence. Render's partnerships with Disney, HBO and Unity validate the platform's capabilities for production workflows.

However, partnerships alone don't guarantee sustained usage. Many blockchain projects announce partnerships that fail to materialize into significant revenue or network activity. Tracking actual transaction volume stemming from enterprise relationships provides clearer insight.

Narrative Risks

Several narrative risks threaten utility tokens' valuations:

AI + Crypto Hype Without Delivery: The convergence of AI and blockchain creates powerful narratives, but if decentralized AI systems fail to match centralized alternatives' performance, valuations deflate. Most experts expect only select AI-crypto projects to succeed long-term, with many remaining speculative.

Compute Without Demand: Building decentralized GPU infrastructure is meaningless if developers don't use it. If usage fails to scale beyond early adopters and evangelists, tokens become solutions seeking problems. The question is whether decentralized compute can capture meaningful market share from AWS, Google Cloud and other centralized giants.

Regulatory Threats: Governments worldwide are developing AI regulations. The EU AI Act's risk-based framework may classify certain AI systems as high-risk, requiring audits and oversight. Autonomous agents making economic decisions could face scrutiny. Uncertainty around whether utility tokens constitute securities adds regulatory risk.

Hardware Centralization: Decentralized networks risk recentralization. If mining or node operation becomes economically viable only for large players with economies of scale, the decentralization promise fades. GPU networks could consolidate around major data centers, defeating the purpose of peer-to-peer infrastructure.

Technical Limitations: Decentralized systems face inherent tradeoffs. Coordination overhead, latency and reliability concerns may prevent utility tokens from competing with optimized centralized alternatives. If technical limitations prove insurmountable, adoption stalls.

Valuation Frameworks

Traditional financial models struggle with utility tokens. Discounted cash flow (DCF) works for tokens with profit-sharing: Augur, for example, pays REP holders for network work, creating cash flow streams amenable to DCF analysis. But pure utility tokens without dividends lack obvious cash flows to discount.

The Equation of Exchange offers one approach: M×V = P×Q, where M is market cap (what we're solving for), V is velocity, P is price per transaction, and Q is quantity of transactions. Rearranging: M = P×Q / V. This implies market cap equals transaction volume divided by velocity.

Higher transaction volume (P×Q) supports higher valuations. Lower velocity (V) also supports higher valuations. Projects must either increase usage or decrease velocity - ideally both. Staking reduces velocity; burn mechanisms reduce supply; real utility increases transaction volume.
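The rearranged Equation of Exchange, M = P×Q / V, is easy to explore numerically. The sketch below uses made-up inputs to show how the implied network value responds to usage and velocity; the function names and all figures are hypothetical, and it makes no claim about any real token.

```python
def implied_network_value(price_per_tx: float, tx_count: float, velocity: float) -> float:
    """M = (P * Q) / V from the Equation of Exchange."""
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    return price_per_tx * tx_count / velocity


def implied_token_price(network_value: float, circulating_supply: float) -> float:
    """Spread the implied network value over circulating tokens."""
    if circulating_supply <= 0:
        raise ValueError("circulating supply must be positive")
    return network_value / circulating_supply


# Hypothetical network: $2 average transaction, 50M transactions a year, velocity 5.
base = implied_network_value(price_per_tx=2.0, tx_count=50e6, velocity=5.0)
more_usage = implied_network_value(price_per_tx=2.0, tx_count=100e6, velocity=5.0)
more_holding = implied_network_value(price_per_tx=2.0, tx_count=50e6, velocity=2.5)

print(f"base:            ${base:,.0f}")
print(f"usage doubled:   ${more_usage:,.0f}")
print(f"velocity halved: ${more_holding:,.0f}")
print(f"price at 10M tokens: ${implied_token_price(base, 10e6):.2f}")
```

In this model, doubling usage and halving velocity have the same effect on M, which is why staking and burn mechanics carry as much weight as adoption.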

Metcalfe's Law suggests network value grows in proportion to the square of the number of users. As more participants join Bittensor, Fetch.ai or Render, network effects could drive quadratic value growth, so that doubling the user base roughly quadruples network value. However, this law assumes all connections are valuable - not always true for early-stage networks.
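A short numerical sketch shows what Metcalfe's Law implies: value scales with the square of the user count. The proportionality constant `k` and the user counts are arbitrary assumptions, since the law only fixes the shape of the curve, not its level.

```python
def metcalfe_value(users: int, k: float = 1.0) -> float:
    """Network value under Metcalfe's Law: proportional to users squared.

    `k` is an arbitrary scaling constant chosen for illustration.
    """
    if users < 0:
        raise ValueError("users must be non-negative")
    return k * users ** 2


for users in (1_000, 2_000, 4_000):
    ratio = metcalfe_value(users) / metcalfe_value(1_000)
    print(f"{users:>5} users -> {ratio:.0f}x the value at 1,000 users")
```

If only a fraction of connections is actually valuable, as the caveat above notes, the effective constant is smaller, so an early-stage network can sit well below this curve.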

Comparative valuation looks at similar projects. If Bittensor achieves network usage similar to that of SingularityNET or Ocean Protocol, comparing market caps provides rough benchmarks. However, each project's unique tokenomics and use cases limit the usefulness of direct comparison.

Ultimately, utility token valuation remains speculative. Until networks demonstrate sustained usage independent of speculation, prices reflect narrative strength and market sentiment as much as fundamental value.

What Comes Next: Scenarios for the Future

The trajectory for AI-utility tokens depends on several uncertain variables: technology adoption rates, regulatory developments, competition from centralized providers, and tokens' ability to capture value from network usage. Three broad scenarios illuminate possible futures.

Best Case: Infrastructure Tokens Become Core Layer

In this optimistic scenario, decentralized AI infrastructure achieves mainstream adoption. Bittensor becomes the preferred platform for collaborative AI model training, attracting major research institutions and enterprises. The subnet architecture proves superior to centralized frameworks for certain use cases - privacy-preserving healthcare AI, decentralized model marketplaces, crowdsourced intelligence.

Fetch.ai's autonomous agents proliferate across industries. Smart cities deploy agent networks for traffic coordination, energy distribution and public services. Supply chains standardize on agent-based optimization. DeFi protocols integrate agents for automated strategy execution. The "agentic economy" materializes as predicted, with billions of micro-transactions coordinated by autonomous software.

Render Network captures significant market share from centralized GPU providers. Creative professionals and AI researchers routinely use decentralized compute for production workflows. The global cloud gaming market, projected to reach $121 billion by 2032, drives demand for distributed GPU infrastructure.

In this scenario, utility tokens gain lasting value through:

- Sustained usage growth: Network activity increases independent of speculation

- Velocity mitigation: Staking, burning and governance incentives keep tokens held rather than immediately sold

- Network effects: As more users join, platforms become more valuable to all participants

- Regulatory clarity: Frameworks emerge that accommodate decentralized AI while protecting consumers

Token prices could reach optimistic analyst projections - TAO exceeding $1,000, FET approaching $6-10, RENDER surpassing $20 - if usage fundamentals justify valuations. Market caps would grow proportionally, with leading AI-utility tokens potentially reaching $20-50 billion valuations as they capture portions of trillion-dollar AI and cloud computing markets.

For investors, this represents significant appreciation from current levels. For developers, it validates decentralized infrastructure as a viable alternative to centralized cloud providers. For crypto markets, it proves that utility tokens can evolve beyond speculation into functional infrastructure assets.

Baseline: Select Tokens Succeed, Many Plateau

A more realistic scenario acknowledges that only a subset of current AI-utility tokens will achieve sustained adoption. Winners distinguish themselves through superior technology, strong ecosystems, real partnerships and effective value capture mechanisms. Most projects plateau or fade as users recognize limited practical utility.

In this scenario, Bittensor, Fetch.ai and Render - as leading projects - have better chances than smaller competitors. However, even these face challenges. Decentralized AI proves valuable for specific niches - privacy-critical applications, censorship-resistant networks, certain research domains - but fails to displace centralized providers for most use cases.

Store-of-value tokens remain dominant. Bitcoin solidifies its position as digital gold. Ethereum continues serving as the primary settlement layer for decentralized applications. AI-utility tokens coexist as infrastructure for specialized applications rather than general-purpose platforms.

Token prices reflect modest usage growth. TAO might reach $500-800, FET $2-4, RENDER $8-12 over coming years - meaningful appreciation but far from explosive forecasts. Market caps grow but remain orders of magnitude below Bitcoin and Ethereum.

Several factors characterize this baseline:

- Niche adoption: Utility tokens serve specific verticals or use cases effectively

- Centralized competition: AWS, Google Cloud and other giants maintain dominance for general compute

- Regulatory overhead: Compliance requirements add friction to decentralized platforms

- Technical tradeoffs: Decentralized systems prove slower, more complex or less reliable than centralized alternatives for many applications

For investors, moderate appreciation rewards early supporters but underperforms most bullish projections. For crypto markets, utility tokens establish legitimacy as an asset category distinct from store-of-value tokens, but with more moderate valuations.

Downside: Usage Fails to Materialize

The pessimistic scenario sees utility tokens unable to translate technical capabilities into sustained demand. Despite impressive infrastructure, users don't migrate from established platforms. Developers continue using TensorFlow, PyTorch and centralized cloud compute rather than learning new decentralized protocols. Creative professionals stick with Adobe, Autodesk and traditional render farms instead of experimenting with crypto-enabled alternatives.

In this scenario, AI-utility tokens become primarily speculative assets. Prices fluctuate based on broader crypto market sentiment and AI hype cycles rather than fundamental usage. When narratives fade - as they did for many 2017-2018 ICO tokens - valuations collapse.

Several dynamics could produce this outcome:

- User experience friction: Managing wallets, paying gas fees and navigating decentralized protocols proves too cumbersome for mainstream users

- Performance gaps: Centralized alternatives remain faster, more reliable and more feature-rich than decentralized options

- Economic viability: Token economics fail to align incentives properly, leading to provider churn, quality issues or network instability

- Regulatory crackdowns: Governments classify utility tokens as securities or ban certain applications, limiting legal usage

Token prices would revert to speculative lows. TAO might drop below $200, FET below $0.50, RENDER below $3 as investors recognize lack of fundamental demand. Projects might survive with dedicated communities but fail to achieve meaningful scale.

This scenario represents an existential risk for the utility token category. If leading projects with substantial funding, talented teams and real partnerships can't demonstrate product-market fit, it suggests the decentralized AI/compute model fundamentally doesn't work at scale.

Implications Across Scenarios

For Investors: Risk-reward profiles vary dramatically across scenarios. The best case offers multi-bagger returns but requires several uncertainties to resolve favorably. The baseline provides modest appreciation with lower risk. The downside means significant losses.

Portfolio construction should account for scenario probabilities. Allocating small percentages to utility tokens provides asymmetric upside if best case materializes while limiting downside exposure. Concentrating in utility tokens over store-of-value assets increases volatility and risk.
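Weighting scenarios by probability can be made explicit with a small expected-value calculation. In the sketch below, the TAO prices are representative points taken from the three scenarios above (the baseline uses the midpoint of the $500-800 range). The probabilities and the entry price are purely hypothetical placeholders, not forecasts or quotes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    probability: float  # subjective weight; weights must sum to 1
    price: float        # representative token price in this scenario


def expected_price(scenarios: list[Scenario]) -> float:
    """Probability-weighted average price across scenarios."""
    total = sum(s.probability for s in scenarios)
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total}, expected 1.0")
    return sum(s.probability * s.price for s in scenarios)


def expected_return(scenarios: list[Scenario], entry_price: float) -> float:
    """Expected simple return relative to a given entry price."""
    if entry_price <= 0:
        raise ValueError("entry price must be positive")
    return expected_price(scenarios) / entry_price - 1.0


# Probabilities are illustrative assumptions only.
tao_scenarios = [
    Scenario("best case", 0.2, 1000.0),
    Scenario("baseline", 0.5, 650.0),
    Scenario("downside", 0.3, 200.0),
]

print(f"expected price:  {expected_price(tao_scenarios):.2f}")
# 400.0 is a hypothetical entry price, not a market quote.
print(f"expected return: {expected_return(tao_scenarios, entry_price=400.0):.1%}")
```

The result is only as good as the weights fed into it, and shifting probability from the baseline to the downside changes the picture quickly, which is the argument for keeping allocations small.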

For Developers: Building on utility token platforms requires assessing long-term viability. If baseline or downside scenarios materialize, applications built on these platforms may struggle to find users or funding. Developers should maintain optionality - designing applications portable across platforms or capable of operating with centralized backends if decentralized infrastructure proves inadequate.
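One way to keep that optionality is to code against a narrow backend interface and fall back when the preferred backend is unavailable. The sketch below is a generic pattern, not any project's SDK: every class, method and job name is hypothetical, and the stub backends only return strings.

```python
from typing import Protocol, Sequence


class BackendUnavailable(Exception):
    """Raised by a backend that cannot accept the job right now."""


class ComputeBackend(Protocol):
    name: str

    def submit(self, job: str) -> str: ...


class StubDecentralizedBackend:
    """Stand-in for a decentralized compute network client (hypothetical)."""
    name = "decentralized"

    def __init__(self, available: bool = True) -> None:
        self.available = available

    def submit(self, job: str) -> str:
        if not self.available:
            raise BackendUnavailable("no node accepted the job")
        return f"decentralized:{job}"


class StubCentralizedBackend:
    """Stand-in for a conventional cloud provider client (hypothetical)."""
    name = "centralized"

    def submit(self, job: str) -> str:
        return f"centralized:{job}"


def run_with_fallback(job: str, backends: Sequence[ComputeBackend]) -> str:
    """Try backends in priority order; return the first successful result."""
    failures = []
    for backend in backends:
        try:
            return backend.submit(job)
        except BackendUnavailable as exc:
            failures.append(f"{backend.name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(failures))


result = run_with_fallback(
    "render-scene-42",
    [StubDecentralizedBackend(available=False), StubCentralizedBackend()],
)
print(result)
```

Because application code depends only on `submit`, swapping or reordering backends is a configuration change rather than a rewrite.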

For Crypto Market Structure: The success or failure of utility tokens shapes crypto's evolution. If best case unfolds, crypto expands beyond store-of-value and DeFi into real infrastructure. If downside happens, crypto remains primarily a speculative and financial domain.

What to Watch

Several indicators will clarify which scenario unfolds:

Node Counts and Participation: Growing numbers of miners, validators and GPU providers signal genuine network effects. Stagnant or declining participation suggests lack of economic viability.

Compute Jobs Processed: Actual rendering jobs, AI training runs and agent interactions - not just testnet activity - demonstrate real demand. Projects should publish transparent usage statistics.

Enterprise Partnerships: Converting announced partnerships into measurable transaction volume validates business models. Partnerships without accompanying usage indicate potential vaporware.

Token Burns and Staking: For projects with burn mechanisms, burn rate exceeding mint rate indicates strong demand. High staking participation reduces velocity and shows long-term holder confidence.

Developer Activity: Growing developer ecosystems - measured by GitHub commits, new protocols built atop platforms, hackathon participation - signal healthy foundations. Declining developer interest presages stagnation.

Regulatory Clarity: Clearer frameworks around utility tokens, AI systems and decentralized infrastructure reduce uncertainty. Favorable regulations accelerate adoption; restrictive ones impede it.

Hardware Ecosystems: Integration with major GPU manufacturers or cloud providers legitimizes decentralized compute. Nvidia, AMD and others partnering with or acknowledging utility token platforms would signal mainstream validation.

Tracking these metrics over 2025-2027 will clarify whether AI-utility tokens represent genuine infrastructure innovation or primarily speculative vehicles. The distinction will determine whether these assets achieve lasting significance in crypto markets or fade as another narrative cycle concludes.

Final Thoughts

AI-utility tokens represent a meaningful evolution in crypto's architectural narrative. Bittensor, Fetch.ai and Render Network demonstrate that tokens can serve purposes beyond store-of-value or speculative trading - they can coordinate decentralized infrastructure, incentivize computational work and enable machine-to-machine economies.

The fundamental thesis is compelling. Decentralized GPU networks aggregate underutilized resources, reducing costs and democratizing access. Autonomous agents enable coordination at scales impractical for human mediation. Collaborative AI development distributes intelligence creation beyond tech giants' monopolies. These visions address real problems in infrastructure scalability, AI accessibility and economic coordination.

However, translating vision into sustained adoption remains the critical challenge. Utility tokens must demonstrate clear advantages over centralized alternatives while overcoming friction inherent to decentralized systems. They must capture value through usage rather than speculation, solve the velocity problem through effective tokenomics, and achieve product-market fit with enterprises and developers.

The shift from store-of-value to utility tokens matters for crypto's next phase. If successful, utility tokens prove that crypto enables functional infrastructure, not just financial assets. This expands total addressable market significantly - from investors seeking exposure to digital gold or DeFi yields, to developers needing compute resources and enterprises optimizing operations.

Evidence remains mixed. Real usage exists - Render processes production rendering jobs, Fetch.ai has deployed pilots across industries, Bittensor operates active AI subnets. Yet usage remains small relative to valuations. Market caps in the billions price in substantial future growth that may or may not materialize.

The coming years will determine which scenario unfolds. Will decentralized AI infrastructure capture meaningful portions of trillion-dollar markets? Will autonomous agent economies proliferate beyond niche applications? Or will centralized alternatives' advantages in performance, reliability and user experience prove insurmountable?

For investors and developers, tracking usage and infrastructure growth separates genuine winners from narrative-only projects. Node operator counts, compute jobs processed, token burn rates, enterprise partnerships and developer ecosystems provide signal amid speculation.

The most important realization is that utility tokens face fundamentally different challenges than store-of-value assets. Bitcoin succeeded by being scarce and secure - adoption meant convincing people to hold it. Utility tokens must be used - adoption means convincing developers to build on them and enterprises to integrate them into production workflows. This is a higher bar, but also potentially more impactful if achieved.

As crypto markets mature beyond pure speculation toward functional infrastructure, AI-utility tokens will either validate this evolution or serve as cautionary tales about overpromising and underdelivering. The technology exists, the vision is articulated, and capital is available. What remains uncertain is whether demand at scale will materialize - or whether, once again, crypto has built infrastructure waiting for users who may never arrive.